Abstract

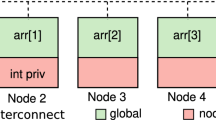

The main attraction of Partitioned Global Address Space (PGAS) languages to programmers is the ability to distribute the data to exploit the affinity of threads within shared-memory domains. Thus, PGAS languages, such as Unified Parallel C (UPC), are a promising programming paradigm for emerging parallel machines that employ hierarchical data- and task-parallelism. For example, large systems are built as distributed-shared memory architectures, where multi-core nodes access a local, coherent address space and many such nodes are interconnected in a non-coherent address space to form a high-performance system.

This paper studies the access patterns of shared data in UPC programs. By analyzing the access patterns of shared data in UPC we are able to make three major observations about the characteristics of programs written in a PGAS programming model: (i) there is strong evidence to support the development of automatic identification and automatic privatization of local shared data accesses; (ii) the ability for the programmer to specify how shared data is distributed among the executing threads can result in significant performance improvements; (iii) running UPC programs on a hybrid architecture will significantly increase the opportunities for automatic privatization of local shared data accesses.

Preview

Unable to display preview. Download preview PDF.

Similar content being viewed by others

References

Sobel - wikipedia, the free encyclopedia. http://en.wikipedia.org/wiki/Sobel

Amza, C., et al.: TreadMarks: Shared memory computing on networks of workstations. IEEE Computer 29(2), 18–28 (1996), citeseer.ist.psu.edu/amza96treadmarks.html

Bailey, D., et al.: The NAS parallel benchmarks. Technical Report RNR-94-007, NASA Ames Research Center (March 1994)

Bailey, D., et al.: The NAS parallel benchmarks 2.0. Technical Report NAS-95-020, NASA Ames Research Center (December 1995)

Barton, C., et al.: Shared memory programming for large scale machines. In: PLDI, June, pp. 108–117 (2006)

Berlin, K., et al.: Evaluating the impact of programming language features on the performance of parallel applications on cluster architectures. In: Rauchwerger, L. (ed.) LCPC 2003. LNCS, vol. 2958, Springer, Heidelberg (2004)

Bonachea, D.: GASNet specification, v1.1. Technical Report CSD-02-1207, U.C. Berkeley (November 2002)

Cantonnet, F., et al.: Performance monitoring and evaluation of a UPC implementation on a NUMA architecture. In: International Parallel and Distributed Processing Symposium (IPDPS), Nice, France, April, p. 274 (2003)

Cappello, F., Etiemble, D.: MPI versus MPI+OpenMP on the IBM SP for the NAS benchmarks. In: ACM/IEEE Supercomputing, November 2000, IEEE Computer Society Press, Los Alamitos (2000)

Cascaval, C., et al.: Performance and environment monitoring for continuous program optimization. IBM Journal of Research and Development 50(2/3) (2006)

Chen, W.-Y., et al.: A performance analysis of the Berkeley UPC compiler. In: Proceedings of the 17th Annual International Conference on Supercomputing (ICS’03), June (2003)

Coarfa, C., et al.: An evaluation of global address space languages: Co-Array Fortran and Unified Parallel C. In: Symposium on Principles and Practice of Parallel Programming (PPoPP), Chicago, IL, June, pp. 36–47 (2005)

UPC Consortium. UPC Language Specification, V1.2 (May 2005)

IBM Corporation. IBM XL UPC compilers (November 2005), http://www.alphaworks.ibm.com/tech/upccompiler

Costa, J.J., et al.: Running OpenMP applications efficiently on an everything-shared SDSM. Journal of Parallel and Distributed Computing 66, 647–658 (2005)

Dwarkadas, S., Cox, A.L., Zwaenepoel, W.: An integrated compile-time/run-time software distributed shared memory system. In: Architectural Support for Programming Languages and Operating Systems, Cambridge, MA, pp. 186–197 (1996)

El-Ghazawi, T., Cantonnet, F.: UPC performance and potential: a NPB experimental study. In: Proceedings of the 2002 ACM/IEEE conference on Supercomputing (SC’02), Baltimore, Maryland, USA, November 2002, pp. 1–26. IEEE Computer Society Press, Los Alamitos (2002)

Hoeflinger, J.P.: Extending OpenMP to clusters (2006), http://www.intel.com/cd/software/products/asmo-na/eng/compilers/285865.htm

Keleher, P.: Distributed Shared Memory Using Lazy Release Consistency. PhD thesis, Rice University (December 1994)

Numrich, R.W., Reid, J.: Co-array fortran for parallel programming. ACM SIGPLAN Fortran Forum 17(2), 1–31 (1998)

Smith, L., Bull, M.: Development of mixed mode mpi / openmp applications (Presented at Workshop on OpenMP Applications and Tools (WOMPAT 2000), San Diego, Calif., July 6-7, 2000). Scientific Programming 9(2-3), 83–98 (2001), citeseer.ist.psu.edu/article/smith00development.html

Wisniewski, R.W., et al.: Performance and environment monitoring for whole-system characterization and optimization. In: PAC2 - Power Performance equals Architecture x Circuits x Compilers, Yorktown Heights, NY, October 6-8, pp. 15–24 (2004)

Zhang, Z., Seidel, S.: Benchmark measurements of current UPC platforms. In: IPDPS, Denver, CO, April (2005)

Zhang, Z., Seidel, S.: A performance model for fine-grain accesses in UPC. In: IPDPS, Rhodes Island, Greece, April (2006)

Author information

Authors and Affiliations

Editor information

Rights and permissions

Copyright information

© 2007 Springer Berlin Heidelberg

About this paper

Cite this paper

Barton, C., Caşcaval, C., Amaral, J.N. (2007). A Characterization of Shared Data Access Patterns in UPC Programs. In: Almási, G., Caşcaval, C., Wu, P. (eds) Languages and Compilers for Parallel Computing. LCPC 2006. Lecture Notes in Computer Science, vol 4382. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-72521-3_9

Download citation

DOI: https://doi.org/10.1007/978-3-540-72521-3_9

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-72520-6

Online ISBN: 978-3-540-72521-3

eBook Packages: Computer ScienceComputer Science (R0)