Abstract

The classical perceptron algorithm is an elementary algorithm for solving a homogeneous linear inequality system Ax > 0, with many important applications in learning theory (e.g., [11,8]). A natural condition measure associated with this algorithm is the Euclidean width τ of the cone of feasible solutions, and the iteration complexity of the perceptron algorithm is bounded by 1/τ

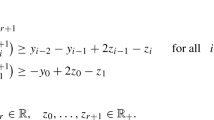

2. Dunagan and Vempala [5] have developed a re-scaled version of the perceptron algorithm with an improved complexity of O(nln (1/τ)) iterations (with high probability), which is theoretically efficient in τ, and in particular is polynomial-time in the bit-length model. We explore extensions of the concepts of these perceptron methods to the general homogeneous conic system  where K is a regular convex cone. We provide a conic extension of the re-scaled perceptron algorithm based on the notion of a deep-separation oracle of a cone, which essentially computes a certificate of strong separation. We give a general condition under which the re-scaled perceptron algorithm is theoretically efficient, i.e., polynomial-time; this includes the cases when K is the cross-product of half-spaces, second-order cones, and the positive semi-definite cone.

where K is a regular convex cone. We provide a conic extension of the re-scaled perceptron algorithm based on the notion of a deep-separation oracle of a cone, which essentially computes a certificate of strong separation. We give a general condition under which the re-scaled perceptron algorithm is theoretically efficient, i.e., polynomial-time; this includes the cases when K is the cross-product of half-spaces, second-order cones, and the positive semi-definite cone.
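For readers unfamiliar with the starting point, the classical perceptron iteration for Ax > 0 can be sketched as follows. This is a minimal illustration and not the re-scaled or conic algorithm of the paper; the function name `perceptron`, the row normalization, and the `max_iters` cap are assumptions made for the example.

```python
import math


def perceptron(A, max_iters=10_000):
    """Classical perceptron for the homogeneous system A x > 0.

    A is a list of rows (lists of floats). Returns (x, updates) where x
    satisfies a_i . x > 0 for every row, or (None, max_iters) if no such
    x is found within max_iters updates.
    """
    # Normalize each row so that every update step has unit length.
    rows = []
    for a in A:
        norm = math.sqrt(sum(v * v for v in a))
        if norm == 0.0:
            raise ValueError("zero row: A x > 0 cannot hold")
        rows.append([v / norm for v in a])

    x = [0.0] * len(rows[0])
    for k in range(max_iters + 1):
        # Find the first violated inequality a_i . x <= 0, if any.
        violated = next(
            (a for a in rows if sum(ai * xi for ai, xi in zip(a, x)) <= 0.0),
            None,
        )
        if violated is None:
            return x, k  # x is feasible after k updates
        if k < max_iters:
            # Perceptron update: add the violated (normalized) row to x.
            x = [xi + ai for xi, ai in zip(x, violated)]
    return None, max_iters


x, updates = perceptron([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print(x, updates)
```

On a feasible system the number of updates is what the 1/τ² bound in the abstract controls; on an infeasible system such as x₁ > 0, −x₁ > 0 the sketch simply stops at the cap and returns `None`.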

References

Belloni, A., Freund, R.M.: Characterization of second-order cone inclusion, and efficient projection. Technical Report OR-380-06, MIT Operations Research Center (2006)

Bertsimas, D., Vempala, S.: Solving convex programs by random walks. Journal of the ACM 51(4), 540–556 (2004)

Blum, A., Frieze, A., Kannan, R., Vempala, S.: A polynomial-time algorithm for learning noisy linear threshold functions. Algorithmica 22(1), 35–52 (1998)

Bylander, T.: Learning linear threshold functions in the presence of classification noise. In: Proceedings of the Seventh Annual Workshop on Computational Learning Theory, pp. 340–347 (1994)

Dunagan, J., Vempala, S.: A simple polynomial-time rescaling algorithm for solving linear programs. Mathematical Programming (to appear, 2007)

Freund, R.M., Vera, J.R.: Condition-based complexity of convex optimization in conic linear form via the ellipsoid algorithm. SIAM Journal on Optimization 10(1), 155–176 (1999)

Freund, R.M., Vera, J.R.: Some characterizations and properties of the distance to ill-posedness and the condition measure of a conic linear system. Mathematical Programming 86(2), 225–260 (1999)

Freund, Y., Schapire, R.E.: Large margin classification using the perceptron algorithm. Machine Learning 37(3), 297–336 (1999)

Karmarkar, N.: A new polynomial-time algorithm for linear programming. Combinatorica 4(4), 373–395 (1984)

Khachiyan, L.G.: A polynomial algorithm in linear programming. Soviet Math. Dokl. 20(1), 191–194 (1979)

Minsky, M., Papert, S.: Perceptrons: An introduction to computational geometry. MIT Press, Cambridge (1969)

Nesterov, Y., Nemirovskii, A.: Interior-point polynomial algorithms in convex programming. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (1993)

Novikoff, A.B.J.: On convergence proofs on perceptrons. In: Proceedings of the Symposium on the Mathematical Theory of Automata XII, pp. 615–622 (1962)

Pataki, G.: On the closedness of the linear image of a closed convex cone, Technical Report, University of North Carolina, TR-02-3 Department of Operations Research (1992)

Renegar, J.: Some perturbation theory for linear programming. Mathematical Programming 65(1), 73–91 (1994)

Renegar, J.: Linear programming, complexity theory, and elementary functional analysis. Mathematical Programming 70(3), 279–351 (1995)

Rosenblatt, F.: Principles of neurodynamics. Spartan Books, Washington, DC (1962)

Wolkowicz, H., Saigal, R., Vandenberghe, L.: Handbook of Semidefinite Programming. Kluwer Academic Publishers, Boston, MA (2000)

Copyright information

© 2007 Springer Berlin Heidelberg

About this paper

Cite this paper

Belloni, A., Freund, R.M., Vempala, S.S. (2007). An Efficient Re-scaled Perceptron Algorithm for Conic Systems. In: Bshouty, N.H., Gentile, C. (eds) Learning Theory. COLT 2007. Lecture Notes in Computer Science, vol 4539. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-72927-3_29

DOI: https://doi.org/10.1007/978-3-540-72927-3_29

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-72925-9

Online ISBN: 978-3-540-72927-3

eBook Packages: Computer Science, Computer Science (R0)