Abstract

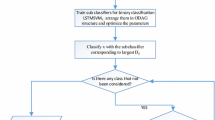

Directed acyclic graph support vector machines (DAGSVMs) have been shown to provide classification accuracy comparable to that of standard multiclass SVM extensions such as the Max Wins method. The algorithm arranges binary SVM classifiers as the internal nodes of a directed acyclic graph (DAG); each node is a classifier trained on the data of one pair of classes with its own kernel. The most popular method for choosing the kernel parameters is grid search: classifiers are trained with many different kernel parameter settings, but only one of them is needed at test time, which makes training time-consuming. In this paper we propose using separation indexes, derived from the inter-cluster distances in the feature spaces, to estimate the generalization ability of the classifiers. Computing such an index costs much less time than training the corresponding SVM classifier, so suitable kernel parameters can be chosen much faster. Experimental results show that the testing accuracy of the resulting DAGSVMs is competitive with that of the standard DAGSVMs, and that the training time can be significantly shortened.
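The abstract does not state the exact form of the separation index. As a minimal sketch, assuming a Gaussian (RBF) kernel with width parameter `gamma`, the Python code below computes one hypothetical index of this kind: a Fisher-style ratio of the squared distance between the two class means in feature space (one possible inter-cluster distance) to the within-class scatter, both obtained from kernel-matrix averages via the kernel trick. The names `rbf_kernel`, `separation_index`, `choose_gamma` and `choose_gammas_per_node` are illustrative and not taken from the paper. Each pair of classes, i.e. each DAG node, gets its own `gamma` from a grid, and no SVM is trained while scoring.

```python
import numpy as np
from itertools import combinations


def rbf_kernel(X, Y, gamma):
    """Gaussian kernel matrix K[i, j] = exp(-gamma * ||X[i] - Y[j]||^2)."""
    sq = (
        np.sum(X**2, axis=1)[:, None]
        + np.sum(Y**2, axis=1)[None, :]
        - 2.0 * X @ Y.T
    )
    # Clip tiny negative values caused by floating-point cancellation.
    return np.exp(-gamma * np.maximum(sq, 0.0))


def separation_index(A, B, gamma):
    """Hypothetical Fisher-style separation index in the RBF feature space.

    Between-class term: squared feature-space distance between class means,
        ||m_A - m_B||^2 = mean K(A,A) + mean K(B,B) - 2 mean K(A,B).
    Within-class term: mean squared distance of each point to its class mean,
        which for the RBF kernel (K(x, x) = 1) equals 1 - mean K(C,C).
    """
    k_aa = rbf_kernel(A, A, gamma).mean()
    k_bb = rbf_kernel(B, B, gamma).mean()
    k_ab = rbf_kernel(A, B, gamma).mean()
    between = k_aa + k_bb - 2.0 * k_ab
    within = (1.0 - k_aa) + (1.0 - k_bb)
    return between / max(within, 1e-12)


def choose_gamma(A, B, gammas):
    """Return the grid value with the largest index, plus all scores."""
    scores = [separation_index(A, B, g) for g in gammas]
    return gammas[int(np.argmax(scores))], scores


def choose_gammas_per_node(X, y, gammas):
    """Pick one gamma per pair of classes (one per DAG node)."""
    params = {}
    for a, b in combinations(np.unique(y).tolist(), 2):
        best, _ = choose_gamma(X[y == a], X[y == b], gammas)
        params[(a, b)] = best
    return params
```

For k classes this scores k(k-1)/2 pairs per grid value. Each score needs only kernel-matrix averages rather than a quadratic-programming solve. In this sketch, only the final classifier at each node would then be trained with the selected `gamma`.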




Copyright information

© 2007 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Wu, KP., Wang, SD. (2007). Choosing the Kernel Parameters for the Directed Acyclic Graph Support Vector Machines. In: Perner, P. (ed.) Machine Learning and Data Mining in Pattern Recognition. MLDM 2007. Lecture Notes in Computer Science, vol 4571. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-73499-4_21


DOI: https://doi.org/10.1007/978-3-540-73499-4_21

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-73498-7

Online ISBN: 978-3-540-73499-4

eBook Packages: Computer Science, Computer Science (R0)