Abstract

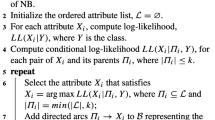

A Bayesian Network (BN) is a graphical representation of a multivariate joint probability distribution that can be induced from data. Inducing a BN is an NP-hard problem. Two main families of algorithms can be used to induce a BN from data: Conditional Independence (CI) based algorithms and Heuristic Search (HS) based algorithms. When a BN is induced for classification purposes (a Bayesian Classifier, BC), specific constraints can be imposed to increase computational efficiency. This paper proposes a new CI based algorithm, MarkovPC, to induce BCs from data. MarkovPC uses the Markov Blanket concept to impose constraints on, and thereby optimize, the traditional PC algorithm. Experiments performed with the ALARM BN, as well as with other UCI and artificial domains, revealed that MarkovPC tends to execute fewer comparisons than the traditional PC. The experiments also show that MarkovPC produces classification rates competitive with those of both PC and Naïve Bayes.
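For readers unfamiliar with the Markov Blanket concept mentioned above, the following minimal Python sketch computes the Markov blanket of a node (its parents, its children, and the other parents of its children) in a known directed acyclic graph. It only illustrates the concept; it is not the MarkovPC algorithm, whose details are not given in this abstract. The function name `markov_blanket`, the parent-set dictionary representation, and the toy graph are all assumptions made for illustration.

```python
from typing import Dict, Set


def markov_blanket(parents: Dict[str, Set[str]], target: str) -> Set[str]:
    """Return the Markov blanket of `target` in a DAG.

    `parents` maps every node to the set of its parents (hypothetical
    representation chosen for this sketch).
    """
    # Start with the parents of the target.
    blanket = set(parents.get(target, set()))
    # Children are the nodes that list the target among their parents.
    children = {node for node, ps in parents.items() if target in ps}
    blanket |= children
    # Spouses are the other parents of each child.
    for child in children:
        blanket |= parents[child]
    # The target itself is not part of its own blanket.
    blanket.discard(target)
    return blanket


# Hypothetical toy network: A -> Class -> C <- B, C -> D, B -> E.
toy_dag = {
    "A": set(),
    "B": set(),
    "Class": {"A"},
    "C": {"Class", "B"},
    "D": {"C"},
    "E": {"B"},
}

print(sorted(markov_blanket(toy_dag, "Class")))
```

In this toy graph the blanket of `Class` contains `A` (parent), `C` (child), and `B` (the other parent of `C`), while `D` and `E` fall outside it. Given its Markov blanket, the class variable is conditionally independent of all remaining variables, which is why restricting attention to the blanket can reduce work when the network is used only for classification.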

Copyright information

© 2007 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

de O. Galvão, S.D.C., Hruschka, E.R. (2007). A Markov Blanket Based Strategy to Optimize the Induction of Bayesian Classifiers When Using Conditional Independence Learning Algorithms. In: Song, I.Y., Eder, J., Nguyen, T.M. (eds) Data Warehousing and Knowledge Discovery. DaWaK 2007. Lecture Notes in Computer Science, vol 4654. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-74553-2_33

DOI: https://doi.org/10.1007/978-3-540-74553-2_33

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-74552-5

Online ISBN: 978-3-540-74553-2

eBook Packages: Computer Science, Computer Science (R0)