Summary

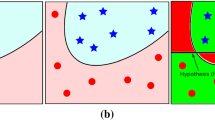

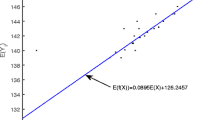

Support Vector Machines (SVMs) are among the most widely used techniques in machine learning. After an SVM algorithm processes the data and produces a classification, it is desirable to know how well this classification fits the data. There exist several measures of fit, the most widely used of which is kernel-target alignment. These measures, however, assume that the data are known exactly. In reality, whether the data points come from measurements or from expert estimates, they are only known with uncertainty. As a result, even if the classification fits the nominal data perfectly, the same classification can fit the actual values (which differ somewhat from the nominal ones) poorly. In this paper, we show how to take this uncertainty into account when estimating the quality of the resulting classification.
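To make the gap between nominal and actual fit concrete, here is a minimal Python sketch using only the standard library. It computes kernel-target alignment in the form given by Cristianini et al., A(K, yyᵀ) = ⟨K, yyᵀ⟩_F / √(⟨K, K⟩_F · ⟨yyᵀ, yyᵀ⟩_F), for a linear kernel. It then assumes that each coordinate is only known to lie in an interval [x − Δ, x + Δ] and evaluates the alignment at every corner of the resulting boxes. The helper names (`linear_kernel`, `frobenius`, `kernel_target_alignment`, `alignment_range_by_corners`) and the corner-enumeration strategy are illustrative assumptions, not the algorithm from this chapter. Enumeration is exponential in the number of interval coordinates, and in general it yields only an inner estimate of the true range.

```python
import itertools
import math


def linear_kernel(X):
    """Gram matrix K[i][j] = <x_i, x_j> for a list of feature vectors X."""
    return [[sum(a * b for a, b in zip(xi, xj)) for xj in X] for xi in X]


def frobenius(A, B):
    """Frobenius inner product <A, B>_F = sum over i, j of A[i][j] * B[i][j]."""
    return sum(a * b for row_a, row_b in zip(A, B) for a, b in zip(row_a, row_b))


def kernel_target_alignment(K, y):
    """Alignment of K with the ideal target kernel y y^T (labels y in {-1, +1})."""
    Y = [[yi * yj for yj in y] for yi in y]
    return frobenius(K, Y) / math.sqrt(frobenius(K, K) * frobenius(Y, Y))


def alignment_range_by_corners(X_nominal, delta, y):
    """Hypothetical helper: min and max linear-kernel alignment over all
    corners of the boxes [x - delta, x + delta].

    Brute force over 2**(number of coordinates) corners, so toy data only.
    The true extremes may lie strictly inside the boxes, so the result is an
    inner estimate of the range, not guaranteed bounds.
    """
    dim = len(X_nominal[0])
    centers = [v for x in X_nominal for v in x]   # flattened nominal values
    radii = [d for row in delta for d in row]     # matching half-widths
    values = []
    for signs in itertools.product((-1.0, 1.0), repeat=len(centers)):
        flat = [c + s * r for c, s, r in zip(centers, signs, radii)]
        X = [flat[i:i + dim] for i in range(0, len(flat), dim)]
        values.append(kernel_target_alignment(linear_kernel(X), y))
    return min(values), max(values)


if __name__ == "__main__":
    X = [[2.0], [-2.0]]   # nominal one-dimensional data points
    y = [1, -1]           # their class labels
    # Nominal alignment: exactly 1.0 (perfect fit).
    print(kernel_target_alignment(linear_kernel(X), y))
    # Corner range with half-width 0.5: min 16/17 (about 0.941), max 1.0.
    print(alignment_range_by_corners(X, [[0.5], [0.5]], y))
```

On this toy example the nominal data are perfectly aligned, yet moving each point by at most 0.5 can lower the alignment to 16/17 ≈ 0.941. This is the kind of discrepancy between nominal and actual fit that the abstract describes.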

Copyright information

© 2008 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Nguyen, C.H., Ho, T.B., Kreinovich, V. (2008). Estimating Quality of Support Vector Machines Learning under Probabilistic and Interval Uncertainty: Algorithms and Computational Complexity. In: Huynh, VN., Nakamori, Y., Ono, H., Lawry, J., Kreinovich, V., Nguyen, H.T. (eds) Interval / Probabilistic Uncertainty and Non-Classical Logics. Advances in Soft Computing, vol 46. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-77664-2_6

DOI: https://doi.org/10.1007/978-3-540-77664-2_6

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-77663-5

Online ISBN: 978-3-540-77664-2

eBook Packages: Engineering, Engineering (R0)