Abstract

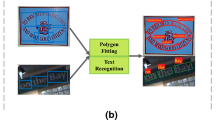

The increasing use of display units in avionics motivates the need for vision-based text recognition systems to assist humans. The system for generic displays proposed in this paper includes some of the usual text recognition steps, namely localization, extraction and enhancement, and optical character recognition (OCR). The proposal has been fully developed and tested on a multi-display simulator. The commercial OCR module from the Matrox Imaging Library has been used to validate the proposed segmentation of textual displays.
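The steps named in the abstract (localization, extraction and enhancement, then OCR) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the fixed brightness levels, and the bounding-box localization are assumptions chosen for illustration, and the OCR stage is a caller-supplied placeholder rather than the Matrox Imaging Library API.

```python
from typing import Callable, List, Tuple

Image = List[List[int]]          # grayscale rows, pixel values 0..255
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), end-exclusive


def localize(img: Image, min_level: int = 128) -> Box:
    """Localization (assumed rule): bounding box of pixels >= min_level."""
    rows = [r for r, row in enumerate(img) if any(p >= min_level for p in row)]
    cols = [c for c in range(len(img[0]))
            if any(row[c] >= min_level for row in img)]
    if not rows:
        raise ValueError("no candidate text pixels found")
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


def extract(img: Image, box: Box) -> Image:
    """Extraction: crop the localized region out of the frame."""
    top, left, bottom, right = box
    return [row[left:right] for row in img[top:bottom]]


def enhance(region: Image) -> Image:
    """Enhancement (assumed rule): linear contrast stretch to 0..255."""
    lo = min(min(row) for row in region)
    hi = max(max(row) for row in region)
    if hi == lo:  # flat region, nothing to stretch
        return [[0] * len(row) for row in region]
    return [[(p - lo) * 255 // (hi - lo) for p in row] for row in region]


def binarize(region: Image, threshold: int = 128) -> Image:
    """Fixed-threshold binarization: 1 for text pixels, 0 for background."""
    return [[1 if p >= threshold else 0 for p in row] for row in region]


def segment_display(img: Image, ocr: Callable[[Image], object]) -> object:
    """Run localization, extraction, enhancement, then hand off to OCR."""
    region = enhance(extract(img, localize(img)))
    return ocr(binarize(region))  # `ocr` is a hypothetical placeholder
```

Passing an identity function as `ocr` (for example `segment_display(frame, lambda b: b)`) returns the binarized region, which makes it possible to inspect the segmentation result before plugging in a real recognizer.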

Copyright information

© 2009 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Castillo, J.C., López, M.T., Fernández-Caballero, A. (2009). Vision-Based Text Segmentation System for Generic Display Units. In: Mira, J., Ferrández, J.M., Álvarez, J.R., de la Paz, F., Toledo, F.J. (eds) Bioinspired Applications in Artificial and Natural Computation. IWINAC 2009. Lecture Notes in Computer Science, vol 5602. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-02267-8_25

DOI: https://doi.org/10.1007/978-3-642-02267-8_25

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-02266-1

Online ISBN: 978-3-642-02267-8

eBook Packages: Computer Science, Computer Science (R0)