Abstract

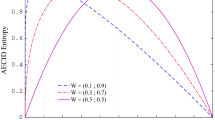

Binary decision trees based on univariate splits have traditionally used so-called impurity functions to search for the best node splits. Such functions rely on estimates of the class distributions. In this paper we introduce a new concept to binary tree design: instead of working with the class distributions of the data, we work directly with the distribution of the errors produced by the node splits. Concretely, we search for the best splits using a minimum entropy-of-error (MEE) strategy. This strategy has recently been applied with success in other areas (e.g. regression, clustering, blind source separation, neural network training). We show that MEE trees can produce good results, often with simpler trees, that they have interesting generalization properties, and that in the many experiments we performed they could be used without pruning.
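To make the idea of splitting on the error distribution concrete, here is a minimal sketch of a single-feature split search. It is not the authors' implementation, and the abstract does not give the exact error definition, so everything here is an assumption: targets and predictions are coded as 0/1, the error is e = t - y (values in {-1, 0, 1}), the entropy is the Shannon entropy of the empirical error distribution, and candidate thresholds are midpoints between consecutive distinct feature values. The names `error_entropy` and `best_mee_split` are hypothetical.

```python
import math
from collections import Counter


def error_entropy(targets, predictions):
    """Shannon entropy (in nats) of the empirical distribution of e = t - y.

    Assumption: labels are coded 0/1, so e takes values in {-1, 0, 1}.
    """
    errors = [t - y for t, y in zip(targets, predictions)]
    n = len(errors)
    counts = Counter(errors)
    # sum of p * log(1/p) over the observed error values
    return sum((c / n) * math.log(n / c) for c in counts.values())


def best_mee_split(x, t):
    """Search one feature for the threshold with minimum error entropy.

    Returns (entropy, threshold, left_label), where left_label is the
    class assigned to samples with x <= threshold. Returns None if the
    feature has fewer than two distinct values.
    """
    values = sorted(set(x))
    # Assumed candidate thresholds: midpoints between distinct values.
    thresholds = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = None
    for thr in thresholds:
        for left_label in (0, 1):  # try both label orientations
            y = [left_label if xi <= thr else 1 - left_label for xi in x]
            h = error_entropy(t, y)
            if best is None or h < best[0]:
                best = (h, thr, left_label)
    return best


if __name__ == "__main__":
    # Toy, perfectly separable data (illustrative only, not from the paper).
    print(best_mee_split([1, 2, 3, 4], [0, 0, 1, 1]))
```

Unlike an impurity function, this criterion never looks at the class proportions in the child nodes directly; it only looks at how the split's errors are distributed. A full tree builder would repeat this search over all features and recurse on the resulting partitions.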

Copyright information

© 2009 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

de Sá, J.P.M., Gama, J., Sebastião, R., Alexandre, L.A. (2009). Decision Trees Using the Minimum Entropy-of-Error Principle. In: Jiang, X., Petkov, N. (eds) Computer Analysis of Images and Patterns. CAIP 2009. Lecture Notes in Computer Science, vol 5702. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-03767-2_97

DOI: https://doi.org/10.1007/978-3-642-03767-2_97

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-03766-5

Online ISBN: 978-3-642-03767-2

eBook Packages: Computer Science, Computer Science (R0)