Abstract

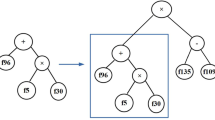

Existing learning and search algorithms can suffer from a learning bias when dealing with unbalanced data sets. This paper proposes a Multi-Objective Genetic Programming (MOGP) approach that evolves a Pareto front of classifiers along the optimal trade-off surface between minority and majority class accuracy for binary class imbalance problems. A major advantage of the MOGP approach is that, by explicitly incorporating the learning bias into the search algorithm, a set of well-performing classifiers can be evolved in a single experiment, whereas canonical (single-solution) Genetic Programming (GP) requires some objective preference to be built into the fitness function a priori. Our results show that a diverse set of solutions was found along the Pareto front, and that these solutions performed as well as or better than canonical GP on four class imbalance problems.
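
The trade-off described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not specify how the front is ranked or evolved, so the function names (`class_accuracies`, `dominates`, `pareto_front`) and the candidate values are hypothetical. The sketch only shows how two per-class accuracies can be treated as separate objectives and how non-dominated classifiers are picked out.

```python
from typing import List, Sequence, Tuple

# Assumption: each classifier is summarised by two objectives to maximise.
Objectives = Tuple[float, float]  # (minority accuracy, majority accuracy)


def class_accuracies(y_true: Sequence[int], y_pred: Sequence[int],
                     minority_label: int = 1) -> Objectives:
    """Return (minority accuracy, majority accuracy) for binary labels."""
    min_total = min_hit = maj_total = maj_hit = 0
    for t, p in zip(y_true, y_pred):
        if t == minority_label:
            min_total += 1
            min_hit += int(p == t)
        else:
            maj_total += 1
            maj_hit += int(p == t)
    return (min_hit / min_total if min_total else 0.0,
            maj_hit / maj_total if maj_total else 0.0)


def dominates(a: Objectives, b: Objectives) -> bool:
    """a dominates b if it is no worse on both objectives and better on one."""
    return (all(x >= y for x, y in zip(a, b))
            and any(x > y for x, y in zip(a, b)))


def pareto_front(points: List[Objectives]) -> List[Objectives]:
    """Keep the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical objective values for four candidate classifiers.
candidates = [(0.90, 0.60), (0.70, 0.85), (0.60, 0.80), (0.50, 0.95)]
front = pareto_front(candidates)
```

Here the candidate `(0.60, 0.80)` is dropped because `(0.70, 0.85)` is better on both classes; the remaining three each represent a different balance between minority and majority accuracy, which is the kind of solution set a single-objective fitness function would collapse to one point.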

Copyright information

© 2009 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Bhowan, U., Zhang, M., Johnston, M. (2009). Multi-Objective Genetic Programming for Classification with Unbalanced Data. In: Nicholson, A., Li, X. (eds) AI 2009: Advances in Artificial Intelligence. AI 2009. Lecture Notes in Computer Science, vol 5866. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-10439-8_38

DOI: https://doi.org/10.1007/978-3-642-10439-8_38

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-10438-1

Online ISBN: 978-3-642-10439-8

eBook Packages: Computer Science, Computer Science (R0)