Abstract

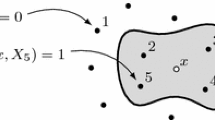

A major challenge in multi-agent reinforcement learning remains dealing with the large state spaces typically associated with realistic multi-agent systems. As the state space grows, agent policies become increasingly complex and learning slows down. The presence of possibly redundant information is one of the causes of this issue. Current single-agent techniques are already very capable of learning optimal policies in large unknown environments. When multiple agents are present, however, the state space grows exponentially in the number of agents. A solution to this problem lies in the use of Generalized Learning Automata (GLA). In this chapter we first demonstrate how GLA can help take the correct actions in large unknown environments. Second, we introduce a general framework for multi-agent learning, in which learning happens on two separate layers and agents learn when to observe each other. Within this framework we introduce a new algorithm, called 2observe, which uses a GLA approach to distinguish between high-risk states, where the agents have to take each other's presence into account, and low-risk states, where they can act independently. Finally, we apply this algorithm to a gridworld problem, chosen for its similarities to some real-world problems such as autonomous robot control.
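As a rough illustration of the exponential growth described above, the following sketch counts states in a hypothetical 5×5 gridworld. It assumes that an agent's local state is simply its grid position and that several agents may occupy the same cell; the function names and the grid size are illustrative assumptions and do not come from the chapter.

```python
def local_state_count(width: int, height: int) -> int:
    """Number of positions a single agent can occupy on the grid."""
    return width * height


def joint_state_count(width: int, height: int, n_agents: int) -> int:
    """Number of joint states when every agent observes all agent positions.

    Each agent can be in any cell independently of the others, so the
    count is the local state count raised to the number of agents.
    """
    return local_state_count(width, height) ** n_agents


def independent_state_count(width: int, height: int, n_agents: int) -> int:
    """Total number of states stored when each agent tracks only its own position."""
    return n_agents * local_state_count(width, height)


if __name__ == "__main__":
    # Compare joint and independent representations for 1 to 4 agents.
    for n in range(1, 5):
        joint = joint_state_count(5, 5, n)
        independent = independent_state_count(5, 5, n)
        print(f"agents={n} joint={joint} independent={independent}")
```

Under these assumptions, two agents already require 625 joint states against 50 independent ones, which suggests why restricting joint observation to the states where it matters can pay off.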

© 2010 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

De Hauwere, YM., Vrancx, P., Nowé, A. (2010). Multi-Agent Systems and Large State Spaces. In: Håkansson, A., Hartung, R., Nguyen, N.T. (eds) Agent and Multi-agent Technology for Internet and Enterprise Systems. Studies in Computational Intelligence, vol 289. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-13526-2_9

DOI: https://doi.org/10.1007/978-3-642-13526-2_9

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-13525-5

Online ISBN: 978-3-642-13526-2

eBook Packages: Engineering, Engineering (R0)