Abstract

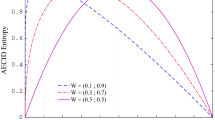

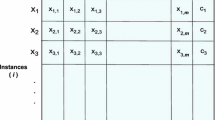

When machine learning is used to solve real-world problems, the class distribution of the training set matters, not only in highly unbalanced data sets but in every data set. Weiss and Provost suggested that each domain has an optimal class distribution to be used for training. The aim of this work was to test the validity of this hypothesis in the context of decision tree learners. To this end, we found the optimal class distribution for 30 databases and two decision tree learners, C4.5 and the Consolidated Tree Construction (CTC) algorithm, considering both pruned and unpruned trees and using two measures of discriminating capacity: AUC and error. The results confirmed that changing the class distribution of the training samples improves the performance (AUC and error) of the classifiers. The experiments also showed that there is an optimal class distribution for each database and that this distribution depends on the learning algorithm, on whether the trees are pruned, and on the evaluation criterion. Moreover, the CTC algorithm combined with samples at the optimal class distribution yielded more accurate classifiers than any of the C4.5 options and than CTC with the original distribution, with statistically significant differences.
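The core procedure described above, training on subsamples with different class distributions and keeping the distribution that scores best on AUC or error, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: training sets of a fixed size are drawn with different minority-class percentages (in the spirit of Weiss and Provost), and a hypothetical one-threshold stump (`train_stump`) stands in for C4.5 or CTC, neither of which is reproduced here. All function names are illustrative.

```python
import random
from typing import Callable, Dict, Iterable, List, Sequence, Tuple

Example = Tuple[float, int]  # (scalar feature, label); label 1 = minority class


def resample_to_distribution(examples: Sequence[Example], minority_fraction: float,
                             size: int, seed: int = 0) -> List[Example]:
    """Draw `size` examples without replacement so that a share
    `minority_fraction` of them has label 1 and the rest label 0."""
    rng = random.Random(seed)
    pos = [e for e in examples if e[1] == 1]
    neg = [e for e in examples if e[1] == 0]
    n_pos = round(size * minority_fraction)
    n_neg = size - n_pos
    if n_pos > len(pos) or n_neg > len(neg):
        raise ValueError("not enough examples of one class for this distribution")
    sample = rng.sample(pos, n_pos) + rng.sample(neg, n_neg)
    rng.shuffle(sample)
    return sample


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as the probability that a random positive scores above a random
    negative (ties count 1/2). Quadratic in size, which is fine for a sketch."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def error_rate(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of misclassified examples."""
    return sum(p != y for p, y in zip(predictions, labels)) / len(labels)


def train_stump(sample: Sequence[Example]) -> Callable[[float], float]:
    """Hypothetical toy learner standing in for C4.5/CTC: a single threshold
    chosen to minimise training error. Returns a scorer that outputs the
    proportion of label-1 training examples in the leaf an input falls into."""
    best = None
    for t in sorted({x for x, _ in sample}):
        left = [y for x, y in sample if x <= t]   # never empty: t is a sample value
        right = [y for x, y in sample if x > t]
        # errors made by predicting the majority label in each leaf
        err = min(left.count(0), left.count(1)) + min(right.count(0), right.count(1))
        if best is None or err < best[0]:
            best = (err, t, left, right)
    _, t, left, right = best
    p_left = sum(left) / len(left)
    p_right = sum(right) / len(right) if right else 0.0
    return lambda x: p_left if x <= t else p_right


def best_distribution(evaluate: Callable[[float], float],
                      fractions: Iterable[float]) -> Tuple[float, Dict[float, float]]:
    """Score every candidate minority fraction and return the best one."""
    scores = {f: evaluate(f) for f in fractions}
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    rng = random.Random(42)
    # Synthetic imbalanced data: 10% positives whose feature is shifted upwards.
    data = [(rng.gauss(1.0 if y else 0.0, 1.0), y) for y in [1] * 100 + [0] * 900]
    train, valid = data[::2], data[1::2]

    def evaluate(fraction: float) -> float:
        model = train_stump(resample_to_distribution(train, fraction, size=100, seed=1))
        return auc([model(x) for x, _ in valid], [y for _, y in valid])

    best, scores = best_distribution(evaluate, [0.1, 0.2, 0.3, 0.4, 0.5])
    print("AUC per minority fraction:", scores)
    print("best minority fraction:", best)
```

To mirror the setting in the abstract, `train_stump` would be replaced by a C4.5 or CTC learner (pruned or unpruned), and `evaluate` could return `-error_rate(...)` instead of the AUC. Because the abstract reports that the optimum depends on the learner, on pruning, and on the criterion, the search would be repeated for each such combination.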


References

Asuncion, A., Newman, D.J.: UCI Machine Learning Repository. University of California, School of Information and Computer Science, Irvine (2007), http://www.ics.uci.edu/~mlearn/MLRepository.html

Chawla, N.V., Bowyer, K.W., Kegelmeyer, W.P.: SMOTE: Synthetic Minority Over-sampling Technique. Journal of Artificial Intelligence Research 16, 321–357 (2002)

Chawla, N.V.: C4.5 and Imbalanced Data sets: Investigating the effect of sampling method, probabilistic estimate, and decision tree structure. In: Proc. of the Workshop on Learning from Imbalanced Data Sets, ICML, Washington DC (2003)

Demšar, J.: Statistical Comparisons of Classifiers over Multiple Data Sets. Journal of Machine Learning Research 7, 1–30 (2006)

Drummond, C., Holte, R.C.: Exploiting the Cost (In)sensitivity of Decision Tree Splitting Criteria. In: Proc. of the 17th Int. Conf. on Machine Learning, pp. 239–246 (2000)

Estabrooks, A., Jo, T.J., Japkowicz, N.: A Multiple Resampling Method for Learning from Imbalanced Data Sets. Computational Intelligence 20(1), 18–36 (2004)

García, S., Herrera, F.: An Extension on Statistical Comparisons of Classifiers over Multiple Data Sets for all Pairwise Comparisons. Journal of Machine Learning Research 9, 2677–2694 (2008)

Japkowicz, N., Stephen, S.: The Class Imbalance Problem: A Systematic Study. Intelligent Data Analysis Journal 6(5) (2002)

Kennedy, R.L., Lee, Y., Van Roy, B., Reed, C.D., Lippmann, R.P.: Solving Data Mining Problems through Pattern Recognition. Prentice-Hall, Englewood Cliffs (1998)

Ling, C.X., Huang, J., Zhang, H.: AUC: a better measure than accuracy in comparing learning algorithms. In: Xiang, Y., Chaib-draa, B. (eds.) Canadian AI 2003. LNCS (LNAI), vol. 2671, pp. 329–341. Springer, Heidelberg (2003)

Marrocco, C., Duin, R.P.W., Tortorella, F.: Maximizing the area under the ROC curve by pairwise feature combination. Pattern Recognition 41(6), 1961–1974 (2008)

Orriols-Puig, A., Bernadó-Mansilla, E.: Evolutionary rule-based systems for imbalanced data sets. Soft Computing 13, 213–225 (2009)

Pérez, J.M., Muguerza, J., Arbelaitz, O., Gurrutxaga, I.: A New Algorithm to Build Consolidated Trees: Study of the Error Rate and Steadiness. In: Advances in Soft Computing, Proc. of the International Intelligent Information Processing and Web Mining Conference (IIS: IIPWM’04), Zakopane, Poland, pp. 79–88 (2004)

Pérez, J.M., Muguerza, J., Arbelaitz, O., Gurrutxaga, I., Martín, J.I.: Combining multiple class distribution modified subsamples in a single tree. Pattern Recognition Letters 28(4), 414–422 (2007)

Quinlan, J.R.: C4.5: Programs for Machine Learning. Morgan Kaufmann, San Mateo (1993)

Weiss, G.M., Provost, F.: Learning when Training Data are Costly: The Effect of Class Distribution on Tree Induction. Journal of Artificial Intelligence Research 19, 315–354 (2003)

Xu, L., Krzyzak, A., Suen, C.Y.: Methods of Combining Multiple Classifiers and Their Applications to Handwriting Recognition. IEEE Transactions on Systems, Man and Cybernetics SMC-22(3), 418–435 (1992)

Copyright information

© 2010 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Albisua, I. et al. (2010). Obtaining Optimal Class Distribution for Decision Trees: Comparative Analysis of CTC and C4.5. In: Meseguer, P., Mandow, L., Gasca, R.M. (eds) Current Topics in Artificial Intelligence. CAEPIA 2009. Lecture Notes in Computer Science, vol 5988. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-14264-2_11

DOI: https://doi.org/10.1007/978-3-642-14264-2_11

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-14263-5

Online ISBN: 978-3-642-14264-2

eBook Packages: Computer Science, Computer Science (R0)