Abstract

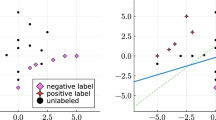

The classic minimum enclosing ball (MEB) method models the characteristics of each class for classification by extracting core vectors through solving a (1 + ε)-approximation problem. In this paper, we develop a new MEB system, called group MEB (g-MEB), that learns the core vector set in a group manner. The g-MEB factorizes class characteristics in three ways: it reduces sparseness in the MEB by decomposing the data space according to data distribution density, it discriminates core vectors on class interaction hyperplanes, and it enables outlier detection to reduce the effect of noise. Experimental results show that the factorized core set from g-MEB often delivers noticeably higher classification accuracy than the classic MEB.
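To make the (1 + ε)-approximation idea concrete, the following is a minimal sketch of a well-known farthest-point iteration for approximating a minimum enclosing ball (the simple scheme usually attributed to Bădoiu and Clarkson). It is an illustration only and is not claimed to be the procedure used for g-MEB or for the classic MEB baseline in this paper. The function name `approx_meb`, its parameters, and the toy data are assumptions introduced here.

```python
import math


def approx_meb(points, eps=0.1):
    """Approximate the minimum enclosing ball of `points`.

    Illustrative sketch (hypothetical helper, not the paper's method).
    Returns (center, radius, core_indices), where core_indices are the
    indices of the points that were picked as farthest at some step.
    """
    center = list(points[0])          # start at an arbitrary input point
    core = {0}
    iterations = math.ceil(1.0 / eps ** 2)
    for i in range(1, iterations + 1):
        # Find the point farthest from the current center.
        far = max(range(len(points)),
                  key=lambda j: math.dist(center, points[j]))
        core.add(far)
        # Move the center a shrinking fraction of the way toward it.
        step = 1.0 / (i + 1)
        center = [c + step * (p - c) for c, p in zip(center, points[far])]
    # The radius is chosen so that every point is enclosed.
    radius = max(math.dist(center, p) for p in points)
    return center, radius, sorted(core)


if __name__ == "__main__":
    # Toy data: corners of a square; the exact MEB has radius sqrt(2).
    pts = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0)]
    c, r, core = approx_meb(pts, eps=0.05)
    print("center:", c, "radius:", r, "core indices:", core)
```

After about 1/ε² steps the returned radius is at most (1 + ε) times the optimal radius, and the points selected along the way play the role of a core set: a small subset that determines the ball.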

© 2010 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Chen, Y., Pang, S., Kasabov, N. (2010). Factorizing Class Characteristics via Group MEBs Construction. In: Wong, K.W., Mendis, B.S.U., Bouzerdoum, A. (eds) Neural Information Processing. Models and Applications. ICONIP 2010. Lecture Notes in Computer Science, vol 6444. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-17534-3_35

DOI: https://doi.org/10.1007/978-3-642-17534-3_35

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-17533-6

Online ISBN: 978-3-642-17534-3

eBook Packages: Computer Science, Computer Science (R0)