Abstract

Data mining offers hundreds of algorithms. Some transform data, some build classifiers, others perform prediction, and so on. Nobody knows all of these algorithms well, and nobody can know all the arcana of their behavior in every possible application. How, then, can we find the best combination of transformations and final machine to solve a given problem?

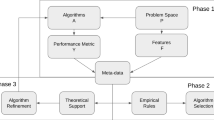

The solution is to use configurable and efficient meta-learning to solve data mining problems. Below, a general and flexible meta-learning system is presented. It can be used to solve different computational intelligence problems based on learning from data.

The main ideas of our meta-learning algorithms lie in a complexity-controlled loop that searches for the most adequate models, and in a special functional specification of search spaces (the meta-learning spaces) combined with a flexible way of defining the goal of the meta-search.
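To make the idea of a complexity-controlled search loop more concrete, here is a minimal sketch. It is not the chapter's algorithm: the `Candidate` class, the `meta_search` function, the numeric complexity estimates, the budget, and the toy data are all hypothetical names and values chosen for illustration. The sketch only assumes that each candidate machine configuration comes with a complexity estimate, that cheaper candidates are tested first, and that the goal of the search is a user-supplied scoring function.

```python
import heapq
from dataclasses import dataclass, field
from typing import Callable, Iterable, Optional, Tuple

Model = Callable[[float], int]


@dataclass(order=True)
class Candidate:
    """A hypothetical machine configuration with an assumed complexity estimate."""
    complexity: float
    name: str = field(compare=False)
    build: Callable[[], Model] = field(compare=False)


def meta_search(
    candidates: Iterable[Candidate],
    goal: Callable[[Model], float],
    budget: float,
) -> Optional[Tuple[float, str]]:
    """Test candidates in order of increasing complexity until the budget is spent.

    Returns (best score, candidate name), or None if nothing fits the budget.
    """
    queue = list(candidates)
    heapq.heapify(queue)  # cheapest candidate is popped first
    spent = 0.0
    best: Optional[Tuple[float, str]] = None
    while queue:
        cand = heapq.heappop(queue)
        if spent + cand.complexity > budget:
            break  # every remaining candidate is at least as costly
        spent += cand.complexity
        score = goal(cand.build())
        if best is None or score > best[0]:
            best = (score, cand.name)
    return best


# Toy one-dimensional data set: (feature value, class label).
DATA = [(0.1, 0), (0.4, 0), (0.6, 1), (0.9, 1)]


def accuracy(model: Model) -> float:
    """Illustrative goal function: fraction of correctly labelled points."""
    return sum(model(x) == y for x, y in DATA) / len(DATA)


def threshold(t: float) -> Callable[[], Model]:
    """Factory for a trivial classifier that predicts 1 when x > t."""
    return lambda: (lambda x: int(x > t))


# A hand-written stand-in for a search space; a real system would generate it.
SPACE = [
    Candidate(1.0, "always-0", lambda: (lambda x: 0)),
    Candidate(2.0, "threshold-0.2", threshold(0.2)),
    Candidate(2.0, "threshold-0.5", threshold(0.5)),
    Candidate(50.0, "expensive", threshold(0.5)),
]

result = meta_search(SPACE, accuracy, budget=10.0)
print(result)
```

In this toy run the "expensive" candidate is never evaluated because it exceeds the remaining budget. Swapping `accuracy` for another scoring function changes the goal of the search without touching the loop, which is the separation the paragraph above describes.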

© 2011 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Jankowski, N., Grąbczewski, K. (2011). Universal Meta-Learning Architecture and Algorithms. In: Jankowski, N., Duch, W., Grąbczewski, K. (eds) Meta-Learning in Computational Intelligence. Studies in Computational Intelligence, vol 358. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-20980-2_1

DOI: https://doi.org/10.1007/978-3-642-20980-2_1

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-20979-6

Online ISBN: 978-3-642-20980-2

eBook Packages: Engineering, Engineering (R0)