Abstract

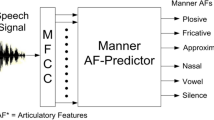

Speech sounds can be characterized by articulatory features. Articulatory features are typically estimated using a set of multilayer perceptrons (MLPs), i.e., a separate MLP is trained for each articulatory feature. In this paper, we investigate a multitask learning (MTL) approach for the joint estimation of articulatory features, with and without phoneme classification as a subtask. Our studies show that an MTL MLP can estimate articulatory features compactly and efficiently by learning the inter-feature dependencies through a common hidden layer representation. Furthermore, adding phoneme classification as a subtask while estimating articulatory features improves both articulatory feature estimation and phoneme recognition. On the TIMIT phoneme recognition task, articulatory feature posterior probabilities obtained by the MTL MLP achieve a phoneme recognition accuracy of 73.2%, while the phoneme posterior probabilities achieve an accuracy of 74.0%.
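The shared-hidden-layer idea can be illustrated with a minimal sketch. It is not the configuration used in the paper: the layer sizes, the task names (`manner`, `place`, `phoneme`), the tanh hidden units, and the equally weighted sum of per-task cross-entropies are all assumptions chosen for illustration. The sketch shows only the forward pass and the joint loss. Every task reads the same hidden representation and has its own softmax output layer.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def affine(weights, bias, inputs):
    """Compute W x + b for a weight matrix stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]


def init_layer(rng, n_out, n_in):
    """Small random weights and zero biases (illustrative initialisation)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


class MultitaskMLP:
    """One hidden layer shared by all tasks, one softmax head per task."""

    def __init__(self, n_in, n_hidden, task_sizes, seed=0):
        rng = random.Random(seed)
        self.hidden = init_layer(rng, n_hidden, n_in)
        # Hypothetical task names map to their number of output classes.
        self.heads = {name: init_layer(rng, size, n_hidden)
                      for name, size in task_sizes.items()}

    def forward(self, features):
        """Return a dict of per-task posterior distributions."""
        weights, bias = self.hidden
        shared = [math.tanh(a) for a in affine(weights, bias, features)]
        return {name: softmax(affine(w, b, shared))
                for name, (w, b) in self.heads.items()}

    def joint_loss(self, features, targets):
        """Sum of per-task cross-entropies (equal weighting is an assumption)."""
        posteriors = self.forward(features)
        return sum(-math.log(posteriors[name][targets[name]])
                   for name in targets)


# Toy dimensions, chosen only to keep the example small.
model = MultitaskMLP(n_in=4, n_hidden=5,
                     task_sizes={"manner": 3, "place": 4, "phoneme": 6})
frame = [0.2, -0.1, 0.4, 0.0]
posteriors = model.forward(frame)
loss = model.joint_loss(frame, {"manner": 1, "place": 0, "phoneme": 5})
```

Dropping the `phoneme` entry from `task_sizes` gives the variant without phoneme classification as a subtask. Training a separate MLP per articulatory feature would instead correspond to one `MultitaskMLP` per task, each with its own hidden layer.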

© 2011 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Rasipuram, R., Magimai-Doss, M. (2011). Improving Articulatory Feature and Phoneme Recognition Using Multitask Learning. In: Honkela, T., Duch, W., Girolami, M., Kaski, S. (eds) Artificial Neural Networks and Machine Learning – ICANN 2011. ICANN 2011. Lecture Notes in Computer Science, vol 6791. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-21735-7_37

DOI: https://doi.org/10.1007/978-3-642-21735-7_37

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-21734-0

Online ISBN: 978-3-642-21735-7

eBook Packages: Computer Science (R0)