Abstract

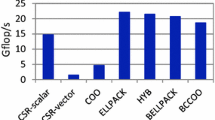

Sparse matrix-vector multiplication is one of the most frequently used operations in scientific and engineering computing. Although various storage schemes for sparse matrices have been proposed, the optimal storage scheme depends on the matrix being stored. In this paper, we propose an auto-selecting algorithm for sparse matrix-vector multiplication on GPUs that automatically selects the optimal storage scheme. We evaluated our algorithm using a solver for systems of linear equations and found that it was effective for many sparse matrices.
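To make the idea of selecting a storage scheme per matrix concrete, the following is a minimal CPU-side sketch in Python. It is not the algorithm of this paper: the abstract does not state the selection criterion, so the rule used here (choose ELL when zero-padding overhead is small, otherwise CSR) is an assumed heuristic, and the names `select_format`, `max_padding_ratio`, and `spmv_auto` are hypothetical. CSR and ELL are two commonly used sparse storage schemes; a GPU implementation would replace the loops with kernels.

```python
def to_csr(dense):
    """Convert a dense list-of-lists matrix to CSR (values, col_idx, row_ptr)."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, a in enumerate(row):
            if a != 0:
                values.append(a)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def csr_to_ell(csr):
    """Convert CSR to ELL: every row is padded with zeros to the longest row."""
    values, col_idx, row_ptr = csr
    n = len(row_ptr) - 1
    width = max((row_ptr[i + 1] - row_ptr[i] for i in range(n)), default=0)
    ell_vals = [[0.0] * width for _ in range(n)]
    ell_cols = [[0] * width for _ in range(n)]  # padding points at column 0
    for i in range(n):
        for slot, k in enumerate(range(row_ptr[i], row_ptr[i + 1])):
            ell_vals[i][slot] = values[k]
            ell_cols[i][slot] = col_idx[k]
    return ell_vals, ell_cols


def spmv_csr(csr, x):
    """y = A x with A stored in CSR."""
    values, col_idx, row_ptr = csr
    return [
        sum(values[k] * x[col_idx[k]] for k in range(row_ptr[i], row_ptr[i + 1]))
        for i in range(len(row_ptr) - 1)
    ]


def spmv_ell(ell, x):
    """y = A x with A stored in ELL; padded entries contribute zero."""
    ell_vals, ell_cols = ell
    return [
        sum(v * x[c] for v, c in zip(vals, cols))
        for vals, cols in zip(ell_vals, ell_cols)
    ]


def select_format(csr, max_padding_ratio=1.5):
    """Assumed heuristic (not from the paper): pick ELL if padding is cheap."""
    _, _, row_ptr = csr
    lengths = [row_ptr[i + 1] - row_ptr[i] for i in range(len(row_ptr) - 1)]
    nnz = row_ptr[-1]
    if nnz == 0:
        return "csr"
    padded_size = max(lengths) * len(lengths)
    return "ell" if padded_size / nnz <= max_padding_ratio else "csr"


def spmv_auto(dense, x):
    """Select a storage scheme for the matrix, then multiply in that scheme."""
    csr = to_csr(dense)
    fmt = select_format(csr)
    y = spmv_ell(csr_to_ell(csr), x) if fmt == "ell" else spmv_csr(csr, x)
    return fmt, y
```

Under this assumed rule, a matrix whose rows have similar lengths (for example, a tridiagonal matrix) is routed to ELL, while a matrix with one long row and many short rows is kept in CSR to avoid storing mostly padding. In an iterative solver the selection cost is paid once and amortized over the many multiplications with the same matrix.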




Copyright information

© 2011 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Kubota, Y., Takahashi, D. (2011). Optimization of Sparse Matrix-Vector Multiplication by Auto Selecting Storage Schemes on GPU. In: Murgante, B., Gervasi, O., Iglesias, A., Taniar, D., Apduhan, B.O. (eds) Computational Science and Its Applications - ICCSA 2011. ICCSA 2011. Lecture Notes in Computer Science, vol 6783. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-21887-3_42


DOI: https://doi.org/10.1007/978-3-642-21887-3_42

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-21886-6

Online ISBN: 978-3-642-21887-3

eBook Packages: Computer Science, Computer Science (R0)