Abstract

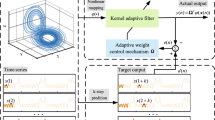

In this paper, inspired by Multiscale Geometric Analysis (MGA), a Sparse Ridgelet Kernel Regressor (SRKR) is constructed by combining ridgelet theory with the kernel trick. Considering the advantages of sequential learning over batch learning, we apply the kernel method in an online setting, using a sequential extreme learning scheme to predict nonlinear time series successively. Because its ridgelet kernels are non-separable across dimensions, SRKR can process high-dimensional data more efficiently. The online learning algorithm, named Online Sequential Extreme Learning Algorithm (OS-ELA), is employed to rapidly produce a sequence of estimates. OS-ELA learns the training data one by one or chunk by chunk (with fixed or varying chunk size) and discards each example as soon as it has been trained on, which keeps memory bounded during online learning. An evolution scheme is also incorporated to obtain a 'good' sparse regressor. Experiments are conducted on several nonlinear time-series prediction problems in which the examples become available one by one. Comparisons with counterpart methods show that the proposed approach is more efficient and more accurate.
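The learn-then-discard behaviour described above can be illustrated with a minimal sketch. It is not the authors' OS-ELA: it assumes randomly drawn, fixed ridge nodes with a Mexican-hat profile, a ridge penalty `lam`, and a standard recursive least-squares update for the output weights, and it omits the sparsification and evolution steps. All names (`make_ridge_nodes`, `SequentialRidgeRegressor`, and so on) are hypothetical, and only the Python standard library is used.

```python
import math
import random


def mexican_hat(z):
    """Wavelet profile applied along each ridge direction (an assumed choice)."""
    return (1.0 - z * z) * math.exp(-0.5 * z * z)


def make_ridge_nodes(dim, n_nodes, seed=0):
    """Draw random (direction, scale, shift) triples; they stay fixed afterwards."""
    rng = random.Random(seed)
    nodes = []
    for _ in range(n_nodes):
        u = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(c * c for c in u)) or 1.0
        u = [c / norm for c in u]  # unit-length direction
        nodes.append((u, rng.uniform(0.5, 2.0), rng.uniform(-1.0, 1.0)))
    return nodes


def features(x, nodes):
    """Ridge features psi((u . x - b) / a), one value per node."""
    return [mexican_hat((sum(ui * xi for ui, xi in zip(u, x)) - b) / a)
            for u, a, b in nodes]


class SequentialRidgeRegressor:
    """Recursive least squares over fixed ridge features (illustrative only)."""

    def __init__(self, nodes, lam=1.0):
        self.nodes = nodes
        n = len(nodes)
        self.beta = [0.0] * n
        # P tracks (H^T H + lam * I)^-1; with no data seen yet it is I / lam.
        self.P = [[(1.0 / lam if i == j else 0.0) for j in range(n)]
                  for i in range(n)]

    def predict(self, x):
        h = features(x, self.nodes)
        return sum(b * hi for b, hi in zip(self.beta, h))

    def update(self, x, y):
        """Absorb one example; the caller is free to discard (x, y) afterwards."""
        h = features(x, self.nodes)
        Ph = [sum(pij * hj for pij, hj in zip(row, h)) for row in self.P]
        denom = 1.0 + sum(hi * phi for hi, phi in zip(h, Ph))
        gain = [v / denom for v in Ph]
        err = y - sum(b * hi for b, hi in zip(self.beta, h))
        self.beta = [b + g * err for b, g in zip(self.beta, gain)]
        # Sherman-Morrison rank-one update: P <- P - (P h)(P h)^T / denom
        n = len(h)
        self.P = [[self.P[i][j] - gain[i] * Ph[j] for j in range(n)]
                  for i in range(n)]


# Usage: one-step-ahead prediction on a toy series, one example at a time.
series = [math.sin(0.3 * t) for t in range(120)]
model = SequentialRidgeRegressor(make_ridge_nodes(dim=2, n_nodes=6))
for t in range(2, len(series)):
    x, y = series[t - 2:t], series[t]
    y_hat = model.predict(x)  # predict before the true value is revealed
    model.update(x, y)        # then learn from it; nothing is stored
```

Only `beta` and the square matrix `P` persist between updates, so memory depends on the number of nodes rather than on the number of examples seen. After any number of updates, `beta` matches the ridge-regularised batch least-squares solution over all examples processed so far, which is why past data need not be kept.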

This work is supported by the National Science Foundation of China under grant nos. 61072108, 60971112, and 60601029, and by the Basic Science Research Fund of Xidian University under grant no. JY10000902041.

Copyright information

© 2012 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Yang, S., Zuo, D., Wang, M., Jiao, L. (2012). Online Sequential Extreme Learning of Sparse Ridgelet Kernel Regressor for Nonlinear Time-Series Prediction. In: Zhang, Y., Zhou, ZH., Zhang, C., Li, Y. (eds) Intelligent Science and Intelligent Data Engineering. IScIDE 2011. Lecture Notes in Computer Science, vol 7202. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-31919-8_3

DOI: https://doi.org/10.1007/978-3-642-31919-8_3

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-31918-1

Online ISBN: 978-3-642-31919-8

eBook Packages: Computer Science, Computer Science (R0)