Abstract

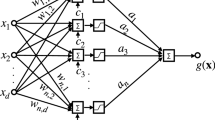

Theoretical results on the approximation of multivariable functions by feedforward neural networks are surveyed. Some proofs of the universal approximation capabilities of networks with perceptrons and radial units are sketched. Major tools for estimating the rates at which approximation errors decrease with increasing model complexity are proven. Properties of best approximation are discussed. Recent results on the dependence of model complexity on input dimension are presented, and some cases in which multivariable functions can be tractably approximated are described.
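The two network types named above compute linear combinations of n hidden units, and n is the model complexity whose effect on the error the chapter studies. A minimal sketch follows, assuming the usual one-hidden-layer forms f_n(x) = Σ c_i σ(v_i·x + b_i) for perceptrons and f_n(x) = Σ c_i exp(-b_i ||x - v_i||²) for Gaussian radial units. The logistic sigmoid as σ, the Gaussian as the radial function, and the function names are illustrative assumptions, not the chapter's own code.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def _dot(u: Vector, w: Vector) -> float:
    # Inner product of two equal-length vectors.
    if len(u) != len(w):
        raise ValueError("dimension mismatch")
    return sum(a * b for a, b in zip(u, w))


def logistic(t: float) -> float:
    # Logistic sigmoid: an assumed choice of perceptron activation.
    return 1.0 / (1.0 + math.exp(-t))


def perceptron_network(x: Vector, c: Vector, v: Sequence[Vector], b: Vector) -> float:
    # Hypothetical helper: f_n(x) = sum_i c_i * sigma(v_i . x + b_i), n = len(c).
    if not (len(c) == len(v) == len(b)):
        raise ValueError("need one weight vector and one bias per hidden unit")
    return sum(ci * logistic(_dot(vi, x) + bi) for ci, vi, bi in zip(c, v, b))


def gaussian_rbf_network(x: Vector, c: Vector, centres: Sequence[Vector], widths: Vector) -> float:
    # Hypothetical helper: f_n(x) = sum_i c_i * exp(-b_i * ||x - v_i||^2), n = len(c).
    if not (len(c) == len(centres) == len(widths)):
        raise ValueError("need one centre and one width per hidden unit")
    total = 0.0
    for ci, vi, bi in zip(c, centres, widths):
        diff = [xj - vj for xj, vj in zip(x, vi)]
        total += ci * math.exp(-bi * _dot(diff, diff))
    return total


# Usage: a 2-unit perceptron network and a 1-unit Gaussian network on R^2.
print(perceptron_network([0.5, -1.0], [1.0, -1.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]))
print(gaussian_rbf_network([1.0, 0.0], [3.0], [[1.0, 0.0]], [5.0]))
```

Increasing n (adding hidden units) enlarges the set of functions the network can represent. The approximation-rate results surveyed here bound how fast the best achievable error can shrink as n grows.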




Copyright information

© 2013 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Kainen, P.C., Kůrková, V., Sanguineti, M. (2013). Approximating Multivariable Functions by Feedforward Neural Nets. In: Bianchini, M., Maggini, M., Jain, L. (eds) Handbook on Neural Information Processing. Intelligent Systems Reference Library, vol 49. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-36657-4_5


DOI: https://doi.org/10.1007/978-3-642-36657-4_5

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-36656-7

Online ISBN: 978-3-642-36657-4

eBook Packages: Engineering, Engineering (R0)