Abstract

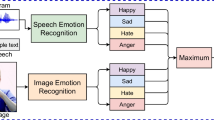

Real human-computer interaction systems that rely on several modalities face the problem that not every information channel delivers data at regular time steps. Nevertheless, an estimate of the current user state is required at any time so that the system can respond instantaneously on the basis of whichever modalities are available. A novel approach to the decision fusion of fragmentary classifications is therefore proposed and empirically evaluated on the audio and video signals of a corpus of non-acted user behavior. It is shown that visual and prosodic analysis successfully complement each other, leading to outstanding performance of the fusion architecture.
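To make the idea of fusing fragmentary decisions concrete, here is a minimal sketch built on assumptions made for illustration only. It is not the fusion method proposed in this paper. Each modality-specific classifier (for example, a hypothetical prosodic classifier and a hypothetical facial classifier) emits a probability for one user state at some time steps and nothing (`None`) at others. At each step the available outputs are combined by a weighted average, and the result is folded into a running estimate by exponential smoothing. When no modality reports, the previous estimate is held, so an estimate exists at any time. The function name `fuse_fragmentary` and the parameters `weights`, `alpha` and `prior` are hypothetical.

```python
from typing import List, Optional, Sequence


def fuse_fragmentary(
    streams: Sequence[Sequence[Optional[float]]],
    weights: Sequence[float],
    alpha: float = 0.3,
    prior: float = 0.5,
) -> List[float]:
    """Fuse per-modality class probabilities that may be missing (None).

    Hypothetical sketch: a weighted average of the modalities available at
    each time step, folded into a running estimate by exponential smoothing.
    When no modality reports, the previous estimate is held.
    """
    if not streams:
        return []
    if len(weights) != len(streams):
        raise ValueError("need exactly one weight per stream")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    n_steps = len(streams[0])
    if any(len(s) != n_steps for s in streams):
        raise ValueError("all streams must cover the same time steps")

    estimate = prior  # belief before any observation arrives
    fused: List[float] = []
    for t in range(n_steps):
        # Weighted average over the modalities that delivered a decision at t.
        num = 0.0
        den = 0.0
        for stream, w in zip(streams, weights):
            p = stream[t]
            if p is not None:
                num += w * p
                den += w
        if den > 0.0:
            observation = num / den
            estimate = (1.0 - alpha) * estimate + alpha * observation
        # Otherwise no modality is available: keep the last estimate.
        fused.append(estimate)
    return fused


if __name__ == "__main__":
    audio = [0.8, None, None, 0.6]  # hypothetical prosodic classifier output
    video = [None, 0.4, None, 0.2]  # hypothetical facial classifier output
    print(fuse_fragmentary([audio, video], weights=[1.0, 1.0], alpha=0.5))
```

Consider an audio stream `[0.8, None, None, 0.6]` and a video stream `[None, 0.4, None, 0.2]`, with equal weights, `alpha=0.5` and `prior=0.5`. The fused estimates are 0.65, 0.525, 0.525 and 0.4625. At the third step neither modality reports, so the previous value is simply held. A probabilistic temporal model could replace the exponential smoothing. The averaging rule is used here only because it keeps the sketch short.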

Copyright information

© 2013 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Krell, G. et al. (2013). Fusion of Fragmentary Classifier Decisions for Affective State Recognition. In: Schwenker, F., Scherer, S., Morency, LP. (eds) Multimodal Pattern Recognition of Social Signals in Human-Computer-Interaction. MPRSS 2012. Lecture Notes in Computer Science, vol 7742. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-37081-6_13

DOI: https://doi.org/10.1007/978-3-642-37081-6_13

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-37080-9

Online ISBN: 978-3-642-37081-6

eBook Packages: Computer Science, Computer Science (R0)