Abstract

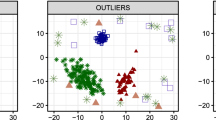

In several pattern classification problems, we encounter training datasets with an imbalanced class distribution and outliers, both of which can hinder classifier performance. In this paper, we propose classification schemes based on pre-processing the data with Novel Pattern Synthesis (NPS), with the aim of improving performance on such datasets. We provide a formal framework for characterizing class imbalance and outlier elimination. Specifically, we examine the role of NPS in two tasks: outlier elimination and handling the class imbalance problem. In NPS, the k nearest neighbours of every pattern are found, and a weighted average of those neighbours is taken to form a synthesized pattern. We find that the classification accuracy on the minority class increases in the presence of synthesized patterns. However, finding nearest neighbours in high-dimensional datasets is challenging. Hence, we use Latent Dirichlet Allocation to reduce the dimensionality of the dataset. An extensive experimental evaluation on 25 real-world imbalanced datasets shows that pre-processing the data with NPS is effective and has a greater impact on minority-class classification accuracy in imbalanced learning. We also observe that NPS outperforms the state-of-the-art methods for imbalanced classification. Experiments on 9 real-world datasets with outliers demonstrate that the NPS approach not only substantially increases detection performance but is also relatively scalable to large datasets in comparison with state-of-the-art outlier detection methods.
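The synthesis step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `synthesize_patterns`, the Euclidean distance, the inverse-distance weighting, and the exclusion of a pattern from its own neighbour set are all assumptions made for illustration, since the abstract states only that a weighted average of the k nearest neighbours is taken.

```python
import math


def synthesize_patterns(patterns, k=3):
    """Return one synthesized pattern per input pattern.

    Each synthesized pattern is a weighted average of the k nearest
    neighbours of the original pattern.
    ASSUMPTIONS (not stated in the abstract): Euclidean distance,
    inverse-distance weights, and the pattern itself is not counted
    among its own neighbours.
    """
    eps = 1e-12  # avoids division by zero when two patterns coincide
    synthesized = []
    for i, p in enumerate(patterns):
        # Distance from p to every other pattern, paired with its index.
        dists = sorted(
            (math.dist(p, q), j) for j, q in enumerate(patterns) if j != i
        )
        nearest = dists[:k]
        # Closer neighbours receive larger weights.
        weights = [1.0 / (d + eps) for d, _ in nearest]
        total = sum(weights)
        # Weighted average of the neighbours, one coordinate at a time.
        new_pattern = [
            sum(w * patterns[j][a] for w, (_, j) in zip(weights, nearest)) / total
            for a in range(len(p))
        ]
        synthesized.append(new_pattern)
    return synthesized


# Toy data: three nearby points and one far-away point.
points = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [10.0, 10.0]]
for original, synthetic in zip(points, synthesize_patterns(points, k=2)):
    print(original, "->", synthetic)
```

In this toy run, the far-away point is replaced by an average of points from the dense region. This gives one intuition for how synthesis could dampen outliers, although the abstract does not describe the mechanism in detail. For class imbalance, one plausible use is to run the function on the minority-class patterns only and add the results to the training set.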

Copyright information

© 2013 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Kokkula, S., Musti, N.M. (2013). Classification and Outlier Detection Based on Topic Based Pattern Synthesis. In: Perner, P. (ed.) Machine Learning and Data Mining in Pattern Recognition. MLDM 2013. Lecture Notes in Computer Science, vol 7988. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-39712-7_8

DOI: https://doi.org/10.1007/978-3-642-39712-7_8

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-39711-0

Online ISBN: 978-3-642-39712-7

eBook Packages: Computer Science (R0)