Abstract

Since the introduction of the backpropagation algorithm as a learning rule for neural networks, much effort has been spent on developing faster alternatives. Typically, the proposed variations use a fixed strategy, such as adaptively changing the learning rates or using second-order information about the error surface. If the chosen heuristic does not fit the actual shape of the error surface, the computed weight changes will be far from the optimal ones.

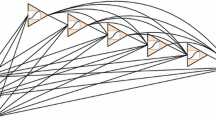

In this paper, we propose a hybrid learning algorithm that relies primarily on adaptive step sizes for the weight changes, but adaptively incorporates second-order information about the error surface when a valley of the error function is reached. The algorithm combines RPROP with one-dimensional secant steps of the kind used extensively by Quickprop; hence its name, QRPROP.
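The abstract does not spell out QRPROP's exact update rule, so the following is only a rough illustration of how its two named ingredients might fit together, under our own assumptions. Each weight gets an RPROP-style step size that grows while its gradient keeps the same sign. When the gradient changes sign (taken here as the signal that a valley has been crossed), the weight instead takes a Quickprop-style secant step Δw(t) = g(t) / (g(t−1) − g(t)) · Δw(t−1), which jumps to the minimum of the parabola fitted through the two most recent slopes. The function name `hybrid_step`, this switching criterion, and the parameter values (η+ = 1.2, η− = 0.5, step bounds 1e-6 and 50, the usual RPROP defaults) are assumptions, not the authors' published method.

```python
import numpy as np


def hybrid_step(w, grad, prev_grad, step, prev_dw,
                eta_plus=1.2, eta_minus=0.5,
                step_min=1e-6, step_max=50.0):
    """One update of a hypothetical RPROP + secant hybrid (illustrative only).

    w, grad, prev_grad, step, prev_dw are float arrays of the same shape.
    Returns (new_w, new_step, dw).
    """
    product = grad * prev_grad
    new_step = step.copy()
    dw = np.zeros_like(w)

    # Same gradient sign: still heading downhill, so enlarge the step (RPROP)
    # and move against the sign of the gradient.
    same = product > 0
    new_step[same] = np.minimum(step[same] * eta_plus, step_max)
    dw[same] = -np.sign(grad[same]) * new_step[same]

    # Sign change: assume a valley was crossed and take a secant step
    # (Quickprop form). Because the two slopes have opposite signs, the
    # factor g / (g_prev - g) lies in (-1, 0), so the step always backtracks
    # by less than the previous weight change.
    flip = product < 0
    dw[flip] = grad[flip] / (prev_grad[flip] - grad[flip]) * prev_dw[flip]
    new_step[flip] = np.maximum(step[flip] * eta_minus, step_min)

    # No previous gradient information (first step, or a zero gradient):
    # plain sign-based step with the current step size.
    rest = product == 0
    dw[rest] = -np.sign(grad[rest]) * step[rest]

    return w + dw, new_step, dw


# Example: minimise E(w) = 0.5 * ||w - target||^2, whose gradient is w - target.
target = np.array([3.0, -2.0])
w = np.array([0.0, 10.0])
step = np.full(2, 0.1)
prev_grad = np.zeros(2)
prev_dw = np.zeros(2)
for _ in range(200):
    grad = w - target
    w, step, prev_dw = hybrid_step(w, grad, prev_grad, step, prev_dw)
    prev_grad = grad
print(w)
```

On a quadratic like this one the slope is linear in each weight, so the secant step lands on the minimum as soon as a weight overshoots; on a real error surface it is only an estimate of where the valley floor lies.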



Copyright information

© 1994 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Pfister, M., Rojas, R. (1994). Hybrid Learning Algorithms for Feed-Forward Neural Networks. In: Reusch, B. (eds) Fuzzy Logik. Informatik aktuell. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-79386-8_8


DOI: https://doi.org/10.1007/978-3-642-79386-8_8

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-58649-4

Online ISBN: 978-3-642-79386-8

eBook Packages: Springer Book Archive