Abstract

The drawback of many state-of-the-art classifiers is that their models are not easily interpretable. We recently introduced Representative Prototype Sets (RPS), which are simple base classifiers that allow for a systematic description of data sets by exhaustive enumeration of all possible classifiers.

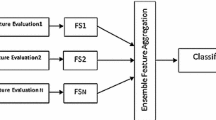

The major focus of the previous work was on a descriptive characterization of low-cardinality data sets. In the context of prediction, a lack of accuracy of the simple RPS model can be compensated by accumulating the decisions of several classifiers. Here, we now investigate ensembles of RPS base classifiers in a predictive setting on data sets of high dimensionality and low cardinality. The performance of several selection and fusion strategies is evaluated. We visualize the decisions of the ensembles in an exemplary scenario and illustrate links between visual data set inspection and prediction.

Christoph Müssel and Ludwig Lausser have contributed equally to this work.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

References

Bittner, M., Meltzer, P., Chen, Y., Jiang, Y., Seftor, E., Hendrix, M., et al. (2000). Molecular classification of cutaneous malignant melanoma by gene expression profiling. Nature, 406, 536–540.

Breiman, L. (1996). Bagging predictors. Machine Learning, 24, 123–140.

Brighton, H., & Mellish, C. (2002). Advances in instance selection for instance-based learning algorithms. Data Mining and Knowledge Discovery, 6, 153–172.

Dasarathy, B. (1991). Nearest neighbor (NN) norms: NN pattern classification techniques. Los Alamitos: IEEE Computer Society.

Fix, E., & Hodges, J. (1951). Discriminatory analysis: Nonparametric discrimination: Consistency properties. Technical Report Project 21-49-004, Report Number 4, USAF School of Aviation Medicine, Randolf Field, TX.

Freund, Y., & Schapire, R. (1995). A decision-theoretic generalization of on-line learning and an application to boosting. In P. Vitányi (Ed.), Computational learning theory. Lecture notes in artificial intelligence (Vol. 904, pp. 23–37). Berlin: Springer.

Hart, P. (1968). The condensed nearest neighbor rule. IEEE Transactions on Information Theory, 14, 515–516.

Huang, Y., & Suen, C. (1995). A method of combining multiple experts for the recognition of unconstrained handwritten numerals. IEEE Transactions on Pattern Analysis and Machine Intelligence, 17, 90–94.

Kohonen, T. (1988). Learning vector quantization. Neural Networks, 1, 303.

Lausser, L., Müssel, C., & Kestler, H.A. (2012). Representative prototype sets for data characterization and classification. In N. Mana, F. Schwenker, & E. Trentin (Eds.), Artificial neural networks in pattern recognition (ANNPR12). Lecture notes in artificial intelligence (Vol. 7477, pp. 36–47). Berlin: Springer.

Lausser, L., Müssel, C., & Kestler, H. A. (2014). Identifying predictive hubs to condense the training set of k-nearest neighbour classifiers. Computational Statistics, 29(1–2), 81–95.

Müssel, C., Lausser, L., Maucher, M., & Kestler H. A. (2012). Multi-objective parameter selection for classifiers. Journal of Statistical Software, 46(5), 1–27.

Notterman, D., Alon, U., Sierk, A., & Levine, A. (2001). Transcriptional gene expression profiles of colorectal adenoma, adenocarcinoma, and normal tissue examined by oligonucleotide arrays. Cancer Research, 61, 3124–3130.

Schwenker, F., & Kestler, H. A. (2002) Analysis of support vectors helps to identify borderline patients in classification studies. In Computers in cardiology (pp. 305–308). Piscataway: IEEE Press.

Shipp, M., Ross, K., Tamayo, P., Weng, A., Kutok, J., Aguiar, R. C., et al. (2002). Diffuse large b-cell lymphoma outcome prediction by gene-expression profiling and supervised machine learning. Nature Medicine, 8, 68–74.

Tibshirani, R., Hastie, T., Narasimhan, B., & Chu, G. (2002). Diagnosis of multiple cancer types by shrunken centroids of gene expression. Proceedings of the National Academy of Sciences, 99, 6567–6572.

Vapnik, V. (1998). Statistical learning theory. New York: Wiley.

Wang, Q., Wen, Y.-G., Li, D.-P., Xia, J., Zhou, C.-Z., Yan, D.-W., et al. (2012). Upregulated INHBA expression is associated with poor survival in gastric cancer. Medical Oncology, 29, 77–83.

Webb, A. R. (2002). Statistical pattern recognition (2nd ed.). Chichester: Wiley.

Acknowledgements

This work is supported by the German Science Foundation (SFB 1074, Project Z1) to HAK, and the Federal Ministry of Education and Research (BMBF, Gerontosys II, Forschungskern SyStaR, project ID 0315894A) to HAK.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Müssel, C., Lausser, L., Kestler, H.A. (2015). Ensembles of Representative Prototype Sets for Classification and Data Set Analysis. In: Lausen, B., Krolak-Schwerdt, S., Böhmer, M. (eds) Data Science, Learning by Latent Structures, and Knowledge Discovery. Studies in Classification, Data Analysis, and Knowledge Organization. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-44983-7_29

Download citation

DOI: https://doi.org/10.1007/978-3-662-44983-7_29

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-44982-0

Online ISBN: 978-3-662-44983-7

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)