Abstract

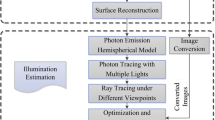

Integrating virtual objects so that they appear to be part of the real world is the basis of photo-realistic augmented reality (AR) scene development. Physical illumination information, environment features, and the shading materials of virtual objects are considered together to achieve a perceptually coherent final scene. Other work has investigated this problem while assuming that the scene geometry is available beforehand, that light locations are pre-computed, or that execution is offline. In this paper, we combine our previous work on direct light detection with real-scene understanding features that provide occlusion, plane detection, and scene reconstruction for improved photo-realism. The system tackles several problems at once: (1) physics-based light polarization, (2) detection of the locations of incident lights, (3) simulation of reflected light, (4) definition of shading materials, and (5) geometric understanding of the real world. The system is validated by evaluating the geometric reconstruction accuracy, the direct illumination pose, the performance cost, and human perception.
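The first sub-problem listed above is physics-based light polarization. As a minimal sketch, and not the authors' implementation, the standard way to quantify linear polarization from four captures taken behind a linear polarizer rotated to 0°, 45°, 90°, and 135° is to compute the linear Stokes parameters, the degree of linear polarization (DoLP), and the angle of linear polarization (AoLP). The `classify_light` rule and its 0.1 threshold are hypothetical assumptions: they only illustrate the physical fact that reflection from dielectric surfaces tends to partially polarize light, whereas many light sources emit nearly unpolarized light.

```python
import math
from dataclasses import dataclass


@dataclass
class LinearPolarization:
    s0: float    # total intensity (first Stokes parameter)
    s1: float    # excess of 0° over 90° polarized light
    s2: float    # excess of 45° over 135° polarized light
    dolp: float  # degree of linear polarization: 0 (unpolarized) to 1 (fully polarized)
    aolp: float  # angle of linear polarization in radians, within (-pi/2, pi/2]


def stokes_from_polarizer(i0: float, i45: float, i90: float, i135: float) -> LinearPolarization:
    """Linear Stokes parameters from intensities captured behind a linear
    polarizer rotated to 0, 45, 90 and 135 degrees."""
    # Average of the two redundant total-intensity estimates i0+i90 and i45+i135.
    s0 = 0.5 * (i0 + i45 + i90 + i135)
    s1 = i0 - i90
    s2 = i45 - i135
    dolp = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
    aolp = 0.5 * math.atan2(s2, s1)
    return LinearPolarization(s0, s1, s2, dolp, aolp)


def malus(intensity: float, light_angle: float, polarizer_angle: float) -> float:
    """Malus's law: intensity transmitted for fully linearly polarized light."""
    return intensity * math.cos(polarizer_angle - light_angle) ** 2


def classify_light(p: LinearPolarization, threshold: float = 0.1) -> str:
    """Hypothetical heuristic (assumption, not from the paper): weakly polarized
    samples are treated as direct light, strongly polarized ones as reflected."""
    return "direct" if p.dolp < threshold else "reflected"


if __name__ == "__main__":
    angles = [math.radians(a) for a in (0, 45, 90, 135)]
    # Fully polarized light at 30 degrees, simulated with Malus's law.
    polarized = stokes_from_polarizer(*(malus(1.0, math.radians(30), a) for a in angles))
    # Unpolarized light: every polarizer orientation passes half the intensity.
    unpolarized = stokes_from_polarizer(0.5, 0.5, 0.5, 0.5)
    print(f"polarized:   DoLP={polarized.dolp:.2f}, "
          f"AoLP={math.degrees(polarized.aolp):.1f} deg, {classify_light(polarized)}")
    print(f"unpolarized: DoLP={unpolarized.dolp:.2f}, {classify_light(unpolarized)}")
```

Partially polarized light falls between these two cases. A fixed threshold is fragile: emitters that are themselves polarized, such as LCD panels, would be misclassified as reflections, so any practical rule would need calibration against the scene.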

References

Alhakamy, A., Tuceryan, M.: AR360: dynamic illumination for augmented reality with real-time interaction. In: Proceedings of The 2019 IEEE 2nd International Conference on Information and Computer Technologies (ICICT), pp. 170–175. IEEE Press (2019). https://doi.org/10.1109/INFOCT.2019.87109822

Alhakamy, A., Tuceryan, M.: CubeMap360: interactive global illumination for augmented reality in dynamic environment. In: 2019 SoutheastCon, pp. 1–8. IEEE Press (2019). https://doi.org/10.1109/SoutheastCon42311.2019.9020588

Alhakamy, A., Tuceryan, M.: An empirical evaluation of the performance of real-time illumination approaches: realistic scenes in augmented reality. In: De Paolis, L.T., Bourdot, P. (eds.) AVR 2019. LNCS, vol. 11614, pp. 179–195. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-25999-0_16

Alhakamy, A., Tuceryan, M.: Polarization-based illumination detection for coherent augmented reality scene rendering in dynamic environments. In: Gavrilova, M., Chang, J., Thalmann, N.M., Hitzer, E., Ishikawa, H. (eds.) CGI 2019. LNCS, vol. 11542, pp. 3–14. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-22514-8_1

Tuceryan, M., et al.: Calibration requirements and procedures for a monitor-based augmented reality system. IEEE Trans. Visual Comput. Graphics 1(3), 255–273 (1995)

Breen, D.E., Whitaker, R.T., Rose, E., Tuceryan, M.: Interactive occlusion and automatic object placement for augmented reality. In: Computer Graphics Forum, vol. 15, pp. 11–22. Wiley Online Library (1996)

Winston, P.H., Horn, B.: The psychology of computer vision, Chap. Obtaining shape from shading information. McGraw-Hill Companies, New York (1975)

Brom, J.M., Rioux, F.: Polarized light and quantum mechanics: an optical analog of the Stern–Gerlach experiment. Chem. Educ. 7(4), 200–204 (2002)

Chen, H., Wolff, L.B.: Polarization phase-based method for material classification in computer vision. Int. J. Comput. Vision 28(1), 73–83 (1998)

Dai, Y., Hou, W.: Research on configuration arrangement of spatial interface in mobile phone augmented reality environment. In: Tang, Y., Zu, Q., Rodríguez García, J.G. (eds.) HCC 2018. LNCS, vol. 11354, pp. 48–59. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-15127-0_5

Debevec, P.: Rendering synthetic objects into real scenes: bridging traditional and image-based graphics with global illumination and high dynamic range photography. In: Proceedings of ACM SIGGRAPH 1998, the 25th Annual Conference on Computer Graphics and Interactive Techniques, vol. 10, pp. 189–198. ACM (1998). ISBN 0-89791-999-8

Dey, T.K.: Curve and Surface Reconstruction: Algorithms with Mathematical Analysis, vol. 23. Cambridge University Press, Cambridge (2006)

Fan, C.L., Lee, J., Lo, W.C., Huang, C.Y., Chen, K.T., Hsu, C.H.: Fixation prediction for 360 video streaming in head-mounted virtual reality. In: Proceedings of the 27th Workshop on Network and Operating Systems Support for Digital Audio and Video, pp. 67–72. ACM (2017)

Franke, T.A.: Delta light propagation volumes for mixed reality. In: 2013 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 125–132, October 2013. https://doi.org/10.1109/ISMAR.2013.6671772

Gruber, L., Langlotz, T., Sen, P., Höllerer, T., Schmalstieg, D.: Efficient and robust radiance transfer for probeless photorealistic augmented reality. In: 2014 IEEE Virtual Reality (VR), pp. 15–20. IEEE (2014)

Gruber, L., Richter-Trummer, T., Schmalstieg, D.: Real-time photometric registration from arbitrary geometry. In: 2012 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 119–128. IEEE (2012)

Gruber, L., Ventura, J., Schmalstieg, D.: Image-space illumination for augmented reality in dynamic environments. In: 2015 IEEE Virtual Reality (VR), pp. 127–134. IEEE (2015)

Handa, A., Whelan, T., McDonald, J., Davison, A.J.: A benchmark for RGB-D visual odometry, 3D reconstruction and SLAM. In: 2014 IEEE International Conference on Robotics and Automation (ICRA), pp. 1524–1531. IEEE (2014)

Jiddi, S., Robert, P., Marchand, E.: Estimation of position and intensity of dynamic light sources using cast shadows on textured real surfaces. In: 2018 25th IEEE International Conference on Image Processing (ICIP), pp. 1063–1067, October 2018. https://doi.org/10.1109/ICIP.2018.8451078

Kán, P.: High-quality real-time global illumination in augmented reality. Ph.D. thesis (2014)

Kán, P., Kaufmann, H.: High-quality reflections, refractions, and caustics in augmented reality and their contribution to visual coherence. In: 2012 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 99–108. IEEE (2012)

Keller, A.: Instant Radiosity. In: Proceedings of the 24th annual conference on Computer graphics and interactive techniques, pp. 49–56. ACM Press/Addison-Wesley Publishing Co. (1997)

Meilland, M., Barat, C., Comport, A.: 3D high dynamic range dense visual SLAM and its application to real-time object re-lighting. In: 2013 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 143–152, October 2013. https://doi.org/10.1109/ISMAR.2013.6671774

Mladenov, B., Damiani, L., Giribone, P., Revetria, R.: A short review of the SDKs and wearable devices to be used for AR application for industrial working environment. Proc. World Congress Eng. Comput. Sci. 1, 23–25 (2018)

Nerurkar, E., Lynen, S., Zhao, S.: System and method for concurrent odometry and mapping. US Patent App. 15/595,617, 23 November 2017

Ngo Thanh, T., Nagahara, H., Taniguchi, R.i.: Shape and light directions from shading and polarization. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2310–2318 (2015)

Nowrouzezahrai, D., Geiger, S., Mitchell, K., Sumner, R., Jarosz, W., Gross, M.: Light factorization for mixed-frequency shadows in augmented reality. In: 2011 10th IEEE International Symposium on Mixed and Augmented Reality, pp. 173–179, October 2011. https://doi.org/10.1109/ISMAR.2011.6092384

Okabe, A., Boots, B., Sugihara, K., Chiu, S.N.: Spatial Tessellations: Concepts and Applications of Voronoi Diagrams, vol. 501. Wiley, Hoboken (2009)

Parisotto, E., Chaplot, D.S., Zhang, J., Salakhutdinov, R.: Global pose estimation with an attention-based recurrent network. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 350–35009, June 2018. https://doi.org/10.1109/CVPRW.2018.00061

Ramamoorthi, R., Hanrahan, P.: An efficient representation for irradiance environment maps. In: Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques, pp. 497–500. ACM (2001)

Rettenmund, D., Fehr, M., Cavegn, S., Nebiker, S.: Accurate visual localization in outdoor and indoor environments exploiting 3D image spaces as spatial reference. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. XLII-1, 355–362 (2018). https://doi.org/10.5194/isprs-archives-XLII-1-355-2018

Rhee, T., Petikam, L., Allen, B., Chalmers, A.: MR360: mixed reality rendering for 360° panoramic videos. IEEE Trans. Visual. Comput. Graphics 23(4), 1379–1388 (2017)

Rohmer, K., Grosch, T.: Tiled frustum culling for differential rendering on mobile devices. In: 2015 IEEE International Symposium on Mixed and Augmented Reality, pp. 37–42, September 2015. https://doi.org/10.1109/ISMAR.2015.13

Rohmer, K., Jendersie, J., Grosch, T.: Natural environment illumination: coherent interactive augmented reality for mobile and non-mobile devices. IEEE Trans. Visual Comput. Graphics 23(11), 2474–2484 (2017). https://doi.org/10.1109/TVCG.2017.2734426

Rohmer, K., Büschel, W., Dachselt, R., Grosch, T.: Interactive near-field illumination for photorealistic augmented reality on mobile devices. In: 2014 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 29–38. IEEE (2014)

Schneider, T., Li, M., Burri, M., Nieto, J., Siegwart, R., Gilitschenski, I.: Visual-inertial self-calibration on informative motion segments. In: 2017 IEEE International Conference on Robotics and Automation (ICRA), pp. 6487–6494. IEEE (2017)

Shen, L., Zhao, Y., Peng, Q., Chan, J.C.W., Kong, S.G.: An iterative image de-hazing method with polarization. IEEE Trans. Multimed. 21(5), 1093–1107 (2018)

Wang, J., Liu, H., Cong, L., Xiahou, Z., Wang, L.: CNN-monofusion: on-line monocular dense reconstruction using learned depth from single view. In: 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), pp. 57–62, October 2018. https://doi.org/10.1109/ISMAR-Adjunct.2018.00034

Weingarten, J., Siegwart, R.: EKF-based 3D SLAM for structured environment reconstruction. In: 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 3834–3839. IEEE (2005)

Yen, S.C., Fan, C.L., Hsu, C.H.: Streaming 360° videos to head-mounted virtual reality using DASH over QUIC transport protocol. In: Proceedings of the 24th ACM Workshop on Packet Video, PV 2019, pp. 7–12. ACM, New York (2019). https://doi.org/10.1145/3304114.3325616

Zerner, M.C.: Semiempirical molecular orbital methods. Rev. Comput. Chem. 2, 313–365 (1991)

Zhang, C.: CuFusion2: accurate and denoised volumetric 3D object reconstruction using depth cameras. IEEE Access 7, 49882–49893 (2019). https://doi.org/10.1109/ACCESS.2019.2911119

Acknowledgement

The first author is very grateful to the PhD committee for their support and encouragement: Dr. Mihran Tuceryan (committee chair), Dr. Shiaofen Fang, Dr. Jiang Yu Zheng, and Dr. Snehasis Mukhopadhyay. This research could not have been completed without the sponsorship of the Saudi Arabian Cultural Mission (SACM). The authors also thank the CGI 2019 committee for their invitation to submit an extended version of the paper [4] to Transactions on Computational Science.

Copyright information

© 2020 Springer-Verlag GmbH Germany, part of Springer Nature

About this chapter

Cite this chapter

Alhakamy, A., Tuceryan, M. (2020). Physical Environment Reconstruction Beyond Light Polarization for Coherent Augmented Reality Scene on Mobile Devices. In: Gavrilova, M., Tan, C., Chang, J., Thalmann, N. (eds) Transactions on Computational Science XXXVII. Lecture Notes in Computer Science, vol 12230. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-61983-4_2

DOI: https://doi.org/10.1007/978-3-662-61983-4_2

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-61982-7

Online ISBN: 978-3-662-61983-4

eBook Packages: Computer Science, Computer Science (R0)