Abstract

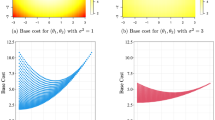

Assignment methods are at the heart of many algorithms for unsupervised learning and clustering, in particular the well-known K-means and Expectation-Maximization (EM) algorithms. In this work, we study several different methods of assignment, including the “hard” assignments used by K-means and the “soft” assignments used by EM. While it is known that K-means minimizes the distortion on the data and EM maximizes the likelihood, little is known about the systematic differences in behavior between the two algorithms. Here we shed light on these differences via an information-theoretic analysis. The cornerstone of our results is a simple decomposition of the expected distortion, showing that K-means (and its extension for inferring general parametric densities from unlabeled sample data) must implicitly manage a trade-off between how similar the data assigned to each cluster are and how the data are balanced among the clusters. How well the data are balanced is measured by the entropy of the partition defined by the hard assignments. In addition to letting us predict and verify systematic differences between K-means and EM on specific examples, the decomposition allows us to give a rather general argument showing that K-means will consistently find densities with less “overlap” than EM. We also study a third natural assignment method, which we call posterior assignment, that is close in spirit to the soft assignments of EM but leads to a surprisingly different algorithm.
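The distinction between hard and soft assignments, and the entropy of the partition induced by hard assignments, can be illustrated with a minimal sketch. This is not the chapter's own code: it assumes one-dimensional data, unit-variance Gaussian components, and hypothetical helper names (`hard_assign`, `soft_assign`, `partition_entropy`) chosen only for illustration.

```python
import math

def gaussian_pdf(x, mean, std=1.0):
    """Density of a 1-D Gaussian (unit variance by default; an assumption)."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def hard_assign(x, means):
    """K-means-style hard assignment: index of the nearest mean."""
    return min(range(len(means)), key=lambda j: (x - means[j]) ** 2)

def soft_assign(x, means, weights):
    """EM-style soft assignment: posterior probability of each component."""
    joint = [w * gaussian_pdf(x, m) for m, w in zip(means, weights)]
    total = sum(joint)
    return [j / total for j in joint]

def partition_entropy(labels, k):
    """Entropy (in bits) of the partition defined by hard assignments."""
    n = len(labels)
    entropy = 0.0
    for j in range(k):
        p = labels.count(j) / n
        if p > 0:
            entropy -= p * math.log2(p)
    return entropy

# Illustrative data and parameters (made up for this sketch).
data = [-2.0, -1.5, 1.0, 2.5]
means = [-1.0, 1.0]
weights = [0.5, 0.5]

labels = [hard_assign(x, means) for x in data]
responsibilities = [soft_assign(x, means, weights) for x in data]
balance = partition_entropy(labels, len(means))
```

In this sketch a perfectly balanced two-way split yields a partition entropy of one bit, while a split that puts every point in one cluster yields zero; the soft assignments instead spread each point's weight across both components.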

Copyright information

© 1998 Springer Science+Business Media Dordrecht

About this chapter

Cite this chapter

Kearns, M., Mansour, Y., Ng, A.Y. (1998). An Information-Theoretic Analysis of Hard and Soft Assignment Methods for Clustering. In: Jordan, M.I. (eds) Learning in Graphical Models. NATO ASI Series, vol 89. Springer, Dordrecht. https://doi.org/10.1007/978-94-011-5014-9_18

DOI: https://doi.org/10.1007/978-94-011-5014-9_18

Publisher Name: Springer, Dordrecht

Print ISBN: 978-94-010-6104-9

Online ISBN: 978-94-011-5014-9

eBook Packages: Springer Book Archive