Abstract

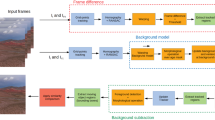

Dynamic background updating is one of the major challenges in moving object detection, where no fixed reference background model is available. The maintained background model must be updated whenever moving objects enter or leave the background. This paper proposes a redefined codebook model that aims to eliminate the ghost regions left behind when a non-permanent background object starts to move. Background codewords, which the original codebook model routinely deletes from the set of codewords, are retained in this method, while foreground codewords are deleted, which leads to ghost elimination. The method also reduces memory requirements significantly without affecting object detection, since only foreground codewords are deleted and not background ones. The method has been tested for robust detection on various videos containing multiple, different kinds of moving backgrounds. Compared to existing multimode modeling techniques, our algorithm eliminates the ghost regions left behind when non-permanent background objects start to move. For performance evaluation, we used a similarity measure on video sequences with dynamic backgrounds and compared the results against three widely used background subtraction algorithms.
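The abstract does not define the similarity measure used for evaluation. As an illustration only, the sketch below assumes it is the overlap ratio commonly used to score background subtraction output: the number of pixels marked foreground in both the detected mask and the ground-truth mask, divided by the number marked foreground in either. The function name `similarity`, the nested-list mask representation, and the empty-mask convention are assumptions made for this example, not details taken from the paper.

```python
def similarity(detected, ground_truth):
    """Overlap ratio |A and B| / |A or B| between two binary foreground masks.

    Both masks are assumed to be equally sized 2D sequences of 0/1 values
    (hypothetical representation chosen for this sketch).
    """
    intersection = 0
    union = 0
    for row_d, row_g in zip(detected, ground_truth):
        for d, g in zip(row_d, row_g):
            d, g = bool(d), bool(g)
            intersection += d and g   # pixel is foreground in both masks
            union += d or g           # pixel is foreground in at least one mask
    # Assumed convention: two empty masks count as perfect agreement.
    return 1.0 if union == 0 else intersection / union


detected = [[0, 1, 1],
            [0, 1, 0]]
ground_truth = [[0, 1, 0],
                [0, 1, 1]]
print(similarity(detected, ground_truth))  # 2 shared pixels / 4 in the union = 0.5
```

Under this assumed definition the score ranges from 0 (no overlap) to 1 (identical masks), so a ghost region left in the detected mask enlarges the union without enlarging the intersection and lowers the score.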




© 2017 Springer Science+Business Media Singapore

About this paper

Cite this paper

Sharma, V., Nain, N., Badal, T. (2017). A Redefined Codebook Model for Dynamic Backgrounds. In: Raman, B., Kumar, S., Roy, P., Sen, D. (eds) Proceedings of International Conference on Computer Vision and Image Processing. Advances in Intelligent Systems and Computing, vol 460. Springer, Singapore. https://doi.org/10.1007/978-981-10-2107-7_42


DOI: https://doi.org/10.1007/978-981-10-2107-7_42


Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-2106-0

Online ISBN: 978-981-10-2107-7

eBook Packages: Engineering, Engineering (R0)