Abstract

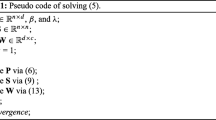

Clustering analysis is one of the most important tasks in statistics, machine learning, and image processing. Compared with clustering methods based on Euclidean geometry, spectral clustering places no restrictions on the shape of the data and can detect linearly non-separable patterns. However, because of its high computational complexity, spectral clustering is difficult to apply to large-scale data sets. Several methods have recently been proposed to accelerate spectral clustering. Among them, landmark-based spectral clustering is one of the most direct, and it achieves this speed-up without losing much of the information embedded in the data. Unfortunately, existing landmark-based spectral clustering methods do not exploit the prior knowledge embedded in a given similarity function. To address this limitation, a landmark-based spectral clustering method with local similarity representation is proposed. The proposed method first encodes each original data point by its most ‘similar’ landmarks, as measured by a given similarity function. It then performs singular value decomposition on the encoded data points to obtain the spectrally embedded data points. Finally, it runs k-means on the embedded data points to obtain the clustering results. Extensive experiments demonstrate the effectiveness and efficiency of the proposed method.
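The three-step pipeline described in the abstract (encode each point by its most similar landmarks, take an SVD of the encoding, run k-means) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: a Gaussian kernel stands in for "a given similarity function", landmarks are drawn uniformly at random from the data, and the column normalization before the SVD follows the common landmark-based spectral clustering recipe. The names `gaussian_similarity`, `landmark_spectral_clustering`, `n_landmarks`, `r`, and `sigma` are hypothetical.

```python
import numpy as np
from sklearn.cluster import KMeans


def gaussian_similarity(X, L, sigma):
    """Assumed similarity function: Gaussian kernel between rows of X and landmarks L."""
    # Squared Euclidean distances, shape (n_points, n_landmarks).
    sq = (X ** 2).sum(1)[:, None] + (L ** 2).sum(1)[None, :] - 2.0 * X @ L.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))


def landmark_spectral_clustering(X, n_clusters, n_landmarks=50, r=5, sigma=1.0, seed=0):
    """Hypothetical sketch of landmark-based spectral clustering with local similarity coding."""
    rng = np.random.default_rng(seed)
    # Assumption: landmarks are a uniform random sample of the data points.
    L = X[rng.choice(len(X), size=n_landmarks, replace=False)]

    # Step 1: keep only each point's r most similar landmarks (local similarity representation).
    S = gaussian_similarity(X, L, sigma)
    Z = np.zeros_like(S)
    top = np.argsort(-S, axis=1)[:, :r]          # indices of the r most similar landmarks
    rows = np.arange(len(X))[:, None]
    Z[rows, top] = S[rows, top]
    Z /= Z.sum(axis=1, keepdims=True) + 1e-12    # each row of the code sums to 1

    # Step 2: scale columns by landmark degree, then take the top left singular vectors.
    col_deg = Z.sum(axis=0)
    Z_hat = Z / np.sqrt(col_deg + 1e-12)
    U, _, _ = np.linalg.svd(Z_hat, full_matrices=False)  # singular values come sorted descending
    embedding = U[:, :n_clusters]

    # Step 3: run k-means on the spectral embedding.
    return KMeans(n_clusters=n_clusters, n_init=10, random_state=seed).fit_predict(embedding)
```

Because the SVD is taken of an n × p matrix with p landmarks (p much smaller than n), its cost grows roughly as O(np²) rather than the O(n³) of a full eigendecomposition of an n × n affinity matrix. Keeping only r entries per row also makes Z sparse; this sketch stores it densely for brevity.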

Acknowledgment

The authors gratefully acknowledge the financial support of the National Natural Science Foundation of China (Project Nos. 61672528, 61403405, 61232016, and 61170287).

Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Yin, W., Zhu, E., Zhu, X., Yin, J. (2017). Landmark-Based Spectral Clustering with Local Similarity Representation. In: Du, D., Li, L., Zhu, E., He, K. (eds) Theoretical Computer Science. NCTCS 2017. Communications in Computer and Information Science, vol 768. Springer, Singapore. https://doi.org/10.1007/978-981-10-6893-5_15

DOI: https://doi.org/10.1007/978-981-10-6893-5_15

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-6892-8

Online ISBN: 978-981-10-6893-5

eBook Packages: Computer Science, Computer Science (R0)