Abstract

In recent years, Kinect-based human posture recognition has attracted growing attention. However, existing methods have drawbacks, such as low recognition accuracy and a small number of recognizable postures. This paper proposes a novel hybrid method that combines image processing, a BP neural network, and the skeleton and depth data captured by Kinect v2 to recognize postures. We distinguish four types of postures (sitting cross-legged, kneeling or sitting, standing, and other postures) by using the natural ratios of human body parts, and separate kneeling from sitting by calculating the 3D spatial relation of feature points. Finally, we apply a BP neural network to recognize the lying and bending postures. The experimental results indicate that the method is robust and runs in real time, with a recognition accuracy of 98.98%.

1 Introduction

Human posture recognition plays an important role in human-computer interaction. Since Microsoft released the low-cost somatosensory device Kinect, many institutions and scholars have conducted Kinect-based research and experiments, aiming to find better ways of recognizing human posture and interacting with computers. These studies have achieved good results.

Some human posture recognition methods use depth images. The authors of [13, 14] predicted the 3D positions of body joints from a single depth image. The paper [15] combined several image processing techniques on depth images from a Kinect to recognize five distinct human postures. The authors of [12] estimated the posture and motion of the upper limbs from depth images.

Some human posture recognition methods use skeleton data. In [5], four types of features (lying, sitting, standing, bending) were extracted from skeleton data and used to recognize the body posture. The paper [10] proposed a human posture detection and recognition algorithm based on geometric features and 3D skeleton data, in which the postures are classified by a Support Vector Machine classifier.

Some human posture recognition methods use both depth images and skeleton data. The authors of [11] used anatomical landmarks and a human skeleton model, and estimated human posture from depth images and geodesic distances. The authors of [16] obtained 3D body features from the 3D coordinate information in depth images and identified 3D human postures with models of the skeleton joints.

Kinect v2 is a considerable improvement over Kinect v1, but its skeleton data is not entirely accurate. For instance, the skeleton data may be incorrect when some skeleton joints overlap others, as shown in Fig. 1(a) and (b). Several methods have been proposed to address this problem. The authors of [17] proposed a repair method, but it only handles the occlusion of a single joint. The methods of [7, 12] recognized posture by using depth data only, and the paper [15] did not use the Kinect SDK for human posture recognition. However, these methods have limitations, such as a small number of recognizable postures and low recognition accuracy. This paper proposes a novel hybrid method. Depth images and skeleton data are obtained through Kinect SDK 2.0 and combined with other methods and anthropometry, so that six postures (standing, sitting cross-legged, sitting, kneeling, bending and lying) can be recognized.

This paper is organized as follows: Sect. 2 introduces our method. Section 3 describes and discusses the experimental content, steps and results. Finally, the conclusion of our method is described in Sect. 4.

2 Our Method

2.1 Generation of the Body Height and the Body Center of Gravity

Kinect v2 can recognize and directly output the depth data of the humanoid area, without the need to separate the foreground from the background [2]. We extracted the humanoid area by using Kinect SDK 2.0 and calculated the body center of gravity \((x_{c}, y_{c})\), where \(x_{c}\) is the average of the x-coordinates of all non-black pixels and \(y_{c}\) is the average of their y-coordinates.
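The center-of-gravity computation amounts to averaging the pixel coordinates of the body region. A minimal sketch in Python with NumPy, assuming the humanoid area is available as a 2D array in which body pixels are non-zero; the function name `body_centroid` and the toy mask are illustrative and not part of the original program:

```python
import numpy as np

def body_centroid(mask):
    """Return (x_c, y_c): the mean column and mean row of all non-zero pixels."""
    ys, xs = np.nonzero(mask)  # row (y) and column (x) indices of body pixels
    if xs.size == 0:
        raise ValueError("mask contains no body pixels")
    return float(xs.mean()), float(ys.mean())

# Toy 6x6 mask with a body block covering rows 1-3 and columns 2-3.
mask = np.zeros((6, 6), dtype=np.uint8)
mask[1:4, 2:4] = 255
print(body_centroid(mask))  # → (2.5, 2.0)
```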

We obtained the human contour with the Canny operator. As shown in Fig. 2, the red rectangle in (a) marks the center of gravity of the human body area, and the red rectangle in (b) marks the center of gravity of the human contour. The height of the body contour is the difference (along the y-axis) between the highest point and the lowest point of the contour. When the center of gravity does not lie inside the human body area, we draw a horizontal line and a vertical line through it, as shown in Fig. 2(c). One of these lines has two points of intersection with the human contour, and the new center of gravity is the blue point, the midpoint of those two intersections.
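The height measurement and the center correction can be sketched as follows. This is an illustration under stated assumptions, not the original implementation: it works on the filled body mask rather than on the edge image, and it treats "two points of intersection with the contour" as "the line crosses the body in exactly one contiguous run of pixels". All function names are hypothetical.

```python
import numpy as np

def contour_height(mask):
    """Height in pixels: lowest body row minus highest body row."""
    rows = np.nonzero(mask.any(axis=1))[0]
    return int(rows[-1] - rows[0])

def _single_run_midpoint(line):
    """Midpoint index of the body pixels on a 1D line, or None unless they
    form exactly one contiguous run (one entry and one exit point)."""
    idx = np.nonzero(line)[0]
    if idx.size == 0 or np.any(np.diff(idx) > 1):
        return None
    return int((idx[0] + idx[-1]) // 2)

def corrected_center(mask, xc, yc):
    """Return the center itself if it lies on the body, else the midpoint of
    the single body run on the horizontal or vertical line through it."""
    xc, yc = int(round(xc)), int(round(yc))
    if mask[yc, xc]:
        return xc, yc
    mid_x = _single_run_midpoint(mask[yc, :])  # horizontal line
    if mid_x is not None:
        return mid_x, yc
    mid_y = _single_run_midpoint(mask[:, xc])  # vertical line
    if mid_y is not None:
        return xc, mid_y
    return xc, yc  # assumption: keep the original point if neither line qualifies

# Arch-shaped toy body: the centroid falls in the hollow under the arch.
arch = np.zeros((7, 7), dtype=np.uint8)
arch[1, 1:6] = 1
arch[2:6, 1] = 1
arch[2:6, 5] = 1
print(contour_height(arch), corrected_center(arch, 3.0, 2.54))
```

In the toy arch, the horizontal line through the centroid crosses both legs (two runs), so the vertical line is used and the corrected center lands on the top bar.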

2.2 Head Localization and Calculation of the Head Height

Our method first distinguishes two types of postures by the head position: (1) the head is on the top of the body; (2) the head is not on the top of the body. Because the skeleton data may be incorrect, we located the head by using the depth image and the skeleton data together. Note that the resolutions of the depth image and the skeleton image were both set to 512 × 424, because our method requires accurate pixel values. The judgment is based on (1) whether the head point lies in the human body area, and (2) whether the slope of the line connecting the head coordinate and the neck coordinate is less than −1 or greater than 1.

When the head is not on the top of the body, the posture is recognized by the BP neural network (see Sect. 2.5); otherwise, the posture is recognized according to the natural ratios of human body parts.
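The two head-position criteria can be illustrated with a short Python sketch. It assumes that both criteria must hold for the head to count as being on the top of the body, that coordinates are integer pixel positions in the 512 × 424 image, and that a perfectly vertical head-neck line counts as steep. The function name `head_on_top` is hypothetical.

```python
import numpy as np

def head_on_top(mask, head_xy, neck_xy):
    """Return True if the head point lies on the body mask and the
    head-neck line is steeper than 45 degrees (slope < -1 or > 1)."""
    hx, hy = head_xy
    nx, ny = neck_xy
    height, width = mask.shape
    # Criterion (1): the head point must fall inside the human body area.
    if not (0 <= hx < width and 0 <= hy < height) or not mask[hy, hx]:
        return False
    # Criterion (2): slope of the head-neck line.
    dx, dy = hx - nx, hy - ny
    if dx == 0:
        return dy != 0  # assumption: a vertical line is treated as steep
    return abs(dy / dx) > 1.0

body = np.ones((424, 512), dtype=np.uint8)
print(head_on_top(body, (256, 100), (258, 140)))  # steep line, head on body
print(head_on_top(body, (100, 300), (150, 305)))  # nearly horizontal line
```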

To obtain the head and neck information, we measured the related data of 100 healthy persons (50 males and 50 females, 18 to 60 years old) while they faced the Kinect in different directions. We derived the following equation by statistical analysis:

where \(H_{h}\) is the head height (taken from the depth image) and \(L_{hn}\) is the height difference between the head point and the neck point (taken from the skeleton image).

2.3 Estimation of Human Posture When the Head Is on the Top of the Body

Anthropometry shows that the parts of the human body stand in fairly stable ratios to one another. The Chinese National Standard GB10000-88 [1] and related research [18] indicate that these ratios have changed only slightly in the past 20 years.

We calculated the ratio of the posture height to the head height (data from [1]) for every percentile, as shown in Table 1; the average values of these ratios are given in the P column. The table shows that the differences among the three ratios are relatively large. However, it is difficult to separate the kneeling height from the sitting height. The postures are distinguished as follows: (1) if \(P \geqslant 6.5\), the posture is standing; (2) if \(6.0 > P \geqslant 5.0\), the posture is kneeling or sitting; (3) if \(4.5 > P \geqslant 3.3\), the posture is sitting cross-legged.
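The three threshold rules translate directly into a small decision function. In the sketch below, the function name and the label strings are illustrative. The stated rules leave the intervals 6.0 to 6.5, 4.5 to 5.0 and below 3.3 uncovered, so returning "undetermined" for those values is an assumption.

```python
def classify_by_ratio(posture_height, head_height):
    """Classify a head-on-top posture from P = posture height / head height."""
    if head_height <= 0:
        raise ValueError("head_height must be positive")
    p = posture_height / head_height
    if p >= 6.5:
        return "standing"
    if 5.0 <= p < 6.0:
        return "kneeling_or_sitting"
    if 3.3 <= p < 4.5:
        return "sitting_cross_legged"
    # Assumption: values outside the three stated intervals are left undecided.
    return "undetermined"

# Heights in pixels, measured in the same depth image.
print(classify_by_ratio(350, 50))  # P = 7.0 → standing
print(classify_by_ratio(260, 50))  # P = 5.2 → kneeling_or_sitting
print(classify_by_ratio(200, 50))  # P = 4.0 → sitting_cross_legged
```

Because only the ratio matters, the two heights can be in any unit as long as both use the same one.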

2.4 Distinguishing the Postures of Kneeling or Sitting by Specific Points

We obtained two coordinates: \(P_{h}(x_{h},y_{h},z_{h})\) corresponds to the central point \(D_{h}\) of the head, and \(P_{e}(x_{e},y_{e},z_{e})\) corresponds to the bottom point \(D_{e}\) of the human area. Our method distinguishes the kneeling and sitting postures by the 3D spatial relation of the two coordinates. The head radius was set as:

where \(H_{h}\) is the head height, \(W_{h}\) is the head width, \(L_{h}\) is the head length.

In the 2D image, \(D_{h}\) is the center of the head in the human body area. In 3D space, \(P_{h}\) is the center point of the head corresponding to \(D_{h}\), with coordinate \((x_{h},y_{h},z_{h})\), and \(P_{hs}\) is the point on the head surface corresponding to \(D_{h}\), with coordinate \((x_{hs},y_{hs},z_{hs})\). \(P_{h}\) can be calculated by the following equation:

As shown in Fig. 3(a), \(P_{e}\) is the 3D point corresponding to the bottom point of the human body area in the 2D image. \(P_{h^{'}}\) is the projection of \(P_{h}\) onto the X-Z plane through \(P_{e}\), so its coordinate is \((x_{h},y_{e},z_{h})\). The distance \(L_{eh^{'}}\) between \(P_{e}\) and \(P_{h^{'}}\) is calculated by Eq. (4).
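Because \(P_{e}\) and \(P_{h^{'}}\) share the same y-coordinate \(y_{e}\), the distance between them reduces to a Euclidean distance in the X-Z plane. A minimal Python sketch of this distance and of its comparison against one head height, assuming camera-space coordinates in metres; the function names and sample values are hypothetical:

```python
import math

def planar_distance(p_e, p_h):
    """Distance between P_e and the projection of P_h onto the X-Z plane
    through P_e. Points are (x, y, z) tuples; y does not contribute."""
    x_e, _, z_e = p_e
    x_h, _, z_h = p_h
    return math.hypot(x_e - x_h, z_e - z_h)

def bottom_point_is_foot(p_e, p_h, head_height):
    """P_e is taken as the foot when the planar distance exceeds one head height."""
    return planar_distance(p_e, p_h) > head_height

p_head = (0.0, 0.5, 2.0)       # head center P_h
p_bottom = (0.3, -0.8, 2.4)    # bottom point P_e
print(planar_distance(p_bottom, p_head))
print(bottom_point_is_foot(p_bottom, p_head, head_height=0.23))
```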

\(P_{e}\) can be confirmed as the foot when \(L_{eh^{'}}\) exceeds a threshold (one head height \(H_{h}\)). However, the posture may then be either kneeling or sitting, as shown in Fig. 3(b) and (c). We propose two ways to tell the two postures apart:

(I) Draw a circle of radius \(H_{h}\) whose center lies directly above \(P_{e}\), at a distance of \(H_{h}\) from \(P_{e}\). If there are no human body parts in the circle, the posture is kneeling; otherwise, the posture is sitting.

(II) Draw a circle of radius \(R_{h}\) whose center lies directly above \(P_{h^{'}}\), at a distance of \(H_{h}\) from \(P_{h^{'}}\). If there are no human body parts in the circle, the posture is sitting; otherwise, the posture is kneeling.

2.5 Posture Recognition by Using BP Neural Network

BP Neural Network

A BP neural network is a feed-forward multilayer network trained with the error backpropagation (BP) algorithm. It comprises one input layer, one or more hidden layers and one output layer. A large number of hidden layers requires considerable training time. According to the Kolmogorov theorem, a three-layer BP network (input, hidden and output layers) can approximate any continuous function [3, 6], given a reasonable structure and appropriate weights. Therefore, we selected a three-layer BP neural network.

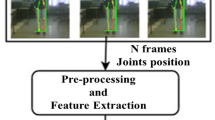

Extraction of Feature Vectors

We chose the feature vectors and transformed them from the Cartesian coordinate system to the polar coordinate system by using the method of [15], as shown in Fig. 4(a). To distinguish the feature vectors better, we need to specify their order. Therefore, we set up a disk with four regions, as shown in Fig. 4(b).

Because the feature vectors of the lying or bending posture must fall in Regions 1, 2, 3 and 4, the order of the feature vectors can be arranged as follows:

(1) In Region 1, if there are no feature vectors, we set \(L_{1}=0\) and \(T_1=0\). Otherwise, the feature vector whose angle is closest to \(180^{\circ }\) is called \(V_1\), and we set \(L_1=R_1\) and \(T_1=\theta _1\).

(2) In Region 2, if there are no feature vectors, we set \(L_2=0\) and \(T_2=0\). Otherwise, the feature vector whose angle is closest to \(360^{\circ }(0^{\circ })\) is called \(V_2\), and we set \(L_2=R_2\) and \(T_2=\theta _2\).

(3) In Region 3, if there are no feature vectors, we set \(L_3=0\) and \(T_3=0\). Otherwise, the feature vector whose angle is closest to \(315^{\circ }\) is called \(V_3\), and we set \(L_3=R_3\) and \(T_3=\theta _3\).

(4) In Region 4, if there are no feature vectors, we set \(L_4=0\) and \(T_4=0\). Otherwise, the feature vector whose angle is closest to \(225^{\circ }\) is called \(V_4\), and we set \(L_4=R_4\) and \(T_4=\theta _4\).

This completes the ordering of the feature vectors.

Figure 5 shows the ordered feature vectors.
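The ordering rule can be sketched in Python as follows. The sketch assumes that each feature vector has already been assigned to one of the four regions of Fig. 4(b) (the region boundaries are not restated here) and that "closest" is measured as a circular angular distance, so that 350° counts as 10° away from 360°(0°). The names `REFERENCE_ANGLES`, `angular_gap` and `order_feature_vectors` are hypothetical.

```python
# Reference angle (degrees) for each region, as listed in rules (1) to (4).
REFERENCE_ANGLES = {1: 180.0, 2: 0.0, 3: 315.0, 4: 225.0}

def angular_gap(a, b):
    """Smallest absolute difference between two angles in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)

def order_feature_vectors(vectors_by_region):
    """vectors_by_region maps a region number to a list of (R, theta) pairs.
    Returns [(L_1, T_1), ..., (L_4, T_4)], using (0, 0) for empty regions."""
    ordered = []
    for region in (1, 2, 3, 4):
        candidates = vectors_by_region.get(region, [])
        if not candidates:
            ordered.append((0.0, 0.0))
            continue
        ref = REFERENCE_ANGLES[region]
        ordered.append(min(candidates, key=lambda v: angular_gap(v[1], ref)))
    return ordered

sample = {1: [(80, 170), (60, 120)], 2: [(90, 350), (40, 30)], 4: [(50, 230)]}
print(order_feature_vectors(sample))
```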

We set \(\overline{R_{i}} = L_{i}/R_{max}\) and \(\overline{\theta _{i}} =T_{i}/360\), where \(R_{max}\) denotes the maximum of all R values. In this way, the features are ratios regardless of the height of the human body area, so our method can be applied to different persons. As shown in Table 2, the eight feature values are the inputs of the BP neural network.
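The normalization can be sketched as below. Two points are assumptions rather than statements from the text: \(R_{max}\) is taken over the four selected lengths \(L_{i}\), and the eight inputs are laid out as the four normalized lengths followed by the four normalized angles. The function name is hypothetical.

```python
def build_network_input(ordered):
    """ordered: [(L_1, T_1), ..., (L_4, T_4)] with angles in degrees.
    Returns eight values: L_i / R_max followed by T_i / 360."""
    r_max = max(length for length, _ in ordered)
    if r_max == 0:
        return [0.0] * 8  # no feature vector in any region
    lengths = [length / r_max for length, _ in ordered]
    angles = [theta / 360.0 for _, theta in ordered]
    return lengths + angles

print(build_network_input([(50, 180), (100, 90), (0, 0), (25, 270)]))
# → [0.5, 1.0, 0.0, 0.25, 0.5, 0.25, 0.0, 0.75]
```

Dividing by \(R_{max}\) removes the dependence on how large the person appears in the image, which is what allows the same network to serve different persons and distances.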

The Training of the BP Neural Network

We conducted many experiments based on several empirical formulas in [3, 4, 6] and set the number of hidden-layer neurons to 5. As shown in Fig. 6(a), the eight types of posture samples are the training data.

In this paper, the learning step size (learning rate) is set to 0.01 and the training target accuracy is set to 0.001. As shown in Fig. 6(b), the best training performance is 0.009805 at epoch 59.
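For illustration, a three-layer network with 8 inputs and 5 hidden neurons can be trained by backpropagation as in the following NumPy sketch. It uses the stated learning rate (0.01) and target error (0.001), but several choices are assumptions: sigmoid activations, plain batch gradient descent, two output neurons (one per posture), and synthetic data in place of the real samples. It is not the training procedure used in the experiments and is not expected to reproduce the reported training curve.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_bp(X, Y, hidden=5, lr=0.01, goal=1e-3, max_epochs=2000, seed=0):
    """Train an input-hidden-output network with batch backpropagation.
    Returns the parameters and the per-epoch mean squared error."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 0.5, (X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.5, (hidden, Y.shape[1]))
    b2 = np.zeros(Y.shape[1])
    history = []
    for _ in range(max_epochs):
        H = sigmoid(X @ W1 + b1)          # forward pass: hidden layer
        O = sigmoid(H @ W2 + b2)          # forward pass: output layer
        err = O - Y
        history.append(float(np.mean(err ** 2)))
        if history[-1] <= goal:           # stop once the target error is met
            break
        dO = err * O * (1.0 - O)          # error signal at the output layer
        dH = (dO @ W2.T) * H * (1.0 - H)  # error propagated back to the hidden layer
        W2 -= lr * (H.T @ dO)
        b2 -= lr * dO.sum(axis=0)
        W1 -= lr * (X.T @ dH)
        b1 -= lr * dH.sum(axis=0)
    return (W1, b1, W2, b2), history

def predict(params, X):
    W1, b1, W2, b2 = params
    return sigmoid(sigmoid(X @ W1 + b1) @ W2 + b2)

# Synthetic stand-in data: two well-separated clusters of 8-value inputs.
rng = np.random.default_rng(1)
X = np.vstack([0.2 + 0.05 * rng.standard_normal((20, 8)),
               0.8 + 0.05 * rng.standard_normal((20, 8))])
Y = np.vstack([np.tile([1.0, 0.0], (20, 1)), np.tile([0.0, 1.0], (20, 1))])
params, history = train_bp(X, Y)
print(len(history), history[0], history[-1])
```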

3 Experimental Results and Discussion

The hardware environment is as follows: a Lenovo E51 laptop computer with an Intel® Core™ i7-6500 CPU @ 2.60 GHz and 16 GB of memory, and a Kinect 2.0 for Windows. System environment: 64-bit Windows 10 Enterprise. Software environment: Visual Studio.net 2012 and Kinect SDK 2.0.

We recruited four healthy adults (2 male and 2 female) of different heights and weights. One male and one female among them not only took part in all the experiments, but also served as the models for the training samples of the BP neural network. The Kinect v2 was placed 80 cm above the ground, and the participants were 2.0 m to 4.2 m away from it.

Two participants (1 male and 1 female) posed two postures (bending and lying) in four directions (\(\pm 45^{\circ }\) and \(\pm 90^{\circ }\)), and each participant posed 5 times in every direction. The resulting eight types of input samples (as shown in Fig. 6(a)) were fed to the BP neural network for training, and the training result was saved.

We divided the participants into three sets: Set1 contains the models who posed for the training samples, Set2 contains the other participants, who did not pose for the training samples, and Set3 contains all the participants. We recognized six postures (standing, sitting cross-legged, kneeling, sitting, lying, and bending) of each participant in two environments (indoor and outdoor). Each participant faced the Kinect v2 in different directions and posed the six postures several times. The directions and real scenes of the postures are shown in Fig. 7. The experimental statistics are shown in Table 3, where Average denotes the average accuracy of the six postures in one experiment, and Accuracy i \((i=1,2,3)\) denotes the accuracy of one posture in the \(i\)-th set.

Our program comprises five parts: Parts 1 to 4 correspond to Sects. 2.1–2.4, and Part 5 corresponds to the BP neural network (the recognition of bending and lying). Table 4 shows the running time of the program. The average runtime is within 30 ms, which meets real-time requirements.

The method of [15] used the ratio of the upper body width to the lower body width to distinguish the kneeling posture with an accuracy of 99.69%, but the subject must kneel in one of several fixed directions (\(\pm 45^{\circ }\), \(\pm 90^{\circ }\)). The authors of [9] recognized human postures with four methods; the best accuracy, 100%, was obtained with a BP neural network, but the method can recognize only three postures (standing, lying and sitting), because it could not extract effective feature vectors for the other postures. The methods of [10] and [8] were each proposed to recognize 10 postures. The three postures (standing, sitting and bending) in our paper are similar to three postures in [10] (standing, sitting on chair, and bending forward) and three postures in [8] (standing up, sitting, and picking up). The method of [10] used a polynomial-kernel SVM classifier and the angular features of the postures, reaching an accuracy of 95.87%. The method of [8] was based on an HMM (hidden Markov model) and 3D joints from depth images.

We compared these methods with our method, as shown in Table 5.

However, our method has limitations. For example, when the person poses the bending or lying posture in certain directions relative to the Kinect, such as \(0^{\circ }\), the two postures may be misjudged, because the feature vectors are indistinct and cannot be extracted precisely.

4 Conclusions

This paper proposed a novel method that combines anthropometric knowledge with a BP neural network. The method can recognize six postures (standing, sitting cross-legged, kneeling, sitting, lying, and bending) when the person faces the Kinect in different directions, with an average recognition accuracy of 98.98%. We first judged the four postures in which the head is on the top of the body by using the ratios of body parts; the feature vectors of the other two postures (lying and bending) were then extracted, and these two postures were recognized by the BP neural network. However, our method can only recognize the lying and bending postures in a small number of directions (from \(\pm 45^{\circ }\) to \(\pm 90^{\circ }\)), because some body joints are occluded when only one Kinect is used, which makes the feature vectors difficult to extract. In future work, we will use two or more Kinects, so that more human postures can be recognized when the person faces any of the Kinects in any direction.

References

1. China National Institute of Standardization: Human Dimensions of Chinese Adults (GB10000-88). Standards Press of China (1988)

2. Hua, L., Chao, Z., Wei, Q., Cheng, H., Hong-Yu, Z., Ting-Ting, L.: An automatic matting algorithms of human figure based on kinect depth image. J. Changchun Univ. Sci. Technol. 39, 81–84 (2016)

3. Hua-Yu, S., Zhao-Xia, W., Cheng-Yao, G., Juan, Q., Fu-Bin, Y., Wei, X.: Determining the number of BP neural network hidden layer units. J. Tianjin Univ. Technol. 24, 13–15 (2008)

4. Jadid, M.N., Fairbairn, D.R.: The application of neural network techniques to structural analysis by implementing an adaptive finite-element mesh generation. AI EDAM: Artif. Intell. Eng. Design Anal. Manuf. 8(3), 177–191 (1994)

5. Le, T.L., Nguyen, M.Q., Nguyen, T.T.M.: Human posture recognition using human skeleton provided by kinect. In: International Conference on Computing, Management and Telecommunications, pp. 340–345 (2013)

6. Li-Qun, H.: Artificial Neural Network Tutorial. Beijing University of Posts and Telecommunications Press (2006)

7. Lu, X., Chia-Chih, C., Aggarwal, J.K.: Human detection using depth information by kinect. In: Computer Vision and Pattern Recognition Workshops, pp. 15–22 (2011)

8. Lu, X., Chia-Chih, C., Aggarwal, J.K.: View invariant human action recognition using histograms of 3D joints. In: Computer Vision and Pattern Recognition Workshops, pp. 20–27 (2012)

9. Patsadu, O., Nukoolkit, C., Watanapa, B.: Human gesture recognition using kinect camera. In: International Joint Conference on Computer Science and Software Engineering, pp. 28–32 (2012)

10. Pisharady, P.K., Saerbeck, M.: Kinect based body posture detection and recognition system. In: Proceedings of SPIE - The International Society for Optical Engineering, vol. 8768, no. 1, pp. 87687F–87687F-5 (2013)

11. Schwarz, L.A., Mkhitaryan, A., Mateus, D., Navab, N.: Human skeleton tracking from depth data using geodesic distances and optical flow. Image Vis. Comput. 30(3), 217–226 (2012)

12. Shih-Chung, H., Jun-Yang, H., Wei-Chia, K., Chung-Lin, H.: Human body motion parameters capturing using kinect. Mach. Vis. App. 26(7–8), 919–932 (2015)

13. Shotton, J., Fitzgibbon, A., Cook, M., Sharp, T., Finocchio, M., Moore, R., Kipman, A., Blake, A.: Real-time human pose recognition in parts from single depth images. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 1297–1304 (2011)

14. Shotton, J., Kipman, A., Finocchio, M., Blake, A., Moore, R.: Real-time human pose recognition in parts from single depth images. Commun. ACM 56(1), 116–124 (2013)

15. Wen-June, W., Jun-Wei, C., Shih-Fu, H., Rong Jyue, W.: Human posture recognition based on images captured by the kinect sensor. Int. J. Adv. Robot. Syst. 13(2), 1 (2016)

16. Xiao, Z., Meng-Yin, F., Yi, Y., Ning-Yi, L.: 3D human postures recognition using kinect. In: International Conference on Intelligent Human-Machine Systems and Cybernetics, pp. 344–347 (2012)

17. Xin-Di, L., Yun-Long, W., Yan, H., Guo-Qiang, Z.: Research on the algorithm of human single joint point repair based on kinect (in Chinese). Tech. Autom. App. 35(4), 96–98 (2016)

18. Yan, Y., Jie, Y., Chun-Sheng, M., Jin-Huan, Z.: Analysis on difference in anthropometry dimensions between East and West human bodies. Stand. Sci. 7, 10–14 (2015)

Acknowledgments

This project is supported by the National Natural Science Foundation of China (Grant No. 61602058).

Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Li, B., Bai, B., Han, C., Long, H., Zhao, L. (2017). Novel Hybrid Method for Human Posture Recognition Based on Kinect V2. In: Yang, J., et al. Computer Vision. CCCV 2017. Communications in Computer and Information Science, vol 771. Springer, Singapore. https://doi.org/10.1007/978-981-10-7299-4_27

DOI: https://doi.org/10.1007/978-981-10-7299-4_27

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7298-7

Online ISBN: 978-981-10-7299-4

eBook Packages: Computer Science (R0)