Abstract

Face recognition is challenging because a person's face varies in appearance under different conditions. Meanwhile, conventional face representation methods often incur high computational complexity. To overcome these shortcomings, we propose a novel discriminative projection and representation method for face recognition. The method seeks a discriminative representation of the face image in a low-dimensional space and consists of two stages, namely face projection and face representation. In the face projection stage, a mapping matrix is produced by jointly maximizing the covariance of dissimilar samples and minimizing the covariance of similar samples. In the face representation stage, the representation result for each face image is obtained by minimizing the sum of the representation results of each class. The proposed method achieves two-fold discriminative properties and provides a computationally efficient algorithm. Experiments on diverse face datasets demonstrate that the proposed method outperforms the compared methods on the face recognition task.

Keywords

- Discriminant Projection

- Remaining Face Images

- Dual Augmented Lagrangian Method (DALM)

- Dis Cover Similarities

- FERET Face Dataset

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Identifying a person from a large number of face images is the principal task of face recognition [1]. To date, numerous methods have been proposed for solving the identification problem. Commonly used classification methods include k-nearest neighbor (k-NN) [2, 3], support vector machine (SVM) [4, 5], and sparse representation [6, 7]. Among these, representation-based methods have been an attractive research topic in the past decades because of their strong performance in classification tasks such as face recognition [8], gait recognition [9], action recognition [10], and image representation [11].

Adaptability is an important criterion for evaluating a recognition method in real-world applications. Conventional methods, such as SVM and neural networks, train a specific classifier from a given dataset, so these classifiers are vulnerable to face variations. Recently, the sparse representation classification (SRC) [12] method has provided an effective way to improve face recognition performance under diverse conditions. SRC classifies a test sample by finding the minimum residual between the sample and its linear representation by the training samples of each class. The weighting coefficients indicate the contribution of each class and form the sparse solution obtained via \(l_1\) regularization. However, \(l_1\) regularization usually requires an iterative numerical optimization, which imposes a heavy computational burden on real-time applications. Consequently, \(l_2\) regularization-based representation has been proposed to overcome this drawback. The collaborative representation classification (CRC) [13] method is a typical \(l_2\) regularization-based representation method and is computationally efficient because of its closed-form solution. A study [14] shows that sparsity cannot be well guaranteed if naive \(l_2\) regularization is directly applied to the classification model. Nevertheless, the study of CRC demonstrates that collaborative representation plays a more important role in classification than sparsity. In addition to CRC, robust regression for classification [15], two-phase test sample sparse representation [16], and discriminative sparse representation [17] are effective regularization-based classification methods. However, these methods still suffer from insufficient discriminative ability and a heavy computational burden.

To seek an efficient discriminative representation for face images, we propose in this paper a novel discriminative projection and representation method for face representation and recognition. The proposed method achieves two-fold discriminative properties through the design of its objective function and consists of two stages, face projection and face representation. In the face projection stage, the method produces a projection matrix by jointly minimizing the similar covariance and maximizing the dissimilar covariance; this matrix maps the face images into a discriminative low-dimensional space with minimum similarity among samples. Moreover, we employ quadruplets [18] to construct both covariances, which integrates the similarity of the samples and improves the distinctiveness of the projection matrix. In the face representation stage, we produce the discriminative representation of the test sample by minimizing the sum of the representation results of all classes in the low-dimensional space, which makes the representation results of different classes as weakly correlated as possible. The proposed method is also computationally efficient because both stages have closed-form solutions. Experiments are conducted to compare the proposed method with other state-of-the-art methods.

The remainder of the paper is organized as follows. Section 2 introduces related work. Section 3 describes the proposed method. Section 4 presents the experimental results, and Sect. 5 concludes the paper.

2 Related Works

In this section, we briefly introduce the background of \(l_2\) regularization-based representation and linear discriminant analysis (LDA). Let \(X=\{x_i \mid x_i \in \mathbb {R}^m,\ i=1,\dots ,n\}\) be a training set of \(n\) samples. If there are \(c\) classes and each class has \(s\) samples (so that \(n=cs\)), \(X\) can be written as the matrix \(X=[X_1,\dots ,X_j,\dots ,X_c]\), where \(X_j=[x_{s(j-1)+1}, \dots , x_{sj}]\), \(j=1,\dots ,c\), collects the samples of the \(j\)-th class. The vector \(y\) denotes a test sample.

2.1 \(l_2\) Regularization-Based Representation

We take CRC as an example to describe the classification procedure of \(l_2\) regularization-based representation. CRC represents a test sample as a linear combination of the training samples of all classes, which can be written as [13]

\[ y = q_1 x_1 + q_2 x_2 + \dots + q_n x_n = XQ \tag{1} \]

where \( q_i, i=1, \dots , n \), are the representation coefficients and \(Q=[q_1,\dots ,q_n]^T\).

The regularized least-squares solution of Eq. (1) is \(Q^*=(X^T X+\mu I)^{-1}X^T y\), where \(\mu \) is a small positive constant and \(I\) is the identity matrix; \(Q^*\) is also referred to as the representation coefficients. Let \(Q_i^*\) be the coefficient vector of the \(i\)-th class. The regularized class-specific representation residual \(r_i=\frac{||y-X_iQ_i^*||_2}{||Q_i^*||_2}\) is used for classification, and the label of \(y\) is obtained by \(label(y)=\arg \min _i r_i\).
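The CRC procedure above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the reference implementation of [13]; the function name `crc_classify`, the default value of `mu`, and the small constant guarding the division are assumptions made for the example.

```python
import numpy as np

def crc_classify(X, labels, y, mu=1e-3):
    """Collaborative representation classification (illustrative sketch).

    X: (m, n) matrix whose columns are training samples.
    labels: length-n sequence of class labels, one per column of X.
    y: length-m test sample.
    mu: small positive regularization constant (assumed default).
    """
    labels = np.asarray(labels)
    n = X.shape[1]
    # Closed-form coefficients Q* = (X^T X + mu I)^{-1} X^T y.
    Q = np.linalg.solve(X.T @ X + mu * np.eye(n), X.T @ y)
    best_label, best_r = None, np.inf
    for cls in np.unique(labels):
        idx = labels == cls
        # Regularized class-specific residual r_i = ||y - X_i Q_i|| / ||Q_i||.
        r = np.linalg.norm(y - X[:, idx] @ Q[idx]) / (np.linalg.norm(Q[idx]) + 1e-12)
        if r < best_r:
            best_label, best_r = cls, r
    return best_label, Q
```

Using `np.linalg.solve` instead of forming the inverse explicitly is a numerical convenience and does not change the solution.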

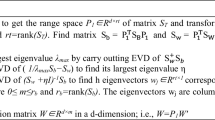

2.2 Linear Discriminant Analysis

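LDA aims to produce a projection by jointly minimizing the within-class scatter and maximizing the between-class scatter, and then projects the data into a low-dimensional space. LDA therefore first computes the between-class scatter matrix \({S_b}\) and the within-class scatter matrix \({S_w}\) as follows:

\[ S_b = \sum_{i=1}^{c} s\,(m_i - \bar{m})(m_i - \bar{m})^T \tag{2} \]

and

\[ S_w = \sum_{i=1}^{c} \sum_{x \in X_i} (x - m_i)(x - m_i)^T \tag{3} \]

where \({m_i}\) and \(\bar{m}\) are the mean vectors of the \(i\)-th class and of all samples, respectively. The objective function of LDA is [18]

\[ P^* = \arg \max _P \frac{tr(P^T S_b P)}{tr(P^T S_w P)} \tag{4} \]

where P is the projection matrix, and \(tr( \cdot )\) denotes the trace of a matrix. Finally, P is obtained by solving the following generalized eigenvalue problem:

\[ S_b p = \lambda S_w p \tag{5} \]

where \(\lambda \) is an eigenvalue and \(p\) is the corresponding eigenvector, which forms a column of P.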
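As an illustration of the LDA steps just described, the following sketch builds the two scatter matrices and solves the eigenvector problem with NumPy. It uses the standard class-size-weighted scatter definitions, and the use of a pseudo-inverse for \(S_w\) is an assumption added for numerical safety; `lda_projection` is a hypothetical helper name.

```python
import numpy as np

def lda_projection(X, labels, d):
    """Return an (m, d) LDA projection for samples stored as columns of X."""
    labels = np.asarray(labels)
    m = X.shape[0]
    mean_all = X.mean(axis=1, keepdims=True)
    S_b = np.zeros((m, m))
    S_w = np.zeros((m, m))
    for cls in np.unique(labels):
        X_c = X[:, labels == cls]
        mean_c = X_c.mean(axis=1, keepdims=True)
        diff = mean_c - mean_all
        S_b += X_c.shape[1] * (diff @ diff.T)   # between-class scatter
        centered = X_c - mean_c
        S_w += centered @ centered.T            # within-class scatter
    # Solve S_b p = lambda S_w p through pinv(S_w) S_b.
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(S_w) @ S_b)
    order = np.argsort(-eigvals.real)           # largest eigenvalues first
    return eigvecs[:, order[:d]].real
```

The columns of the returned matrix are the eigenvectors with the largest eigenvalues, that is, the directions with the highest ratio of between-class to within-class scatter.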

3 The Proposed Method

To obtain distinctiveness and efficiency simultaneously, our method represents a face image in a low-dimensional space by integrating discriminative projection and \({l_2}\) regularization-based representation. We therefore use a projection to exploit the discriminative information embedded in the low-dimensional space within the regularization-based representation framework. The proposed objective function is

\[ \min _{P,Q} \left\| {P^T}y - {P^T}XQ \right\| _2^2 + \alpha \sum \limits _{i = 1}^c \left\| {P^T}{X_i}{Q_i} \right\| _2^2 \tag{6} \]

where \(\alpha \) is a balance factor, \(Q = [{Q_1}, \ldots ,{Q_c}]\) is the representation coefficient with \({Q_i}\) the coefficients of the \(i\)-th class, and P is the projection matrix.

To solve for P and Q, we divide the optimization of model (6) into two independent stages, face projection and face representation:

- (1) Face projection: compute a projection matrix that transforms face images into low-dimensional features with minimum similar covariance and maximum dissimilar covariance. The projection matrix has a closed-form solution.

- (2) Face representation: produce a discriminative representation for each sample in the new low-dimensional space via \({l_2}\) regularization. This discriminative representation is obtained through a special design of the regularization term, and this stage also has a closed-form solution.

3.1 Face Projection

The original face image set \(X = \{ {x_i} \mid {x_i} \in {\mathbb {R}^m},\ i = 1, \cdots ,n\} \) lies in an m-dimensional space. To find a representation of X in a d-dimensional space, we take the mapping \(f:{\mathbb {R}^m} \rightarrow {\mathbb {R}^d}\), \(d < m\), as the projection function that yields low-dimensional features. In this paper, instead of directly obtaining the projection via (6), we find the discriminative projection by solving a subspace problem that admits a closed-form solution. We use the projection matrix P to denote the projection f, which is obtained by jointly minimizing the similar covariance and maximizing the dissimilar covariance. To calculate these two covariances, we construct a similar set S of pairs of samples from the same class and a dissimilar set D of pairs of samples from different classes. These two sets can be expressed as

and

where \(D(\cdot ,\cdot )\) is the distance between the two samples of a pair and \(\delta \) is a margin coefficient. Consequently, the projection matrix P can be obtained by minimizing the expectation over pairs from the similar set and maximizing the expectation over pairs from the dissimilar set, which maps the samples to a subspace with the minimum difference between similar samples and the maximum difference between dissimilar samples simultaneously. This is expressed as the following loss function:

where \(\mu \) is a balance parameter, and x and \(x'\) are the two samples of a pair.

To construct the similar and dissimilar sets, we extract quadruplets from the sample set. A quadruplet [18] consists of four samples \({x_i}\), \({x_j}\), \({x_k}\), and \({x_l}\) from the sample set that satisfy the following relation:

Thus, the within-class similar sample pair \(({x_i},{x_j})\) is used to construct the similar set S, and the between-class dissimilar sample pair \(({x_k},{x_l})\) is used to construct the dissimilar set D. As a result, Eq. (9) can be rewritten as
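The construction of the similar and dissimilar sets can be illustrated with a small sketch. It simplifies the procedure by enumerating all same-class and different-class index pairs, and it ignores the margin \(\delta \) and any quadruplet sampling strategy, both of which are simplifying assumptions; `build_pair_sets` is a hypothetical helper name.

```python
from itertools import combinations

def build_pair_sets(labels):
    """Split all index pairs into a similar set S and a dissimilar set D."""
    S, D = [], []
    for i, j in combinations(range(len(labels)), 2):
        # Same label -> similar pair, different label -> dissimilar pair.
        (S if labels[i] == labels[j] else D).append((i, j))
    return S, D

# Four samples, two per class.
S, D = build_pair_sets([0, 0, 1, 1])
```

For large datasets the number of pairs grows quadratically, so in practice a subset of pairs would usually be sampled.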

It is observed that

and

where \(\Sigma _S = E\{ ({x_i} - {x_j}){({x_i} - {x_j})^T} \mid S \}\) and \(\Sigma _D = E\{ ({x_k} - {x_l}){({x_k} - {x_l})^T} \mid D \}\) are the covariance matrices of similar pairs and dissimilar pairs, respectively. This leads Eq. (11) to
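The step from expected projected distances to covariance matrices relies on a standard trace identity. The following is a sketch in the notation above, writing \(\Sigma _S\) and \(\Sigma _D\) for the covariance matrices of similar and dissimilar pairs; it is not necessarily the exact form of the authors' equations.

```latex
\begin{aligned}
E\{\, \| P^T (x_i - x_j) \|_2^2 \mid S \,\}
  &= E\{\, \mathrm{tr}\big( P^T (x_i - x_j)(x_i - x_j)^T P \big) \mid S \,\}
   = \mathrm{tr}\big( P^T \Sigma_S P \big), \\
E\{\, \| P^T (x_k - x_l) \|_2^2 \mid D \,\}
  &= \mathrm{tr}\big( P^T \Sigma_D P \big).
\end{aligned}
```

Both lines use \(\| a \|_2^2 = tr(a a^T)\) and the linearity of the expectation and the trace.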

Finally, we have

where \(\Sigma _R = \Sigma _S \Sigma _D^{ - 1}\) is a ratio matrix between the similar and dissimilar covariance matrices. \(\Sigma _R\) is a semidefinite matrix and can be decomposed by singular value decomposition (SVD) to obtain an orthogonal matrix. Thus, the orthogonal matrix P maps the samples into the space spanned by the eigenvectors associated with the d smallest eigenvalues, which has the minimum similarity among samples.
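A minimal NumPy sketch of this projection stage is given below. It follows the description literally: it forms the ratio matrix, decomposes it by SVD, and keeps the d directions with the smallest singular values. The ridge term `eps` that keeps the dissimilar covariance invertible and the function name `discriminative_projection` are assumptions for the example.

```python
import numpy as np

def discriminative_projection(X, S, D, d, eps=1e-6):
    """Sketch of the face projection stage.

    X: (m, n) samples as columns; S, D: lists of index pairs; d: target dimension.
    eps: small ridge added to the dissimilar covariance (assumption).
    """
    def pair_covariance(pairs):
        # Average outer product of pairwise differences.
        diffs = np.stack([X[:, a] - X[:, b] for a, b in pairs], axis=1)
        return diffs @ diffs.T / len(pairs)

    sigma_s = pair_covariance(S)
    sigma_d = pair_covariance(D) + eps * np.eye(X.shape[0])
    sigma_r = sigma_s @ np.linalg.inv(sigma_d)   # ratio matrix
    # SVD returns singular values in descending order,
    # so the last d columns of U belong to the d smallest ones.
    U, _, _ = np.linalg.svd(sigma_r)
    return U[:, -d:]
```

The returned matrix has orthonormal columns, so projected features are obtained as `P.T @ X`.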

3.2 Face Representation

In this stage, face images are represented in the low-dimensional space. Given the projection matrix P, the training samples X and the test sample y are projected onto the low-dimensional space via \(F = {P^T}X\) and \(v = {P^T}y\). The objective function in Eq. (6) is then rewritten as

\[ \min _Q \left\| {v - FQ} \right\| _2^2 + \alpha \sum \limits _{i = 1}^c {\left\| {{F_i}{Q_i}} \right\| _2^2} \tag{16} \]

where \({F_i} = {P^T}{X_i}\) contains the projected training samples of the \(i\)-th class.

Because Eq. (16) is convex and differentiable, the optimal solution is obtained by taking the derivative with respect to Q and setting it to 0. The computational procedure is as follows:

Let \(f(Q) = \left\| {v - FQ} \right\| _2^2 + \alpha \sum \limits _{i = 1}^c {\left\| {{F_i}{Q_i}} \right\| _2^2} \). The derivative of the first term of f(Q) with respect to Q is

\[ \frac{d}{{dQ}}\left\| {v - FQ} \right\| _2^2 = - 2{F^T}(v - FQ) \tag{17} \]

Next, we determine the derivative of the second term, \(g(Q) = \alpha \sum \limits _{i = 1}^c {\left\| {{F_i}{Q_i}} \right\| _2^2}\). Because g(Q) depends on Q only through the class-wise blocks \({Q_k}\), we compute the partial derivatives \(\frac{{\partial g}}{{\partial {Q_k}}}\), \(k = 1, \ldots ,c\), and stack them to obtain \(\frac{{dg}}{{dQ}}\).

g(Q) is composed of c terms, of which only the k-th depends on \({Q_k}\). The c partial derivatives are

\[ \frac{{\partial g}}{{\partial {Q_k}}} = 2\alpha F_k^T{F_k}{Q_k}, \quad k = 1, \ldots ,c \tag{18} \]

Thus the derivative \(\frac{{dg}}{{dQ}}\) is

\[ \frac{{dg}}{{dQ}} = 2\alpha \begin{pmatrix} F_1^T{F_1}{Q_1} \\ \vdots \\ F_c^T{F_c}{Q_c} \end{pmatrix} \tag{19} \]

Let \(M = \begin{pmatrix} F_1^T{F_1} & \cdots & O \\ \vdots & \ddots & \vdots \\ O & \cdots & F_c^T{F_c} \end{pmatrix}\); then the derivative of the second term is \(\frac{{dg}}{{dQ}} = 2\alpha MQ\).

Combining the derivatives of the first and second terms, the derivative of Eq. (16) is \(\frac{{df}}{{dQ}} = - 2{F^T}(v - FQ) + 2\alpha MQ\). Setting the derivative to zero, we have

\[ ({F^T}F + \alpha M)Q = {F^T}v \tag{20} \]

which leads to

\[ Q = {({F^T}F + \alpha M)^{ - 1}}{F^T}v \tag{21} \]

As a result, the optimal Q has a closed-form solution.
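The closed-form solution can be sketched with NumPy as follows. The sketch minimizes \(\left\| {v - FQ} \right\| _2^2 + \alpha \sum _i \left\| {{F_i}{Q_i}} \right\| _2^2\) directly, so any constant factor multiplying M is assumed to be absorbed into `alpha`; `representation_coefficients` is a hypothetical helper name, and the linear system is assumed to be non-singular.

```python
import numpy as np

def representation_coefficients(F, labels, v, alpha):
    """Solve min_Q ||v - F Q||^2 + alpha * sum_i ||F_i Q_i||^2 in closed form.

    F: (d, n) projected training samples as columns.
    labels: length-n class labels; v: length-d projected test sample.
    """
    labels = np.asarray(labels)
    n = F.shape[1]
    M = np.zeros((n, n))
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        # Place the block F_i^T F_i on the diagonal of M.
        M[np.ix_(idx, idx)] = F[:, idx].T @ F[:, idx]
    # Stationarity condition: (F^T F + alpha M) Q = F^T v.
    return np.linalg.solve(F.T @ F + alpha * M, F.T @ v)
```

If the number of training samples exceeds the feature dimension, the system matrix can become singular; adding a small multiple of the identity would be one way to handle that case, although the text does not discuss it.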

3.3 Classification

The proposed method classifies samples in the new low-dimensional space. Thus, the training samples X and the test sample y are first projected to F and v by the projection matrix P. We then use Eq. (21) to compute the representation coefficient Q of the projected test sample v. Finally, the test sample v is assigned to the k-th class according to the following rule:

Algorithm 1 summarizes the full classification procedure.
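A minimal sketch of the final decision step is shown below. It assumes that the rule selects the class with the smallest class-wise reconstruction residual \(\left\| {v - {F_k}{Q_k}} \right\| _2\), by analogy with the CRC residual in Sect. 2.1; this residual form and the name `classify_by_residual` are assumptions for illustration.

```python
import numpy as np

def classify_by_residual(F, labels, v, Q):
    """Assign v to the class whose samples reconstruct it with the least error."""
    labels = np.asarray(labels)
    classes = np.unique(labels)
    residuals = [
        np.linalg.norm(v - F[:, labels == c] @ Q[labels == c])
        for c in classes
    ]
    return classes[int(np.argmin(residuals))]
```

Here `F`, `v`, and `Q` are the projected training samples, the projected test sample, and its representation coefficients as defined in Sect. 3.2.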

3.4 Computational Complexity Analysis

The major computational cost of the proposed method lies in matrix operations. For an \(n \times n\) input matrix, the computation consists of two parts: computing the projection matrix P and optimizing the representation coefficient Q. Obtaining the projection matrix costs about \(O({n^3})\). To obtain the representation coefficient Q, Eq. (21) is evaluated for each test sample of dimension d, so the cost for k test samples is about \(O(d{n^2} + kdn)\). The total computational complexity of the proposed method is therefore about \(O({n^3} + d{n^2} + kdn)\). Note that the projection matrix and the matrix in Eq. (21) that maps a projected test sample to its representation coefficients are computed only once and reused for all test samples. Although the proposed method needs two computational steps, it is more efficient than iterative methods.
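The reuse across test samples can be made concrete with a short sketch: the matrix that maps a projected test sample to its coefficients is computed once, and all k test samples are then handled by a single matrix product. The helper names `precompute_solver` and `represent_all` are hypothetical, and `M` denotes the block-diagonal matrix from Sect. 3.2 with any constant factor absorbed into `alpha`.

```python
import numpy as np

def precompute_solver(F, M, alpha):
    """Return T = (F^T F + alpha M)^{-1} F^T, computed once for all test samples."""
    return np.linalg.solve(F.T @ F + alpha * M, F.T)

def represent_all(T, V):
    """Coefficients for k projected test samples stored as columns of V."""
    return T @ V  # one (n, d) by (d, k) product, about k*d*n operations
```

The one-time solve accounts for the \(d{n^2}\)-type term in the complexity estimate, and the product accounts for the \(kdn\) term.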

4 Experimental Results

In this section, we conduct experiments on face datasets to demonstrate the effectiveness of the proposed method. The datasets are FERET [19], Extended Yale B [20], and CMU Multi-PIE [27]. Several state-of-the-art recognition methods, including CRC [13], L1LS [21], FISTA [24], Homotopy [22], the dual augmented Lagrangian method (DALM) [23], INNC [25], and a fusion classification method (FCM) [26], are used for comparison. Linear discriminant analysis [18] combined with the nearest neighbor classifier is also included in our experiments.

4.1 Dimension and Parameter Selections

The feature dimension is an important factor affecting classification accuracy, which usually varies greatly across dimensions. However, we find that the performance of the proposed method is not greatly affected by the dimension. Figure 1 shows the classification accuracy under different dimensions on the FERET dataset. The proposed method achieves nearly stable classification accuracy across dimensions for different numbers of training samples per class. In our experiments, the feature dimension ranges over [200, 1000].

The proposed algorithm has only one parameter, \(\alpha \), which balances the two terms of the objective function. We choose the optimal value of \(\alpha \) for each dataset among five candidate values: 0.01, 0.1, 1, 10, and 100. This search quickly finds the value that leads to the best classification accuracy. The proposed method maintains stable classification performance when \(\alpha \) varies. Figure 2 presents the relationship between \(\alpha \) and the classification accuracy on the FERET dataset. Almost stable classification rates are obtained when \(\alpha \) varies within a proper range for a given dataset.
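The candidate search amounts to a small grid search, sketched below. The `evaluate` callback stands for a full train-and-test run that returns an accuracy; the toy accuracy function used here is purely hypothetical and does not reproduce any result from the paper.

```python
def select_alpha(candidates, evaluate):
    """Return the candidate with the highest accuracy, plus all scores."""
    scores = {alpha: evaluate(alpha) for alpha in candidates}
    best = max(scores, key=scores.get)
    return best, scores

def toy_accuracy(alpha):
    # Hypothetical stand-in for a real evaluation run; peaks at alpha = 10.
    return 1.0 - abs(alpha - 10) / 1000

best_alpha, scores = select_alpha([0.01, 0.1, 1, 10, 100], toy_accuracy)
```

With only five candidates, the cost of the search is five evaluations of the closed-form pipeline.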

4.2 Experiments on the FERET Face Dataset

This experiment is conducted on the FERET face dataset [19], which contains 1400 face images of 200 subjects. Figure 3 shows some face images from this dataset. Every face image was resized to \(40 \times 40\) pixels. The first 3, 4, and 5 face images of each subject were used as training samples, and the remaining face images were used as test samples. The parameter \(\alpha \) was set to 10 and the dimension to 1000. The experimental results are presented in Table 1. The proposed method achieves a better recognition rate than the other classification methods, which suggests that it captures more discriminative information for feature representation.
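The split protocol described above (the first few images of each subject for training, the rest for testing) can be sketched as follows. The helper `split_first_k` and the synthetic list of subject identifiers are assumptions for illustration; real image loading is omitted.

```python
def split_first_k(subject_ids, k):
    """Indices of the first k images of each subject (train) and the rest (test)."""
    seen, train, test = {}, [], []
    for idx, sid in enumerate(subject_ids):
        seen[sid] = seen.get(sid, 0) + 1
        # The first k occurrences of a subject go to training.
        (train if seen[sid] <= k else test).append(idx)
    return train, test

# 200 subjects with 7 images each, i.e. 1400 images in total.
ids = [s for s in range(200) for _ in range(7)]
train, test = split_first_k(ids, 3)
```

With 3 training images per subject this gives 600 training and 800 test images.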

4.3 Experiments on the Extended Yale B Face Dataset

The Extended Yale B face dataset [20] contains 2414 single frontal facial images of 38 individuals, captured under various controlled lighting conditions. The original image size was \(192 \times 168\) pixels. In our experiments, all images were cropped and resized to \(84 \times 96\) pixels. Figure 4 shows some face images from the Extended Yale B face dataset. The first 10, 20, 30, and 40 face images of each subject were used as training samples and the remaining face images as test samples. The parameter \(\alpha \) was set to 0.001 and the dimension to 1000. The experimental results are presented in Table 2. Compared with the other methods, the proposed method improves the recognition rate by nearly 10% under the different conditions. More importantly, these superior recognition rates are obtained at a lower dimension (1000) than the original dimension (8064) used by the other methods, which indicates that the proposed method captures more discriminative low-dimensional features under different conditions.

4.4 Experiments on the CMU Multi-PIE Face Dataset

In this subsection, we evaluate the performance of our method on the CMU Multi-PIE face dataset [27], which is composed of face images of 337 persons with variations in pose, expression, and illumination. Figure 5 shows some face images from this dataset. We select a subset of 249 persons under 20 different illumination conditions with a frontal pose and 7 different illumination conditions with a smiling expression. All images are cropped and resized to \(40 \times 30\) pixels. We choose the face images corresponding to the first 3, 5, 7, and 9 of the 20 illuminations, plus one of the 7 smiling images, as training samples and use the remaining images as test samples. The parameter \(\alpha \) is set to 0.00001 and the dimension to 1000. Table 3 lists the classification accuracy of the different methods on the four test sets. Our method obtains better classification accuracy than the other methods; in other words, it is more robust to variations in illumination, pose, and expression.

5 Conclusion

To seek an efficient discriminative representation for face images, we proposed a novel discriminative projection and representation method for face recognition. The method achieves effective and efficient recognition through the specific regularization term and the projection matrix of its objective function. The projection, produced by minimizing the similar covariance and maximizing the dissimilar covariance, yields features with minimum similarity among samples. The discriminative representation is obtained by minimizing the correlation of samples. The proposed method therefore possesses two-fold discriminative properties, which helps improve classification accuracy. In addition, it provides a computationally efficient algorithm for face recognition tasks.

References

1. Turk, M.A., Pentland, A.P.: Face recognition using eigenfaces. In: Proceedings IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 1991, pp. 586–591 (2002)

2. Cover, T., Hart, P.: Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 13(1), 21–27 (1967)

3. Prasad, J.R., Kulkarni, U.: Gujrati character recognition using weighted k-NN and mean 2 distance measure. Int. J. Mach. Learn. Cybernet. 6(1), 69–82 (2015)

4. Vapnik, V.N.: The Nature of Statistical Learning Theory. Springer, New York (1995)

5. Tian, Y., Qi, Z., Ju, X., Shi, Y., Liu, X.: Nonparallel support vector machines for pattern classification. IEEE Trans. Cybern. 44(7), 1067 (2014)

6. Zhang, Z., Xu, Y., Yang, J., Li, X., Zhang, D.: A survey of sparse representation: algorithms and applications. IEEE Access 3, 490–530 (2017)

7. Wagner, A., Wright, J., Ganesh, A., Zhou, Z., Mobahi, H., Ma, Y.: Toward a practical face recognition system: robust alignment and illumination by sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 34(2), 372–386 (2011)

8. Wright, J., Yang, A.Y., Ganesh, A., Sastry, S.S., Ma, Y.: Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 31(2), 210–227 (2008)

9. Lai, Z., Xu, Y., Jin, Z., Zhang, D.: Human gait recognition via sparse discriminant projection learning. IEEE Trans. Circ. Syst. Video Technol. 24(10), 1651–1662 (2014)

10. Liu, H., Tang, H., Xiao, W., Guo, Z.Y., Tian, L., Gao, Y.: Sequential bag-of-words model for human action classification. CAAI Trans. Intell. Technol. 1(2), 125–136 (2016)

11. Wright, J., Ma, Y., Mairal, J., Sapiro, G., Huang, T.S., Yan, S.: Sparse representation for computer vision and pattern recognition. Proc. IEEE 98(6), 1031–1044 (2010)

12. Zhang, L., Yang, M., Feng, X.: Sparse representation or collaborative representation: which helps face recognition? In: IEEE International Conference on Computer Vision, pp. 471–478 (2012)

13. Baraniuk, R.G.: Compressive sensing. IEEE Sig. Process. Magazine 24(4), 118–121 (2007)

14. Naseem, I., Togneri, R., Bennamoun, M.: Robust regression for face recognition. In: International Conference on Pattern Recognition, pp. 1156–1159 (2010)

15. Xu, Y., Zhang, D., Yang, J., Yang, J.Y.: A two-phase test sample sparse representation method for use with face recognition. IEEE Trans. Circ. Syst. Video Technol. 21(9), 1255–1262 (2011)

16. Xu, Y., Zhong, Z., Yang, J., You, J., Zhang, D.: A new discriminative sparse representation method for robust face recognition via \(l_{2}\) regularization. IEEE Trans. Neural Netw. Learn. Syst. 28(10), 2233–2242 (2017)

17. Belhumeur, P.N., Hespanha, J.P., Kriegman, D.J.: Eigenfaces vs. fisherfaces: recognition using class specific linear projection. In: Buxton, B., Cipolla, R. (eds.) ECCV 1996. LNCS, vol. 1064, pp. 43–58. Springer, Heidelberg (1996). https://doi.org/10.1007/BFb0015522

18. Law, M.T., Thome, N., Cord, M.: Learning a distance metric from relative comparisons between quadruplets of images. Int. J. Comput. Vision 121, 1–30 (2016)

19. Phillips, P.J., Moon, H., Rizvi, S.A., Rauss, P.J.: The FERET evaluation methodology for face-recognition algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 22(10), 1090–1104 (2000)

20. Georghiades, A.S., Belhumeur, P.N., Kriegman, D.J.: From few to many: illumination cone models for face recognition under variable lighting and pose. IEEE Trans. Pattern Anal. Mach. Intell. 23(6), 643–660 (2001)

21. Kim, S.J., Koh, K., Lustig, M., Boyd, S., Gorinevsky, D.: An interior-point method for large-scale \(l_1\)-regularized least squares. IEEE J. Sel. Top. Sig. Process. 1(4), 606–617 (2007)

22. Yang, A.Y., Sastry, S.S., Ganesh, A., Ma, Y.: Fast \(l_1\)-minimization algorithms and an application in robust face recognition: a review. In: IEEE International Conference on Image Processing, pp. 1849–1852 (2010)

23. Yang, A.Y., Zhou, Z., Balasubramanian, A.G., Sastry, S.S., Ma, Y.: Fast \(l_1\) minimization algorithms for robust face recognition. IEEE Trans. Image Process. 22(8), 3234 (2013)

24. Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

25. Xu, Y., Zhu, Q., Chen, Y., Pan, J.S.: An improvement to the nearest neighbor classifier and face recognition experiments. Int. J. Innov. Comput. Inf. Control 9(2), 543–554 (2013)

26. Liu, Z., Pu, J., Huang, T., Qiu, Y.: A novel classification method for palmprint recognition based on reconstruction error and normalized distance. Appl. Intell. 39(2), 307–314 (2013)

27. Gross, R., Matthews, I., Cohn, J., Kanade, T., Baker, S.: Multi-PIE. Image Vis. Comput. 28(5), 807–813 (2010)

Acknowledgement

This work was supported in part by the National Natural Science Foundation of China under Grant 61332011, and partially supported by Guangdong Province high-level personnel of special support program (No. 2016TX03X164).

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Zhong, Z., Zhang, Z., Xu, Y. (2017). Projective Representation Learning for Discriminative Face Recognition. In: Yang, J., et al. Computer Vision. CCCV 2017. Communications in Computer and Information Science, vol 772. Springer, Singapore. https://doi.org/10.1007/978-981-10-7302-1_1

DOI: https://doi.org/10.1007/978-981-10-7302-1_1

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7301-4

Online ISBN: 978-981-10-7302-1

eBook Packages: Computer Science, Computer Science (R0)