Abstract

A novel layer-based image fusion method is proposed in this paper. It exploits the implicit patterns among source images in two parts: (i) a more precise model, rooted in transfer learning and coupled dictionaries, for dividing source images into layers; and (ii) a fusion scheme based on multi-scale transformation that efficiently recombines the layers into the final fused image. Rigorous subjective and objective experimental comparisons demonstrate that the proposed method achieves better results for both visual perception and computer processing.



1 Introduction

Due to the limitations of a single sensor, multi-sensor images are often used to provide a comprehensive description of a target. Image fusion combines multi-sensor images into a single, more comprehensive fused image for visual perception and computer processing. Image fusion has been widely used in many applications, such as visible and infrared photography, medical imaging and remote sensing.

In early studies, researchers usually defined significance and match measures of image content directly in the original or a transform domain. Among the approaches working in different domains, multi-scale transformation [1,2,3,4] and sparse representation [5, 6] are the most typical. Many approaches also strive to define better indexes and fusion rules [7]. A more detailed introduction to these algorithms can be found in the excellent review by Li et al. [8].

Since multi-sensor images describe the same target with different sensor types and in different environments, some relationships can be expected to exist among them [9]. The shared target leads to redundancy, while the variety of sensor types and environments generates complementary information. Some novel approaches are designed around the redundancy and complementarity of source images, relying on sophisticated manual definitions of this relationship. For example, SIRF for satellite multispectral and panchromatic image fusion [10], infrared-visible image fusion [11] and MRSR for multi-focus image fusion [12] have been shown to outperform previous work. Other approaches are designed to reveal the implicit relationship among source images automatically [13, 14].

In this paper, inspired by [13, 15], a novel algorithm is proposed that exploits redundancy and complementarity via transfer learning, coupled dictionaries and some specific prior knowledge. After the information in the source images has been separated, a fusion scheme is designed to obtain the final fused image.

The rest of this paper is organized as follows. Section 2 introduces and discusses related work. Section 3 describes our method in detail, and Sect. 4 compares the proposed method with state-of-the-art algorithms. Section 5 concludes the paper.

2 Related Work

2.1 Layer Division Based Image Fusion

The relationship between redundancy and complementarity can be modeled mathematically from a probabilistic viewpoint, as follows. To simplify the discussion, we consider only the case of two source images.
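From the component definitions in the next paragraph, the two equations decompose each marginal into a correlated part and an individual part, in the manner of the law of total probability. Reconstructed under that reading, they are:

\[ P(x_1) = P(x_1x_2) + P(x_1\overline{x_2}) \tag{1} \]
\[ P(x_2) = P(x_1x_2) + P(x_2\overline{x_1}) \tag{2} \]

The correlated term \(P(x_1x_2)\) appears in both equations, which is what makes it redundant, while each individual term appears in only one.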

This formulation divides the original image content into two parts. The left-hand sides of the two equations are the marginal distributions of source images \(x_1\) and \(x_2\). \(P(x_1\overline{x_2})\) and \(P(x_2\overline{x_1})\) are the individual components, while \(P(x_1x_2)\) is the correlated component. Essentially, the core task of exploiting redundancy and complementarity is to estimate the individual and correlated components across the two images.

Yu et al. [13] extract features of the source images with a K-SVD dictionary and label them using their proposed joint sparse representation (JSR). The correlated layer and the individual layer are then reconstructed from the correspondingly labeled features. In effect, this approximates the correlated and individual components with K-SVD dictionary atoms.

Here \(\theta \) denotes all atoms of the K-SVD dictionary, while \(\theta _C\) and \(\theta _I\) are the correlated and individual features, respectively, and \(f(\cdot )\) represents the sparse reconstruction procedure used to estimate the ideal layer division.

The more precise the dictionary, the better the divided layers [15]. Unfortunately, existing dictionary training algorithms cannot guarantee that the learned features are orthogonal, exactly precise and free of reconstruction error. As illustrated in Fig. 1, these defects limit the precision of the model.

Because the individual and correlated components are difficult to estimate directly, while the marginal probabilities are known, we propose a novel model that estimates the posterior probabilities instead. We reformulate (1) and (2) as follows.
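One form consistent with Sect. 3.1 follows from substituting the product rule \(P(x_1x_2)=P(x_1|x_2)P(x_2)=P(x_2|x_1)P(x_1)\) into (1) and (2); it is reconstructed here from the surrounding text:

\[ P(x_1) = P(x_1|x_2)\,P(x_2) + P(x_1\overline{x_2}) \tag{4} \]
\[ P(x_2) = P(x_2|x_1)\,P(x_1) + P(x_2\overline{x_1}) \tag{5} \]

Since the marginals \(P(x_1)\) and \(P(x_2)\) are known, estimating the two conditionals fixes the correlated part of each image, and the individual part follows as the remainder.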

In this form, a more precise estimate of the joint probability is obtained by combining the posterior probabilities. Transfer learning and coupled dictionary learning, introduced in Sect. 2.2, have addressed this kind of task for many years.

2.2 Introduction to Transfer Learning and Coupled Dictionary

Transfer learning aims to improve performance on a target task by transferring knowledge from a source task [16]. Its core is the mechanism for extracting knowledge from the source and transferring it to the target. Some works [17, 18] focus on transferring knowledge across two unlabeled domains. To the best of our knowledge, transfer learning has not yet been used in image fusion, although it closely matches the image fusion task.

Coupled dictionary training is often used to learn feature spaces that associate cross-domain image data while jointly improving the representative ability of each dictionary [19]. Gao et al. proposed a multi-focus image fusion approach based on coupled dictionary training [20].

3 Proposed Method

3.1 Transfer Learning and Coupled Dictionary Based Layer Division

In Sect. 2.1, the problem was converted into estimating \(P(x_1|x_2)\) and \(P(x_2|x_1)\) in (4) and (5). Because \(P(x_1|x_2)\) and \(P(x_2|x_1)\) are asymmetric, each of the two images is divided into its own two layers in this model.

Let \(D_1\) be the feature dictionary trained on image \(x_1\); it is reasonable to treat \(D_1\) as the source knowledge in transfer learning [18]. When \(x_2\) is reconstructed with \(D_1\), its correlated component should be reconstructed well, whereas its individual component is presumably lost. Applying the same process to both \(x_1\) and \(x_2\), the optimization goal can be formulated as follows:

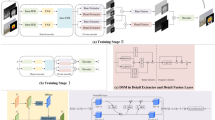

\(X_1\) is the sample matrix of \(x_1\); its columns are vectorized patches extracted with a sliding window. \(A_1\) and \(A_2\) are the coefficient matrices, and \(l(A_1,A_2,D_1,D_2)\) is a regularization term that encodes the expected properties of the dictionaries and of the reconstruction process. The layer division scheme is illustrated in Fig. 2.
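The sample matrices can be built with a standard sliding-window patch extraction (often called im2col). The sketch below is a minimal NumPy version under stated assumptions: grayscale float images, stride 1 by default, and overlapping patches averaged when a layer image is reassembled from its columns. The helper names `image_to_patches` and `patches_to_image` are hypothetical, and the paper does not state how overlapping patches are recombined.

```python
import numpy as np


def image_to_patches(img: np.ndarray, p: int, stride: int = 1) -> np.ndarray:
    """Return a (p*p, N) sample matrix whose columns are vectorized p x p patches."""
    h, w = img.shape
    cols = []
    for i in range(0, h - p + 1, stride):
        for j in range(0, w - p + 1, stride):
            cols.append(img[i:i + p, j:j + p].reshape(-1))
    return np.stack(cols, axis=1)


def patches_to_image(X: np.ndarray, shape: tuple, p: int, stride: int = 1) -> np.ndarray:
    """Reassemble an image by averaging overlapping patches (assumed recombination rule).

    Pixels not covered by any patch (possible when stride > 1) are left at 0.
    """
    h, w = shape
    acc = np.zeros(shape, dtype=float)
    cnt = np.zeros(shape, dtype=float)
    k = 0
    for i in range(0, h - p + 1, stride):
        for j in range(0, w - p + 1, stride):
            acc[i:i + p, j:j + p] += X[:, k].reshape(p, p)
            cnt[i:i + p, j:j + p] += 1
            k += 1
    return acc / np.maximum(cnt, 1)
```

With stride 1 every pixel is covered, so reassembling unmodified columns returns the original image exactly; applying the same averaging to a layer matrix yields the corresponding layer image.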

3.2 Feature Extraction and Exchanged Representation

Learning the dictionary D yields a set of features that determines the accuracy of layer division. Unfortunately, as discussed in Sect. 2.1, reconstruction error still affects the layer division in the proposed model. Instead of designing a new, sophisticated algorithm to seek a better dictionary, we adopt an alternative method inspired by [12].

The original image patches themselves can transfer knowledge between images without losing any information. However, during knowledge transfer, raw patches are poor at distinguishing features. To ensure that each component is represented correctly in every layer image, all elements of the layer images must be non-negative, consistent with the original patches.

Our optimization problem therefore changes to formulation (7), in which the logical notation denotes an element-wise relation between corresponding entries of the two matrices.

A common assumption in image fusion is that the source images have been pre-registered before further processing, and our work adopts it as well. This assumption guarantees that correlated knowledge, which depicts the same phenomenon, lies in the same region of both source images. Consequently, the j-th patch of \(X_1\) shares correlated knowledge only with the j-th patch of \(X_2\). Using this property, we rewrite (7) into formulation (8).

In this formulation, \(\alpha _{1_{j}}\) is the j-th column of \(A_1\). The first regularization term, weighted by \(\lambda _1\), ensures that a patch accepts knowledge from the corresponding patch in the other image, while \(\lambda _2\) controls how much knowledge a patch may accept from other patches. The third and fourth regularization terms penalize negative elements in the layer images. When these parameters are infinite, the optimum is reached when at least one element coincides between each pair of corresponding columns of \(X_1\) and \(X_2\).
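The limiting case can be made concrete under explicit assumptions. The sketch assumes \(\lambda _2 \to \infty\), so patch j of \(x_1\) receives knowledge only from patch j of \(x_2\), and replaces the third and fourth penalties with hard non-negativity constraints. Each correlated column is then a scaled copy \(a_j\) times the matching \(x_2\) patch. For non-negative data the least-squares scale is never below the largest feasible one, so the optimum is the largest scale that keeps the individual layer non-negative, which is exactly where some element of the two columns coincides. The function `limit_layer_division` is hypothetical; it is not the authors' solver for (8), which is not given here.

```python
import numpy as np


def limit_layer_division(X1: np.ndarray, X2: np.ndarray):
    """Split X1 into (correlated, individual) layers using only the matching patch of X2.

    Assumed limiting case: column j of the correlated layer is a_j * X2[:, j],
    subject to 0 <= a_j * X2[:, j] <= X1[:, j] element-wise.
    """
    if X1.shape != X2.shape:
        raise ValueError("sample matrices must have the same shape")
    if np.any(X1 < 0) or np.any(X2 < 0):
        raise ValueError("sample matrices must be non-negative")
    n = X1.shape[1]
    a = np.zeros(n)
    for j in range(n):
        x1, x2 = X1[:, j], X2[:, j]
        support = x2 > 0
        if support.any():
            # Largest feasible scale. The unconstrained least-squares scale
            # <x1, x2> / <x2, x2> is never smaller for non-negative data,
            # so this boundary value is also the constrained optimum.
            a[j] = np.min(x1[support] / x2[support])
    C1 = X2 * a                      # broadcasting scales column j by a[j]
    I1 = np.maximum(X1 - C1, 0.0)    # clip floating-point round-off below zero
    return C1, I1
```

Swapping the arguments gives the layers of \(x_2\); the two results generally differ, which mirrors the asymmetry of \(P(x_1|x_2)\) and \(P(x_2|x_1)\).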

The coupled dictionary problem is defined similarly to (8), except that the positions of \(D_1\) and \(D_2\) are exchanged. In this paper, we propose a novel algorithm that solves this optimization by exploiting the property described in [19].

3.3 Fusion Scheme Based on Proposed Layer Division Method

The correlated layer and the individual layer store redundant and complementary information, respectively. Ideally, the fused image would inherit all redundant and complementary information directly. However, because complementary components may be incompatible, the better fusion rule in practice is to choose the more informative one. Furthermore, once separated, complementary information is no longer influenced by redundant information when its informativeness is measured. Hence the complementary components in the fused image appear enhanced compared with the source images.
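A minimal sketch of this rule, assuming the layers are same-sized float arrays: the correlated layers are averaged (an assumption, since they should carry nearly the same content), and, pixel by pixel, the individual component with the larger local energy over a small window is kept. Local energy is a stand-in informativeness measure chosen for illustration, the names `local_energy` and `fuse_layers` are hypothetical, and the actual scheme applies its rules in the DTCWT and NSCT domains.

```python
import numpy as np


def local_energy(img: np.ndarray, r: int = 1) -> np.ndarray:
    """Mean squared value over a (2r+1) x (2r+1) window, with edge padding."""
    padded = np.pad(img.astype(float) ** 2, r, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w))
    for di in range(2 * r + 1):
        for dj in range(2 * r + 1):
            out += padded[di:di + h, dj:dj + w]
    return out / (2 * r + 1) ** 2


def fuse_layers(C1: np.ndarray, C2: np.ndarray,
                I1: np.ndarray, I2: np.ndarray, r: int = 1) -> np.ndarray:
    """Keep redundant content once and pick the more informative individual component."""
    correlated = 0.5 * (C1 + C2)  # redundant information, assumed near-identical
    pick_first = local_energy(I1, r) >= local_energy(I2, r)
    individual = np.where(pick_first, I1, I2)  # choose-max rule per pixel
    return correlated + individual
```

Because the selection compares individual layers only, a strong correlated background cannot mask a weak but distinctive individual detail, which is the mechanism behind the enhancement described above.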

Sparse representation cannot handle high-frequency information efficiently [7], so multi-scale transformation is better suited to fusing the corresponding layers. As shown in Fig. 3, our fusion scheme is built on the DTCWT [2] and NSCT [4].

4 Experiment

An infrared-visible (IR-VI) image pair and a multi-focus image pair are chosen for the experiments because their individual and correlated components are distributed in distinctive ways. The first part compares the layers divided by the method of [13] with those of the proposed method; subjective and objective comparisons of the final fused images follow.

4.1 Layer Division Results and Discussion

The approach of [13] uses 8\(\,\times \,\)8 sliding windows and a 64\(\,\times \,\)500 K-SVD dictionary. In the proposed method, because IR-VI images have low similarity while multi-focus images have high similarity, sliding windows of size 3\(\,\times \,\)3 and 16\(\,\times \,\)16 are applied to them, respectively.
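As a check of the sizes involved: each column of a sample matrix is a vectorized \(p\times p\) patch, so its length is \(p^2\). For an \(H\times W\) image scanned with stride 1 (an assumption, since the stride is not stated), the number of columns is \((H-p+1)(W-p+1)\).

\[ 8\times 8 \;\to\; 64 \text{ entries, matching } D\in\mathbb{R}^{64\times 500}\text{, i.e. } 500 \text{ atoms, } 500/64 \approx 7.8\times \text{ overcomplete} \]
\[ 3\times 3 \;\to\; 9 \text{ entries (IR-VI)}, \qquad 16\times 16 \;\to\; 256 \text{ entries (multi-focus)} \]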

Figures 4 and 5 illustrate the layers divided by the proposed method and by [13]. Clearer patterns emerge in the layers divided by the proposed method, and they contain no cluttered information. The individual layers of the proposed method also differ noticeably from those of the JSR approach.

4.2 Fusion Results and Comparison

Because the divided layers are finally integrated into the fused image by a multi-scale transformation fusion scheme, the discrete wavelet transform (DWT) and the nonsubsampled contourlet transform (NSCT) are chosen for a comparative study. The multi-scale decomposition level is 4 for both DWT and NSCT. In addition, several approaches are compared using the optimal parameters described in their papers [7, 13].
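For reference, a 4-level DWT baseline of this kind can be sketched with a hand-written orthonormal Haar transform and a common rule: average the coarsest approximation band and keep the larger-magnitude detail coefficient. The Haar filter, the fusion rule and the function names are assumptions for illustration; the text does not state which wavelet or rules the comparison used, and NSCT is not reproduced here.

```python
import numpy as np

S = np.sqrt(2.0)


def haar2(x: np.ndarray):
    """One level of the orthonormal 2-D Haar DWT; height and width must be even."""
    a = (x[0::2, :] + x[1::2, :]) / S  # row-pair lowpass
    d = (x[0::2, :] - x[1::2, :]) / S  # row-pair highpass
    ll = (a[:, 0::2] + a[:, 1::2]) / S
    lh = (a[:, 0::2] - a[:, 1::2]) / S
    hl = (d[:, 0::2] + d[:, 1::2]) / S
    hh = (d[:, 0::2] - d[:, 1::2]) / S
    return ll, (lh, hl, hh)


def ihaar2(ll: np.ndarray, details) -> np.ndarray:
    """Exact inverse of haar2."""
    lh, hl, hh = details
    h, w = ll.shape
    a = np.empty((h, 2 * w))
    d = np.empty((h, 2 * w))
    a[:, 0::2], a[:, 1::2] = (ll + lh) / S, (ll - lh) / S
    d[:, 0::2], d[:, 1::2] = (hl + hh) / S, (hl - hh) / S
    x = np.empty((2 * h, 2 * w))
    x[0::2, :], x[1::2, :] = (a + d) / S, (a - d) / S
    return x


def dwt_fuse(x1: np.ndarray, x2: np.ndarray, levels: int = 4) -> np.ndarray:
    """Average the coarsest approximation, keep the larger-magnitude detail coefficient."""
    if x1.shape != x2.shape:
        raise ValueError("images must have the same shape")
    if any(s % (2 ** levels) for s in x1.shape):
        raise ValueError("image sides must be divisible by 2**levels")
    a1, a2 = x1.astype(float), x2.astype(float)
    fused_details = []
    for _ in range(levels):
        a1, d1 = haar2(a1)
        a2, d2 = haar2(a2)
        fused_details.append(tuple(np.where(np.abs(p) >= np.abs(q), p, q)
                                   for p, q in zip(d1, d2)))
    out = 0.5 * (a1 + a2)
    for details in reversed(fused_details):
        out = ihaar2(out, details)
    return out
```

Fusing an image with itself returns the image, a quick sanity check that the transform pair reconstructs perfectly.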

Since multi-sensor images are used to describe a target comprehensively, complementary information may be more important than redundant information. Because the proposed approach reduces, to some degree, the interference between complementary and redundant information, some details are enhanced in the results shown in Figs. 6 and 7. Unlike some image enhancement algorithms, this emphasis on individual components does not introduce artificial components.

In Fig. 6, the proposed method preserves the elliptical structure of the fence in the gallery, the bracket of the left traffic light and the line on the road, whereas the other approaches lose them because they retain information from only one source. These regions are marked with red boxes. In addition, the fused image of the proposed method clearly depicts the details of the stools in front of the bar, the window lattice and the backpack of the pedestrian walking past the bar.

In the multi-focus scenario, the proposed method obtains sharper and clearer results than the other methods. A careful comparison along the borders of the focused regions shows no shadows or oversharpened edges in our results. Some important regions are again marked with red boxes. The proposed model makes gradient information more prominent, since gradients are individual components among the source images.

Following Liu et al. [21], four objective metrics that emphasize different aspects are chosen to evaluate and compare the proposed method. \(Q_{VIFF}\) [22] simulates human vision, while \(Q_e\) comes from information theory. \(Q_{SF}\) [23] reflects the richness of gradient information, and \(Q_{SSIM}\) [22] measures the structural similarity between the fused image and the source images.
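The quantity behind \(Q_{SF}\) is the spatial frequency of an image, built from squared row and column first differences. The sketch computes only this classic spatial frequency, normalized by the image size; it is not the full metric of [23], which, as we understand it, extends this with diagonal directions and compares the fused image against a reference derived from the source images. The function name is hypothetical.

```python
import numpy as np


def spatial_frequency(img: np.ndarray) -> float:
    """Classic spatial frequency sqrt(RF^2 + CF^2), normalized by the pixel count."""
    f = img.astype(float)
    rf2 = np.sum(np.diff(f, axis=1) ** 2) / f.size  # row frequency: horizontal differences
    cf2 = np.sum(np.diff(f, axis=0) ** 2) / f.size  # column frequency: vertical differences
    return float(np.sqrt(rf2 + cf2))
```

A flat image scores 0, and sharper texture raises the score, which is why an approach that emphasizes gradients tends to score well here.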

As Table 1 shows, the results of the proposed method obtain high \(Q_{VIFF}\), \(Q_e\) and \(Q_{SF}\) scores in both the IR-VI and multi-focus scenarios. Because it emphasizes individual components, the proposed method does not keep a structure consistent with any single source image, which lowers its \(Q_{SSIM}\) score (Fig. 8).

5 Conclusion

In this paper, we proposed a novel approach for dividing images into a correlated layer and an individual layer. Compared with previous work, our layers better reveal the implicit patterns among source images. Building on this layer division, we use existing multi-scale transformations to fuse the corresponding layers separately and combine them into the final fused image. The experimental results show that the proposed method is competitive with state-of-the-art approaches. Since misalignment, noise and moving objects appear differently in each image, robust approaches will be the focus of our future work.

References

[1] Zhang, Z., Blum, R.S.: A categorization of multiscale-decomposition-based image fusion schemes with a performance study for a digital camera application. Proc. IEEE 87, 1315 (1999)

[2] Lewis, J.J., O’Callaghan, R.J., Nikolov, S.G., Bull, D.R., Canagarajah, N.: Pixel- and region-based image fusion with complex wavelets. Inf. Fusion 8, 119 (2007)

[3] Nencini, F., Garzelli, A., Baronti, S., Alparone, L.: Remote sensing image fusion using the curvelet transform. Inf. Fusion 8, 143 (2007)

[4] Li, T., Wang, Y.: Biological image fusion using a NSCT based variable-weight method. Inf. Fusion 12, 85 (2011)

[5] Yang, B., Li, S.: Multifocus image fusion and restoration with sparse representation. IEEE Trans. Instrum. Meas. 59, 884 (2010)

[6] Kim, M., Han, D.K., Ko, H.: Joint patch clustering-based dictionary learning for multimodal image fusion. Inf. Fusion 27, 198 (2016)

[7] Liu, Y., Liu, S., Wang, Z.: A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion 24, 147 (2015)

[8] Li, S., Kang, X., Fang, L., Hu, J., Yin, H.: Pixel-level image fusion: a survey of the state of the art. Inf. Fusion 33, 100 (2017)

[9] Kong, W.W., Lei, Y., Ren, M.M.: Fusion technique for infrared and visible images based on improved quantum theory model. In: Zha, H., Chen, X., Wang, L., Miao, Q. (eds.) CCCV 2015. CCIS, vol. 546, pp. 1–11. Springer, Heidelberg (2015). https://doi.org/10.1007/978-3-662-48558-3_1

[10] Chen, C., Li, Y., Liu, W., Huang, J.: SIRF: simultaneous satellite image registration and fusion in a unified framework. IEEE Trans. Image Process. 24, 4213 (2015)

[11] Ma, J., Chen, C., Li, C., Huang, J.: Infrared and visible image fusion via gradient transfer and total variation minimization. Inf. Fusion 31, 100 (2016)

[12] Zhang, Q., Levine, M.D.: Robust multi-focus image fusion using multi-task sparse representation and spatial context. IEEE Trans. Image Process. 25, 2045 (2016)

[13] Yu, N., Qiu, T., Bi, F., Wang, A.: Image features extraction and fusion based on joint sparse representation. IEEE J. Sel. Top. Signal Process. 5, 1074 (2011)

[14] Son, C., Zhang, X.: Layer-based approach for image pair fusion. IEEE Trans. Image Process. 25, 2866 (2016)

[15] Panagakis, Y., Nicolaou, M.A., Zafeiriou, S., Pantic, M.: Robust correlated and individual component analysis. IEEE Trans. Pattern Anal. Mach. Intell. 38, 1665 (2016)

[16] Pan, S.J., Yang, Q.: A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22, 1345 (2010)

[17] Ando, R.K., Zhang, T.: A framework for learning predictive structures from multiple tasks and unlabeled data. J. Mach. Learn. Res. 6, 1817 (2005)

[18] Dai, W., Yang, Q., Xue, G., Yu, Y.: Self-taught clustering. In: ICML 2008, Helsinki, Finland (2008)

[19] Huang, D., Wang, Y.F.: Coupled dictionary and feature space learning with applications to cross-domain image synthesis and recognition. In: IEEE International Conference on Computer Vision, p. 2496 (2013)

[20] Gao, R., Vorobyov, S.A., Zhao, H.: Multi-focus image fusion via coupled dictionary training. In: IEEE International Conference on Acoustics, Speech and Signal Processing, p. 1666 (2016)

[21] Liu, Z., Blasch, E., Xue, Z., Zhao, J., Laganiere, R., Wu, W.: Objective assessment of multiresolution image fusion algorithms for context enhancement in night vision: a comparative study. IEEE Trans. Pattern Anal. Mach. Intell. 34, 94 (2012)

[22] Han, Y., Cai, Y., Cao, Y., Xu, X.: A new image fusion performance metric based on visual information fidelity. Inf. Fusion 14, 127 (2013)

[23] Zheng, Y., Essock, E.A., Hansen, B.C., Haun, A.M.: A new metric based on extended spatial frequency and its application to DWT based fusion algorithms. Inf. Fusion 8, 177 (2007)

Acknowledgments

The authors thank Glenn Easley, Yu Liu and Zheng Liu for sharing their code. This work is supported by the Fundamental Research Funds for the Central Universities of China (No. ZYGX2015J122 and No. ZYGX2015KYQD032), New Characteristic Teaching Material Construction (No. Y03094023701019427), the National Natural Science Foundation of China (No. 61701078), and the Scientific Research Foundation for the Returned Overseas Chinese Scholars, State Education Ministry [2015] 1098.


Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Hu, K., Sun, B., Deng, Q., Yang, Q. (2017). A Novel Layer Based Image Fusion Approach via Transfer Learning and Coupled Dictionary. In: Yang, J., et al. Computer Vision. CCCV 2017. Communications in Computer and Information Science, vol 772. Springer, Singapore. https://doi.org/10.1007/978-981-10-7302-1_17


DOI: https://doi.org/10.1007/978-981-10-7302-1_17


Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7301-4

Online ISBN: 978-981-10-7302-1

eBook Packages: Computer Science, Computer Science (R0)