Abstract

This paper proposes convolutional neural network (CNN) embedded fine-tune, based on image content, to evaluate image aesthetic quality. The approach both addresses the problem of small-scale data and quantifies image aesthetic quality. First, we compare Alexnet and VGG_S to determine which is more suitable for the image aesthetic quality evaluation task. Second, to further boost classification performance, we use the image content to train aesthetic quality classification models. However, the training set for each model then becomes smaller, and a single fine-tune cannot make full use of the small-scale dataset. Third, to solve this problem, we propose two consecutive fine-tunes, based on the aesthetic quality label and the content label respectively. Finally, the classification probability of the trained CNN models is used to evaluate image aesthetic quality. We experiment on the small-scale dataset Photo Quality. The experimental results show that the classification accuracy of our approach is higher than that of existing image aesthetic quality evaluation approaches.


1 Introduction

With the widespread use of networks and mobile devices such as mobile phones, the number of images is increasing rapidly. A large number of images are uploaded to various social networks every day. To help people present images of higher aesthetic quality and to explore the aesthetic cognitive ability of computers, image aesthetic quality evaluation is becoming more and more important. Image aesthetic quality evaluation aims to classify images as having high or low aesthetic quality. As shown in Fig. 1, high aesthetic quality images give people a more comfortable visual impression than low aesthetic quality images.

In the recent decade, enabling computers to distinguish image aesthetic quality among massive numbers of images by themselves has become the main research direction. Approaches to image aesthetic quality evaluation can be divided into traditional hand-crafted feature approaches [3, 4, 15, 21, 22, 24, 26, 32, 33] and deep learning CNN approaches [5, 8, 20, 23, 30, 31, 38].

Traditional hand-crafted approaches target objective factors that affect image aesthetic quality. Researchers have extracted many visual features, including low-level image statistics, such as edge distribution and color histograms, and high-level photographic rules, such as the rule of thirds and the golden ratio. In [3], Datta et al. proposed 56 global features related to structure, color, light and so on, and used linear regression to quantify image aesthetics. In [15], Ke et al. proposed a principled approach to designing high-level global features. They used the perceptual factors that distinguish professional photos from snapshots to design high-level global semantic features and measure the perceptual differences. In [22], Luo et al. began to use local features. They extracted the salient regions from a photo based on professional photography techniques and formulated a number of high-level semantic features based on the quotient of the salient region and the background. The classification rate of their method was 93%. In [21], Luo et al. proposed three classes of local features and two classes of global features to automatically classify image aesthetic quality. They used receiver operating characteristic (ROC) curves to show that these features are effective for image aesthetic quality evaluation. Shao et al. [26] used Gabor wavelet transformation, class imbalance and total scene understanding to extract the main subject and local features of images in different categories, and at the same time used the hue histogram and color pie to extract global color features. Dhar et al. [4] mainly analyzed high-level features that reflect image aesthetic quality. They proposed three classes of features: structure, content and light. They also used this approach to predict the interestingness of images. In [24], Obrador et al. focused on structure features. They proposed 55 structure features to evaluate image aesthetics, and the classification accuracy was close to the benchmark. In [32, 33], Wang et al. proposed 41 features related to structure, color, light, and global and local information to evaluate the aesthetic quality of images. Experimental results showed that these 41 features achieved high classification accuracy in image aesthetic quality evaluation.

In 2006, Hinton et al. [10] revived deep learning as a research direction. In the following years, CNNs achieved excellent performance. Motivated by their excellent performance in feature extraction and autonomous learning, CNNs are widely employed in computer vision and image processing, for example in handwritten numeral recognition [17], the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [9, 16, 27, 29], object detection and semantic segmentation [7, 19, 25], face recognition [28, 37], emotion recognition [2, 18, 35], image style recognition and transfer [6, 13], and sentiment analysis [36]. Researchers are also trying to use CNNs for aesthetic computation and for exploring aesthetic connotation [5, 8, 20, 23, 30, 31, 38]. In [20], Lu et al. proposed a novel double-column CNN trained on a global view and a fine-grained view. Their approach produced results about 10% better than those achieved with hand-crafted features on the AVA dataset [23]. Guo et al. [8] proposed PDCNN, a paralleled CNN architecture. Their results were better than those of [21] on Photo Quality, and PDCNN can also mitigate over-fitting and under-fitting. In [38], Zhou et al. used N-grams to describe image text features and used an SVM to learn the weights of the N-grams. They then combined the text features extracted by N-grams with the visual features extracted by a CNN to obtain excellent classification accuracy on the AVA dataset [23]. In addition, [5, 30, 31] also use CNNs to evaluate image aesthetic quality.

At present, the main problem with using CNNs in many vision tasks is that the available data are small in scale compared with the large-scale ImageNet dataset, which has millions of images. This problem also persists in image aesthetic quality evaluation. Currently, the best ways to address it are data augmentation and fine-tuning. Fine-tuning from models pre-trained on ImageNet has been found to yield state-of-the-art performance for many vision tasks, such as visual tracking [34], action recognition [14], object recognition [25] and human pose estimation [19]. Therefore, this paper mainly uses fine-tuning to evaluate image aesthetic quality. The main innovations and contributions of this paper can be summarized as follows:

1. Image content is employed to further boost classification performance. We fine-tune on the image content to train the aesthetic quality classification models and analyze the influence of image content on aesthetic quality classification.

2. Embedded fine-tune is proposed to address the shrinking of the training data caused by splitting the images by content, and to further boost classification performance. Embedded fine-tune consists of two fine-tunes, each with different training samples. The experimental results show that embedded fine-tune improves the image aesthetic quality classification accuracy.

3. The classification probability of the trained CNN models is used to give a more specific evaluation of image aesthetic quality, going beyond a label that is only high or low.

2 Related Work

2.1 Alexnet and VGG_S Architecture

In a CNN, feature learning is unified with classifier training on RGB images. As shown in Fig. 2 and Table 1, Alexnet [16], the winner of ILSVRC2012, has five convolution layers, two pooling layers and two fully connected layers. The input image is split into its R, G and B channels, and each channel is initially \(256\times 256\) in size. Alexnet uses the Rectified Linear Unit (ReLU) as its activation function. Local Response Normalization (LRN) following the ReLU aids generalization.

Data augmentation and dropout are used to reduce over-fitting in Alexnet. The first form of data augmentation cuts each \(256\times 256\) input image into five \(227\times 227\) patches, taken from the four corners and the center, and mirrors them horizontally, giving ten patches in total. The predictions of the network’s softmax layer are averaged over these ten patches. The second form alters the intensities of the R, G and B channels in the training images. Specifically, PCA is performed on the set of RGB pixel values, and multiples of the principal components found, scaled by a random variable drawn from a Gaussian with mean 0 and standard deviation 0.1, are added to each training image.
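The two augmentation steps can be sketched in NumPy as follows. This is an illustrative sketch rather than the Caffe pipeline used in the experiments: the function names `ten_crop` and `pca_color_jitter` are hypothetical, and for brevity the PCA is computed on the pixels of a single image, whereas Alexnet [16] computes it over the RGB values of the whole training set. Scaling each component by its eigenvalue follows the description in [16].

```python
import numpy as np

def ten_crop(image, crop=227):
    """Return the four corner crops, the center crop, and their horizontal mirrors."""
    h, w = image.shape[:2]
    cy, cx = (h - crop) // 2, (w - crop) // 2
    offsets = [(0, 0), (0, w - crop), (h - crop, 0), (h - crop, w - crop), (cy, cx)]
    crops = [image[y:y + crop, x:x + crop] for y, x in offsets]
    mirrored = [c[:, ::-1] for c in crops]  # flip along the width axis
    return np.stack(crops + mirrored)

def pca_color_jitter(image, rng, sigma=0.1):
    """Add a random multiple of each RGB principal component to every pixel."""
    pixels = image.reshape(-1, 3).astype(np.float64)
    cov = np.cov(pixels, rowvar=False)          # 3x3 covariance of R, G, B
    eigvals, eigvecs = np.linalg.eigh(cov)      # columns of eigvecs are the components
    alphas = rng.normal(0.0, sigma, size=3)     # one Gaussian draw per component
    shift = eigvecs @ (alphas * eigvals)        # the same RGB offset for all pixels
    return image.astype(np.float64) + shift
```

For a \(256\times 256\) RGB array, `ten_crop` returns an array of shape `(10, 227, 227, 3)`; averaging the softmax outputs over that first axis gives the averaged prediction described above.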

In [11], Hinton et al. proposed dropout, which sets the output of each hidden neuron to 0 with probability 0.5. Neurons that are “dropped out” in this way do not contribute to forward propagation or back-propagation.
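A minimal sketch of dropout on a list of activations is given below, assuming the formulation of [11] in which units are zeroed during training. The helper names are hypothetical. At test time, [11] keeps all units and scales their outputs by 0.5 instead; that step is omitted here.

```python
import random

def dropout_forward(activations, drop_prob=0.5, rng=None):
    """Zero each activation with probability drop_prob; return outputs and the mask."""
    rng = rng or random.Random()
    mask = [0.0 if rng.random() < drop_prob else 1.0 for _ in activations]
    outputs = [a * m for a, m in zip(activations, mask)]
    return outputs, mask

def dropout_backward(grad_outputs, mask):
    """Dropped units receive no gradient, so they do not take part in back-propagation."""
    return [g * m for g, m in zip(grad_outputs, mask)]
```

Reusing the same mask in the backward pass is what makes a dropped neuron contribute to neither direction of the computation.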

In [1], Chatfield et al. proposed VGG_S, which also has five convolution layers, together with three pooling layers and two fully connected layers. Compared with Alexnet, the size of the extracted patches is changed from \(227\times 227\) to \(224\times 224\), and the kernel size and stride of the first convolution layer are smaller. The error rate of VGG_S on ImageNet is lower than that of Alexnet.

2.2 Fine-Tune from ImageNet

As Fig. 3 shows, fine-tuning takes a well pre-trained model, adopts the same architecture and continues training from the pre-trained weights. It reflects a kind of semantic transfer from the general to the specific. When fine-tuning, the learning rate of the last layer of the CNN is set higher than that of the other layers, because this layer starts from random initialization while the others are already trained, and its number of outputs is set to match the target task. With the help of excellent existing models, fine-tuning saves considerable resources in new research.
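The two ingredients of fine-tuning described here, re-initializing the last layer and giving it a larger learning rate, can be illustrated with a toy sketch. Everything below is an assumption made for illustration: layers are flat lists of weights, the layer name `fc8`, the base learning rate and the multiplier of 10 are hypothetical values, and the paper's actual Caffe settings are not stated in the text.

```python
import random

def init_for_fine_tune(pretrained, num_outputs, last_layer="fc8", rng=None):
    """Copy the pre-trained weights, but re-initialize the last layer randomly."""
    rng = rng or random.Random(0)
    weights = {name: list(w) for name, w in pretrained.items() if name != last_layer}
    # The new last layer has one output per target class (2 for high/low quality).
    weights[last_layer] = [rng.gauss(0.0, 0.01) for _ in range(num_outputs)]
    return weights

def sgd_step(weights, grads, base_lr, lr_mult):
    """One SGD update in which each layer's step is scaled by its own multiplier."""
    updated = {}
    for name, w in weights.items():
        lr = base_lr * lr_mult.get(name, 1.0)  # layers without an entry use base_lr
        updated[name] = [wi - lr * gi for wi, gi in zip(w, grads[name])]
    return updated
```

Calling `sgd_step(weights, grads, base_lr=0.001, lr_mult={"fc8": 10.0})` moves the randomly initialized layer ten times faster than the pre-trained layers, which only need small adjustments.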

3 The Proposed Approaches

3.1 Comparing Alexnet with VGG_S

To determine whether Alexnet or VGG_S is more suitable for the image aesthetic quality evaluation task, and to boost classification performance, we proceed as follows. First, we mix the Animal, Architecture, Human, Landscape, Night, Plant and Static images of the dataset together as training samples, which are divided into high and low aesthetic quality. Second, we train Alexnet_All and VGG_S_All on all training samples. Third, to obtain Alexnet_FT_All and VGG_S_FT_All, we fine-tune from Alexnet_Model and VGG_S_Model, which are trained on ImageNet. Finally, we test these four models on the test samples of each kind of content and determine which architecture is more suitable for the image aesthetic quality classification task. The comparison procedure is described in Algorithm 1.

3.2 Training Models Based on Image Contents

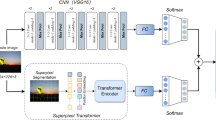

To further improve classification performance, as Fig. 4 shows, we fine-tune seven models from Alexnet_Model or VGG_S_Model, one for each of the seven kinds of content, using the training samples of that content. The inner box shows the training of Alexnet_Model or VGG_S_Model, and the outer box shows the training of the models based on image content. The CNN architecture used in training is the better of Alexnet and VGG_S. We use CNN_i to denote the seven trained models, where i represents Animal, Architecture, Human, Landscape, Night, Plant or Static and CNN represents Alexnet or VGG_S. Each model is tested on the testing samples of the same content.

3.3 Using Embedded Fine-Tune to Train Models

When we train the aesthetic quality classification models based on image content, the training set for each model becomes smaller, so we propose embedded fine-tune. As Fig. 5 shows, the inner dashed box shows the training process of Alexnet_FT_All or VGG_S_FT_All. The outer dashed box shows the fine-tune from Alexnet_FT_All or VGG_S_FT_All with the training samples of each kind of content. We use EFCNN_i to denote the seven trained models, where i again represents Animal, Architecture, Human, Landscape, Night, Plant or Static and EFCNN stands for Embedded Fine-tune Convolution Neural Network. The difference between the two fine-tunes is that the first uses only the aesthetic quality label, whereas the second uses both the aesthetic quality label and the content label. The algorithm is as follows:
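The control flow of the two consecutive fine-tunes can be illustrated on toy data. The sketch below is not the authors' algorithm listing and does not use a CNN: as a stand-in it uses a small logistic classifier on hand-made feature vectors, and the names `train` and `embedded_fine_tune`, the learning rate and the epoch count are all hypothetical. What it does show is the structure: the first stage continues from the pre-trained weights using all samples and only the quality label, and the second stage continues from the first-stage weights using only the samples of one content.

```python
import math

def train(samples, weights, epochs=50, lr=0.1):
    """Continue gradient-descent training of a logistic classifier from `weights`."""
    w = list(weights)  # copy, so the starting model is left untouched
    for _ in range(epochs):
        for features, label in samples:
            z = sum(wi * xi for wi, xi in zip(w, features))
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of high quality
            w = [wi - lr * (p - label) * xi for wi, xi in zip(w, features)]
    return w

def embedded_fine_tune(pretrained, dataset, contents):
    """dataset: list of (features, quality_label, content_label) triples."""
    # First fine-tune: all contents mixed together, aesthetic quality label only.
    ft_all = train([(x, q) for x, q, _ in dataset], pretrained)
    # Second fine-tune: start from ft_all, restricted to one content at a time.
    models = {}
    for content in contents:
        subset = [(x, q) for x, q, c in dataset if c == content]
        models[content] = train(subset, ft_all)
    return ft_all, models
```

In the paper's terms, `pretrained` plays the role of VGG_S_Model, `ft_all` that of VGG_S_FT_All, and `models[content]` that of EFCNN_i.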

3.4 Image Aesthetic Quality Evaluation

The traditional way of image aesthetic quality evaluation only divides images into high or low aesthetic quality. We score images to make the evaluation more specific. The score is calculated from the output probability of the trained models: the final Softmax-with-loss layer computes the class probabilities, and we use these probabilities to score image aesthetic quality.
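The scoring step can be sketched as follows, assuming a two-class output in which index 0 is the low-quality class and index 1 is the high-quality class (the ordering is an assumption, as is the function name `aesthetic_score`). The softmax turns the two raw network outputs into probabilities, and the probability of the high-quality class serves as the score.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of raw network outputs."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def aesthetic_score(logits, high_index=1):
    """Return (probability of high quality, predicted label) for a two-class output."""
    probs = softmax(logits)
    p_high = probs[high_index]
    p_low = probs[1 - high_index]
    label = "high" if p_high > p_low else "low"
    return p_high, label
```

Two images that are both labeled high can then be told apart by their scores, for example 0.62 versus 0.97, which is what makes the evaluation more specific than a binary label.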

4 Experiment Results and Analysis

4.1 Photo Quality Dataset and Experiment Platform

In this paper, the images used in the experiments come from an image dataset named Photo Quality. Photo Quality, built by Luo et al. [21], is a small-scale dataset for photo aesthetic quality evaluation. The images come from a website whose photos are taken by professional and amateur photographers. A total of 17,613 photos are labeled as high or low aesthetic quality when eight of the ten observers make the same judgment. According to their content, the photos are divided into seven categories: Animal, Architecture, Human, Landscape, Night, Plant and Static. As Table 2 shows, we filtered the dataset again and finally chose 15,562 images.

We use Caffe, a deep learning framework developed by Jia et al. [12], as our experiment platform. Our GPU is a GTX 1070 with 8 GB of memory. To ensure the generality of the experimental results, we use three-fold cross-validation.

4.2 The Comparison Results Between Alexnet and VGG_S

For each content, we randomly extract 300 low aesthetic quality images and 100 high aesthetic quality images from Photo Quality as testing samples. The remaining images are training samples. We use the data augmentation of Alexnet to expand the training samples. As Table 3 shows, the classification results of Alexnet_FT_All and VGG_S_FT_All are better than those of Alexnet_All and VGG_S_All, and the classification accuracy of VGG_S_FT_All is on average 0.87% higher than that of Alexnet_FT_All. These comparisons show that fine-tuning improves classification accuracy and that VGG_S is more suitable for image aesthetic quality evaluation, with or without fine-tuning. We therefore use VGG_S in the following experiments.
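The per-content split can be sketched as below. The record layout `(image_id, content, quality)`, the function name and the fixed seed are assumptions made for illustration; only the counts of 300 low and 100 high test images per content come from the text.

```python
import random

def split_by_content(images, n_low=300, n_high=100, seed=0):
    """images: list of (image_id, content, quality), quality being "high" or "low"."""
    rng = random.Random(seed)
    groups = {}
    for item in images:
        groups.setdefault((item[1], item[2]), []).append(item)
    train, test = [], []
    for (content, quality), items in sorted(groups.items()):
        rng.shuffle(items)  # random extraction within each content/quality group
        n_test = n_high if quality == "high" else n_low
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test
```

Drawing the test images separately for every content keeps the test sets the same size across the seven contents, so per-content accuracies are directly comparable.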

4.3 The Results Based on the Image Contents

We use the same training samples, testing samples and data augmentation as in the comparison of Alexnet with VGG_S. Table 4 compares the results with and without using image content. VGG_S_FT_i is trained with the VGG_S architecture, where i represents Animal, Architecture, Human, Landscape, Night, Plant or Static. The results show that the classification accuracy for each image content is higher than that of VGG_S_FT_All. This shows that training models based on image content can boost classification performance. Although the training set is smaller, its samples are more relevant to the content being classified, so the classification accuracy improves.

We also build a confusion matrix to show that image content is an important factor in image aesthetic quality evaluation. As Fig. 6 shows, the highest classification accuracies in the matrix are on its diagonal, while the others are much lower. Training a model on one image content and testing it on images of the same content improves the classification accuracy. This demonstrates not only the importance of image content in the training process but also the necessity of classifying images by content before using a model to classify them.
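A cross-content matrix of this kind can be computed as sketched below, assuming that each entry is the accuracy of the model trained on one content when tested on the images of another content (the exact layout of Fig. 6 is not given in the text). The function name and the representation of models as prediction functions are hypothetical.

```python
def cross_content_accuracy(models, test_sets):
    """models: {content: predict_fn}; test_sets: {content: [(x, label), ...]}.

    Returns matrix[train_content][test_content] = classification accuracy.
    """
    matrix = {}
    for train_content, predict in models.items():
        matrix[train_content] = {}
        for test_content, samples in test_sets.items():
            correct = sum(1 for x, y in samples if predict(x) == y)
            matrix[train_content][test_content] = correct / len(samples)
    return matrix
```

The diagonal entries `matrix[c][c]` correspond to training and testing on the same content, which is where the text reports the highest accuracies.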

4.4 The Results of Embedded Fine-Tune

Embedded fine-tune is proposed on the basis of the conclusions and problems of the first two experiments, and it uses the same training samples, testing samples and data augmentation. We compare the results of embedded fine-tune with those of the first two experiments, and then with existing traditional approaches and CNN approaches.

As Fig. 7 and Table 5 show, the classification accuracy of EFCNN_i is on average 0.88% higher than that of VGG_S_FT_i. The largest gain is for EFCNN_Plant, whose classification accuracy is 1.71% higher than that of VGG_S_FT_Plant. The classification accuracy of EFCNN_i is on average 2.08% higher than that of VGG_S_FT_All. The largest gain is for EFCNN_Landscape, whose classification accuracy is 4.2% higher than that of VGG_S_FT_All. Embedded fine-tune improves classification performance because the second fine-tune is based on a binary classification problem that is the same as the image aesthetic quality classification task. In addition, image content is incorporated into embedded fine-tune, and embedded fine-tune makes full use of the small-scale dataset. In Fig. 8, we visualize the first convolution layer to illustrate why embedded fine-tune improves classification accuracy. The textures of the features extracted with embedded fine-tune are clearer than those extracted without it.

Traditional approaches are more complex, and they depend on features designed for a particular dataset. The classification accuracies of embedded fine-tune for each content are much better than the results of [3, 15, 21, 22, 32, 33]. The gap between traditional approaches and CNN approaches is growing ever wider.

We also compare embedded fine-tune with existing CNN approaches: DVGG_S_AD_i (Double VGG_S Adaption), in which we duplicate VGG_S and use the parallel-network adaption method proposed by Lu et al. [20], where i represents Animal, Architecture, Human, Landscape, Night, Plant or Static, and PDCNN (Paralleled Deep Convolution Neural Network), proposed by Guo et al. [8].

The classification accuracy of EFCNN_i is on average 2.33% higher than that of PDCNN. The largest gain is for EFCNN_Static, whose classification accuracy is 3.62% higher than that of PDCNN. The classification accuracy of EFCNN_i is on average 3.35% higher than that of DVGG_S_AD_i. The largest gain is for EFCNN_Plant, whose classification accuracy is 5.5% higher than that of DVGG_S_AD_Plant.

PDCNN and DVGG_S_AD_i use parallel network architectures to train models. The features that a paralleled CNN architecture can extract from a small-scale dataset are also limited, because the data scale is too small to offer more features unless the amount of data is expanded. Fine-tuning from a model pre-trained on a large-scale dataset obtains more useful features and transfers them to the target task. Embedded fine-tune obtains features not only from the large-scale dataset but also from the target task dataset, so it can overcome the limitation of small-scale data.

4.5 Image Aesthetic Quality Evaluation

We use the classification probability of the trained EFCNN_i to score image aesthetic quality. The score represents how high or low the aesthetic quality of an image is. As Fig. 9 shows, blue represents the probability of high aesthetic quality and orange represents the probability of low aesthetic quality. If the high aesthetic quality score is greater than the low one, the image is a high aesthetic quality image. Otherwise, it is a low aesthetic quality image.

5 Conclusions

This paper analyzes the effect of image content on image aesthetic quality evaluation. We propose embedded fine-tune to solve two problems that arise when training image aesthetic quality classification models: the training data become smaller, and a single fine-tune cannot make full use of a small-scale dataset. The experimental results show that embedded fine-tune addresses the small-scale data problem and boosts image aesthetic quality evaluation performance. Finally, the classification probability is used to evaluate image aesthetic quality, which makes the evaluation more specific.

References

1. Chatfield, K., Simonyan, K., Vedaldi, A., Zisserman, A.: Return of the devil in the details: delving deep into convolutional nets. Comput. Sci. (2014)

2. Chu, X., Ouyang, W., Yang, W., Wang, X.: Multi-task recurrent neural network for immediacy prediction. In: IEEE International Conference on Computer Vision, pp. 3352–3360 (2015)

3. Datta, R., Joshi, D., Li, J., Wang, J.Z.: Studying aesthetics in photographic images using a computational approach. In: European Conference on Computer Vision, pp. 288–301 (2006)

4. Dhar, S., Ordonez, V., Berg, T.L.: High level describable attributes for predicting aesthetics and interestingness. IEEE Comput. Soc. 42(7), 1657–1664 (2011)

5. Dong, Z., Shen, X., Li, H., Tian, X.: Photo quality assessment with DCNN that understands image well. In: He, X., Luo, S., Tao, D., Xu, C., Yang, J., Hasan, M.A. (eds.) MMM 2015. LNCS, vol. 8936, pp. 524–535. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-14442-9_57

6. Gatys, L.A., Ecker, A.S., Bethge, M.: Image style transfer using convolutional neural networks. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 2414–2423 (2016)

7. Girshick, R.B., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: Computer Vision and Pattern Recognition, pp. 580–587 (2013)

8. Guo, L., Li, F.: Image aesthetic evaluation using paralleled deep convolution neural network. Comput. Sci. (2015)

9. He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

10. Hinton, G.E., Osindero, S., Teh, Y.W.: A fast learning algorithm for deep belief nets. Neural Comput. 18(7), 1527–1554 (2006)

11. Hinton, G.E., Srivastava, N., Krizhevsky, A., Sutskever, I., Salakhutdinov, R.R.: Improving neural networks by preventing co-adaptation of feature detectors. Comput. Sci. 3(4), 212–223 (2012)

12. Jia, Y., Shelhamer, E., Donahue, J., Karayev, S., Long, J., Girshick, R.B., Guadarrama, S., Darrell, T.: Caffe: convolutional architecture for fast feature embedding. CoRR (2014)

13. Karayev, S., Trentacoste, M., Han, H., Agarwala, A., Darrell, T., Hertzmann, A., Winnemoeller, H.: Recognizing image style. Comput. Sci. (2013)

14. Karpathy, A., Toderici, G., Shetty, S., Leung, T., Sukthankar, R., Li, F.F.: Large-scale video classification with convolutional neural networks. In: Computer Vision and Pattern Recognition, pp. 1725–1732 (2014)

15. Ke, Y., Tang, X., Jing, F.: The design of high-level features for photo quality assessment. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 419–426 (2006)

16. Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: International Conference on Neural Information Processing Systems, pp. 1097–1105 (2012)

17. Lecun, Y., Boser, B., Denker, J.S., Henderson, D., Howard, R.E., Hubbard, W., Jackel, L.D.: Backpropagation applied to handwritten zip code recognition. Neural Comput. 1(4), 541–551 (1989)

18. Levi, G., Hassner, T.: Emotion recognition in the wild via convolutional neural networks and mapped binary patterns. In: ACM on International Conference on Multimodal Interaction, pp. 503–510 (2015)

19. Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39(4), 640 (2014)

20. Lu, X., Lin, Z., Jin, H., Yang, J., Wang, J.Z.: Rapid: rating pictorial aesthetics using deep learning. IEEE Trans. Multimed. 17(11), 2021–2034 (2015)

21. Luo, W., Wang, X., Tang, X.: Content-based photo quality assessment. In: IEEE International Conference on Computer Vision, pp. 2206–2213 (2011)

22. Luo, Y., Tang, X.: Photo and video quality evaluation: focusing on the subject. In: European Conference on Computer Vision, pp. 386–399 (2008)

23. Murray, N., Marchesotti, L., Perronnin, F.: AVA: a large-scale database for aesthetic visual analysis. In: Computer Vision and Pattern Recognition, pp. 2408–2415 (2012)

24. Obrador, P., Schmidt-Hackenberg, L., Oliver, N.: The role of image composition in image aesthetics. In: IEEE International Conference on Image Processing, pp. 3185–3188 (2010)

25. Ouyang, W., Loy, C.C., Tang, X., Wang, X., Zeng, X., Qiu, S., Luo, P., Tian, Y., Li, H., Yang, S.: DeepID-Net: deformable deep convolutional neural networks for object detection. IEEE Trans. Pattern Anal. Mach. Intell. PP(99), 1 (2016)

26. Shao, J., Zhou, Y.: Photo quality assessment in different categories. J. Comput. Inf. Syst. 9(8), 3209–3217 (2013)

27. Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. Comput. Sci. (2014)

28. Sun, Y., Wang, X., Tang, X.: Deep learning face representation from predicting 10,000 classes. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 1891–1898 (2014)

29. Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., Rabinovich, A.: Going deeper with convolutions. In: Computer Vision and Pattern Recognition, pp. 1–9 (2015)

30. Tian, X., Dong, Z., Yang, K., Mei, T.: Query-dependent aesthetic model with deep learning for photo quality assessment. IEEE Trans. Multimed. 17(11), 2035–2048 (2015)

31. Veerina, P.: Learning good taste: classifying aesthetic images. Technical report, Stanford University (2015)

32. Wang, C., Pu, Y., Xu, D., Zhu, J., Tao, Z.: Evaluating aesthetics quality in portrait photos. J. Softw. 20–28 (2015)

33. Wang, C., Pu, Y., Xu, D., Zhu, J., Tao, Z.: Evaluating aesthetics quality in scenery images. In: Proceeding of National Conference on Multimedia Technology, pp. 141–149 (2015)

34. Wang, L., Ouyang, W., Wang, X., Lu, H.: Visual tracking with fully convolutional networks. In: IEEE International Conference on Computer Vision, pp. 3119–3127 (2016)

35. You, Q., Yang, J.: Building a large scale dataset for image emotion recognition: the fine print and the benchmark. In: Thirtieth AAAI Conference on Artificial Intelligence, pp. 308–314 (2016)

36. You, Q., Yang, J., Yang, J., Yang, J.: Robust image sentiment analysis using progressively trained and domain transferred deep networks. In: Twenty-Ninth AAAI Conference on Artificial Intelligence, pp. 381–388 (2015)

37. Zhang, Z., Luo, P., Chen, C.L., Tang, X.: Facial landmark detection by deep multi-task learning. In: European Conference on Computer Vision, pp. 94–108 (2014)

38. Zhou, Y., Lu, X., Zhang, J., Wang, J.Z.: Joint image and text representation for aesthetics analysis. In: ACM on Multimedia Conference, pp. 262–266 (2016)

Acknowledgments

This work was supported by the Natural Science Foundation of China (No. 61271361, 61163019, 61462093, 61761046), the Research Foundation of Yunnan Province (2014FA021, 2014FB113), and the Digital Media Technology Key Laboratory of Universities in Yunnan.

Copyright information

© 2017 Springer Nature Singapore Pte Ltd.


Cite this paper

Li, Y., Pu, Y., Xu, D., Qian, W., Wang, L. (2017). Image Aesthetic Quality Evaluation Using Convolution Neural Network Embedded Fine-Tune. In: Yang, J., et al. Computer Vision. CCCV 2017. Communications in Computer and Information Science, vol 772. Springer, Singapore. https://doi.org/10.1007/978-981-10-7302-1_23

DOI: https://doi.org/10.1007/978-981-10-7302-1_23

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7301-4

Online ISBN: 978-981-10-7302-1

eBook Packages: Computer Science (R0)