Abstract

In recent years, many deep learning based methods were proposed to deal with the hyperspectral image (HSI) classification task. So far, most of these methods focus on the spectral integrality but neglect the contextual information among adjacent bands. In this paper, we propose to use the long short-term memory (LSTM) model with an end-to-end architecture for the HSI classification. Moreover, considering the high dimensionality of hyperspectral data, two novel grouping strategies are proposed to better learn the contextual features among adjacent bands. Compared with the traditional band-by-band strategy, the proposed methods prevent a very deep network for the HSI. In the experiments, two benchmark HSIs are utilized to evaluate the performance of proposed methods. The experimental results demonstrate that the proposed methods can yield a competitive performance compared with existing methods.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Long Short-Term Memory (LSTM)

- Recurrent Neural Network (RNN)

- Deep learning

- Feature extraction

- Hyperspectral image classification

1 Introduction

Different from the conventional image which only covers the human visual spectral range with RGB bands, hyperspectral image (HSI) can cover a much larger spectral range with hundreds of narrow spectral bands. It has been widely used in urban mapping, forest monitoring, environmental management and precision agriculture [1]. For most of these applications, HSI classification is a fundamental task, which predicts the class label of each pixel in the image.

Compared with traditional classification problems [12,13,14, 16, 17], HSI classification is more challenging due to the curse of dimensionality [7], which is also known as the Hughes phenomenon [9]. In order to alleviate this problem, dimensionality reduction methods are proposed, which can be divided into feature selection [3] and feature extraction (FE) [2] methods. The main purpose of feature selection is to preserve the most representative and crucial bands from the original dataset and discard those making no contribution to the classification. By designing suitable criteria, feature selection methods can eliminate redundancies among adjacent bands and improve the discriminability of different targets. FE, on the other hand, is used to find an appropriate feature mapping to transform the original high-dimensional feature space into a low-dimensional one, where different objects tend to be more separable.

Witnessing the achievements by deep learning methods in the fields of computer vision and artificial intelligence [10, 11], a promising way to extract deep features for hyperspectral data has become a feasible option. In [5], Chen et al. introduce the concept of deep learning into HSI classification for the first time, using a multilayer stacked autoencoders (SAEs) to extract deep features. After the pre-training stage, the deep networks are then fine-tuned with the reference data through a logistic regression classifier. In a like manner, a deep belief networks (DBNs) based spectral-spatial classification method for HSI is proposed in [6], where both the single layer restricted Boltzmann machine and multilayer DBN framework are analyzed in detail.

Despite the fact that these deep learning based methods possess better generalization ability compared with shallow methods, they mainly focus on the spectral integrality and directly classify the image in the whole feature space. However, the pixel vectors in the HSI are also sequential data, where the contextual information among adjacent bands is discriminative for the recognition of different objects.

To overcome the aforementioned drawbacks, we propose to use the long short-term memory (LSTM) model to extract spectral features, which is an updated version of recurrent neural networks (RNNs). Considering the high dimensionality of hyperspectral data, two novel grouping strategies are proposed to better learn the contextual features among adjacent bands. The major contributions of this study are summarized as follow.

-

1.

As far as we know, it is the first time that an end-to-end architecture for the HSI classification with the LSTM is proposed, which takes the contextual information among adjacent bands into consideration.

-

2.

Two novel grouping strategies are proposed to better learn the contextual features among adjacent bands for the LSTM. Compared with the traditional band-by-band strategy, proposed methods prevent a very deep network for the HSI.

The rest of this paper is organized as follows. Section 2 describes the spectral classification with the LSTM in detail. The information of data sets used in this study and the experimental results are given in Sect. 3. Conclusions and other discussions are summarized in Sect. 4.

2 Spectral Classification with LSTM

In this Section, we will first make a brief introduction to RNNs and the LSTM. Then, strategies for processing the spectral information with the LSTM are presented.

2.1 RNNs

RNNs [15] are important systems for processing sequential data, which allow cyclical connections between neural activations at different time steps.

The architecture of a recurrent neural network is shown as Fig. 1 Given a sequence of values \(x^{\left( 1\right) },x^{\left( 2\right) },\ldots ,x^{\left( \tau \right) }\), apply the following update equations for each time step from \(t=1\) to \(t=\tau \).

where \(b_a\) and \(b_o\) denote bias vectors. U, V and W are the weight matrices for input-to-hidden, hidden-to-output and hidden-to-hidden connections, respectively. \(x^{\left( t\right) }\), \(h^{\left( t\right) }\) and \(o^{\left( t\right) }\) are the input value, hidden value and output value at time t, respectively. The initialization of \(h^{\left( 0\right) }\) in (1) is specified with Gaussian values.

From (1) we can see that the hidden value of RNNs is determined by both the input signal at the current time step and the hidden value at the previous time step. In this manner, both the contextual information and the underlying pattern of the sequential data can be discovered. For the classification task, the softmax function can be added at the last time step to calculate the probability that the input data belongs to the ith category.

where \(\theta \) and b are the weight matrix and bias vector, respectively. k is the number of classes. The loss function of the whole network can be defined as

where \(y_i\) and \(\hat{y}_i\) denote the label and predicted label of the ith data, respectively. m is number of training samples. The optimization of a RNN can be accomplished by the mini-batch stochastic gradient descent with the back-propagation through time (BPTT) algorithm [19].

2.2 LSTM

The main challenge when training the RNN is the long-term dependencies that gradients tend to either vanish or explode during the back-propagation phase. To mitigate this problem, a gated RNN called LSTM is proposed in [8]. The core component of the LSTM is the memory cell which replaces the hidden unit in traditional RNNs. As shown in Fig. 2, there are four main elements in the memory cell, including an input gate, a forget gate, an output gate and a self-recurrent connection. The forward propagation of the LSTM for time step t is defined as follows.

Input gate:

Forget gate:

Output gate:

Cell state:

LSTM output:

where \(W_i\), \(W_f\), \(W_o\), \(W_c\), \(U_i\), \(U_f\), \(U_o\) and \(U_c\) are weight matrices. \(b_i\), \(b_f\), \(b_o\) and \(b_c\) are bias vectors. \(\sigma \left( x\right) =1/\left( 1+\mathrm{exp}\left( -x\right) \right) \) is the sigmoid function and \(\odot \) denotes the dot product.

Similar to traditional RNNs, the LSTM network can be trained by the mini-batch stochastic gradient descent with the BPTT algorithm. Refer to [8] for more detailed descriptions.

2.3 The Proposed Band Grouping Based LSTM Algorithm

Previous literatures have shown that the deep architecture possesses better generalization ability when dealing with the complicated spectral structure [5, 6]. While existing methods focus on the integrality of spectra, the LSTM network pays more attention to the contextual information among adjacent sequential data. Therefore, how to divide the hyperspectral vector into different sequences in a proper way is crucial to the performance of the network. A natural idea is to consider each band as a time step and input one band at a time. However, hyperspectral data usually has hundreds of bands, making the LSTM network too deep to train in such a circumstance. Thus, a suitable grouping strategy is needed.

Let n be the number of bands and \(\tau \) be the number of time steps in the LSTM. Then the sequence length of each time step is defined as \(m=floor\left( n/\tau \right) \), where \(floor\left( x\right) \) denotes rounding down x. For each pixel in the hyperspectral image, let \(z=\left[ z_1,z_2,\ldots ,z_i,\ldots ,z_n\right] \) be the spectral vector, where \(z_i\) is the reflectance of the ith band. The transformed sequences are then denoted by \(x=\left[ x_1,x_2,\ldots ,x_i,\ldots ,x_\tau \right] \) , where \(x_i\) is the sequence at the ith time step. In what follows, we introduce two grouping strategies proposed in this paper.

Grouping Strategy 1: Divide the spectral vector into different sequences according to the spectral order:

where \(x^{\left( i\right) }\) is the sequence at time i. As shown in Fig. 3(a), strategy 1 makes the signals inside a group continuous without any intervals and each group concentrates on a narrow spectral range. The spectral distance between different time steps will be relatively longer under such circumstances.

Grouping Strategy 2: Divide the spectral vector with a short interval:

Compared to strategy 1, each group in this case will cover a larger spectral range and the spectral distance between different time steps will be much shorter, as shown in Fig. 3(b).

After grouping the spectral vector z into different sequences \(x^{\left( 1\right) },\ldots ,x^{\left( \tau \right) }\), the LSTM network can be utilized to extract the contextual features among adjacent spectra. A fully connected (FC) layer and a softmax layer are added following the LSTM to accomplish the image classification. The complete spectral classification framework is illustrated as Fig. 4.

3 Experiment Results and Analysis

3.1 Data Description

In our experiments, two benchmark hyperspectral data sets, including the Pavia University and Indian Pines, are utilized to evaluate the performance of the proposed method.

The first data set is acquired by the Reflective Optics Systems Imaging Spectrometer (ROSIS) sensor over the Pavia University, northern Italy. This image consists of 103 spectral bands with 610 \(\times \) 340 pixels and it has a spectral coverage from 0.43 \(\upmu \)m to 0.86 \(\upmu \)m and a spatial resolution of 1.3 m. The training and test set are listed in Table 1.

The second data set is gathered by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over the Indian Pines test site in Northwestern Indiana. After the removal of the water absorption bands, the image consists of 200 spectral bands with 145 \(\times \) 145 pixels. It has a spectral coverage from 0.4 \(\upmu \)m to 2.5 \(\upmu \)m and a spatial resolution of 20 m. The training and test set are listed in Table 2.

All the experiments in this paper are randomly repeated 30 times with different random training data. The overall accuracy (OA) and Kappa coefficient [1] are utilized to quantitatively estimate different methods. Both the average value and the standard deviation are reported. The experiments in this paper are implemented with an Intel i7-5820K 3.30-GHz processor with 32 GB of RAM and a NVIDIA GTX1080 graphic card.

3.2 Analysis About the LSTM

In this subsection, we first evaluate two grouping strategies proposed in this paper with different number of time steps. As shown in Fig. 5, strategy 2 outperforms strategy 1 on both data sets. The reason behind this phenomenon lies on two aspects. First, the sequence divided by strategy 2 covers a wider spectral range compared with strategy 1, which means more abundant spectral information is fed into the LSTM cell at each time step in the case of strategy 2. Second, the spectral distance between different time steps is much shorter in strategy 2. Under such a circumstance, it is easier for the LSTM to learn the contextual features among adjacent spectral bands. Besides, as the number of time steps increases, the OA has a trend of rising first then getting steady or decreasing. This result shows that a too deep architecture may not be suitable for the LSTM to extract spectral features. For all the data sets in this paper, we set the number of time steps as 3. The number of neurons in the FC layer is set as 128.

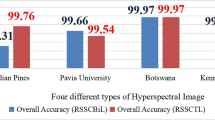

3.3 Classification Results

In this subsection, we will report the classification results of the proposed methods along with other approaches, including raw (classification with original spectral features using RBF-SVM), PCA (classification with first 20 PCs using RBF-SVM) and SAE [5] (spectral classification with SAE). We use the LibSVM [4, 18] for the SVM classification in our experiments. The range of the regularization parameters for the five-fold cross-validation is from \(2^{8}\) to \(2^{10}\). The LSTM is implemented under Theano 0.8.2 and other experiments in this paper are carried out under MATLAB 2012b. The classification maps of different methods are shown in Figs. 6 and 7 and the quantitative assessment is shown in Tables 3 and 4.

As shown in Tables 3 and 4, the LSTM with traditional band-by-band input fails to get a high accuracy and performs even worse than shallow methods in both data sets. By contrast, the LSTM with proposed grouping strategies yields better results and the overall accuracy can be improved about 5% to 13% on different data sets. The main reason here is that the band-by-band strategy would generate a too deep network and may result in information loss during the recurrent connection. Take the Pavia University data set for example. Since the number of time steps in this case will reach 103, after unfolding the LSTM, the depth of the network will also be 103, making it very hard for the network’s training. In general, the LSTM with proposed strategy 2 achieves the best results compared with other spectral FE methods.

4 Conclusions

In this paper, we have proposed a band grouping based LSTM algorithm for the HSI classification. The proposed method has the following characteristics. (1) Our method takes the contextual information among adjacent bands into consideration, which is ignored by existing methods. (2) Two novel grouping strategies are proposed to better train the LSTM. Compared with the traditional band-by-band strategy, proposed methods prevent a very deep network for the HSI and yield better results.

Since the proposed method mainly focuses on the deep spectral FE, our future work will try to take the deep spatial features into consideration.

References

Bioucas-Dias, J.M., Plaza, A., Camps-Valls, G., Scheunders, P., Nasrabadi, N., Chanussot, J.: Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 1(2), 6–36 (2013)

Bruce, L.M., Koger, C.H., Li, J.: Dimensionality reduction of hyperspectral data using discrete wavelet transform feature extraction. IEEE Trans. Geosci. Remote Sens. 40(10), 2331–2338 (2002)

Chang, C.I., Du, Q., Sun, T.L., Althouse, M.L.: A joint band prioritization and band-decorrelation approach to band selection for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 37(6), 2631–2641 (1999)

Chang, C.C., Lin, C.J.: LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. 2(3), 389–396 (2011)

Chen, Y., Lin, Z., Zhao, X., Wang, G., Gu, Y.: Deep learning-based classification of hyperspectral data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 7(6), 2094–2107 (2014)

Chen, Y., Zhao, X., Jia, X.: Spectral-spatial classification of hyperspectral data based on deep belief network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 8(6), 1–12 (2015)

Donoho, D.L.: High-dimensional data analysis: the curses and blessings of dimensionality. AMS Math Chall. Lect. 1, 32 (2000)

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997)

Hughes, G.: On the mean accuracy of statistical pattern recognizers. IEEE Trans. Inf. Theory 14(1), 55–63 (1968)

Ji, S., Xu, W., Yang, M., Yu, K.: 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 35(1), 221–31 (2013)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25(2), 2012 (2012)

Liu, T., Gong, M., Tao, D.: Large-cone nonnegative matrix factorization. IEEE Trans. Neural Netw. Learn. Syst. 28(9), 2129–2142 (2016)

Liu, T., Tao, D.: Classification with noisy labels by importance reweighting. IEEE Trans. Pattern Anal. Mach. Intell. 38(3), 447–461 (2016)

Liu, T., Tao, D., Song, M., Maybank, S.J.: Algorithm-dependent generalization bounds for multi-task learning. IEEE Trans. Pattern Anal. Mach. Intell. 39(2), 227–241 (2017)

Rumelhart, D.E., Hinton, G.E., Williams, R.J.: Learning representations by back-propagating errors. Nature 323, 533–536 (1986)

Tao, D., Li, X., Wu, X., Maybank, S.J.: General tensor discriminant analysis and gabor features for gait recognition. IEEE Trans. Pattern Anal. Mach. Intell. 29(10), 1700–1715 (2007)

Tao, D., Li, X., Wu, X., Maybank, S.J.: Geometric mean for subspace selection. IEEE Trans. Pattern Anal. Mach. Intell. 31(2), 260–274 (2009)

Tao, D., Tang, X., Li, X., Wu, X.: Asymmetric bagging and random subspace for support vector machines-based relevance feedback in image retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 28(7), 1088–1099 (2006)

Werbos, P.J.: Backpropagation through time: what it does and how to do it. Proc. IEEE 78(10), 1550–1560 (1990)

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China under Grants U1536204, 60473023, 61471274, and 41431175, China Postdoctoral Science Foundation under Grant No.2015M580753.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Xu, Y., Du, B., Zhang, L., Zhang, F. (2017). A Band Grouping Based LSTM Algorithm for Hyperspectral Image Classification. In: Yang, J., et al. Computer Vision. CCCV 2017. Communications in Computer and Information Science, vol 772. Springer, Singapore. https://doi.org/10.1007/978-981-10-7302-1_35

Download citation

DOI: https://doi.org/10.1007/978-981-10-7302-1_35

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7301-4

Online ISBN: 978-981-10-7302-1

eBook Packages: Computer ScienceComputer Science (R0)