Abstract

Monocular dense reconstruction plays more and more important role in AR application. In this paper, we present a new reconstruction system, which combines the Direct Sparse Odometry (DSO) and dense reconstruction into a uniform framework. The DSO can successfully track and build a semi-dense map even in low texture environment. The dense reconstruction is built on the fast superpixel segmentation and location consistency. However, a big gap between the semi-dense map and the dense reconstruction still needs to be bridged. To this end, we develop several elaborate methods including map points selection strategy, container for data sharing, and coordinate system transforming. We compare our system with a state-of-the-art monocular dense reconstruction system DPPTAM. The comparison experiments run on the public monocular visual odometry dataset. The experimental results show that our system has better performance and can run robustly, effectively in indoor and outdoor scenarios.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Monocular SLAM

- Dense reconstruction

- Map points selection

- Shared container

- Coordinate system transforming

1 Introduction

More and more AR applications introduce SLAM for camera tracking. However, only tracking camera position is still not sufficient for a satisfactory AR application, because the virtual model cannot have real interactions with real scene. For example, current AR applications always use the scene as 2D background, and the virtual model is levitated above the scene. This extremely influences the user experience.

For more realistic user experience, the 3D model of scene should be reconstructed. Thus, both scene and virtual model live in a 3D space, and they can interact smoothly.

Some dense reconstruction methods based on SLAM are proposed. For example, KinectFusion [7] and ElasticFusion [12] use the depth data from Kinect to track the 3D posture of the sensor and reconstruct the geometric 3D model of the physical scene in real time. However, the extra sensors need more cost, computation resource, and more power. Some monocular camera methods are also proposed. For example, DTAM [8] proposes a dense model to track and achieves real-time performance by using current commodity GPU. However, it is not suitable for AR application, because AR application usually runs on mobile device. DPPTAM [1] is a direct monocular dense reconstruction system based on single CPU, which has impressive results in some indoor scenarios. But as for some outdoor scenarios, especially when the direction of the camera movement is relatively large, it will lose camera tracking.

Therefore, we propose a new dense reconstruction system, by combing the Direct Sparse Odometry (DSO) [3] and dense reconstruction pipeline. DSO has robust performance for camera tracking, low computation cost and very precise optimization mechanism. For example, it can successfully track camera even in low texture or dark scene. Furthermore, it can also generate a semi-dense map and provide sufficient map points for further dense reconstruction. However, the DSO is not developed for dense reconstruction, there are still some big gaps between semi-map and dense reconstruction. First, we cannot distinguish which point to use because DSO generates four types of points in each keyframe. Second, it costs much memory to save all information of each keyframe. Simultaneously, we should keep important content after the shared data exchange. Third, the transformation between the image coordinate system and the camera coordinate system may have difference. Therefore, we design some elaborate strategies to bridge these gaps. We first design a map point selection in DSO. At the same time a container is proposed to exchange the shared data between DSO and dense mapping thread. We also unify the same coordinate system. We compare our algorithm with DPPTAM in a public monocular visual dataset. The results show that our algorithm is more robust both in outdoor and indoor environments.

2 Related Work

Several dense reconstruction methods have been proposed, which can be roughly divided into three categories. The first one is based on depth camera. KinectFusion [7] fuses the depth data streamed from a Kinect camera into a single global implicit surface model. Kitinuous [11] is a complete three-dimensional reconstruction system, which combines the loop closure detection and the loop optimization. ElasticFusion [12] can reconstruct surfel-based maps of room scale environments with a RGB-D camera. Besides, they both use the iterative closest point algorithm to calculate the camera pose. These algorithms need much computation resource. GPU support is always used in general case to make system real-time.

The second category is based on GPU. Methods in this category calculate every pixel to recover the structure and consequently have to use GPU to accelerate the computation. DTAM [8] refers to dense tracking and mapping in real-time, which based on direct tracking. It is very robust to feature deletion and image blurring. However, since DTAM recovers dense depth maps for each pixel and adopts global optimization, the computational complexity is very huge. Even with GPU acceleration, the efficiency of the expansion is still low. REMODE [9] refers to probabilistic, monocular dense reconstruction in real time which combines Bayesian estimation and recent development on convex optimization to get a more accurate depth map. The approach runs on a CUDA-based laptop computer.

The third category is based on CPU. But the dense reconstruction algorithm based on CPU is a big challenge. Fastfusion [10] is a pure reconstruction algorithm which can fully generates mesh map and runs in a CPU. INTEL instruction set is used to accelerate calculation. However, the algorithm must rely on rotation and translation matrix which are generated by a SLAM system or visual odometry. At the same time the accurate depth value is needed first by a RGB-D camera before reconstruction. In Greene et al. [6] proposed a multi-resolution depth estimation and spatial smoothing process to determine the scale of the different texture. This approach increases the reconstruction density and quality, and saves more computational resource simultaneously. DPPTAM [1] refers to dense piecewise planar tracking and mapping from a monocular sequence. Alejo Concha et al. made the assumption that homogeneous-color regions belong to approximately planar areas. And then they combined low texture planar areas of the superpixel segmentation with semi-dense depth map. The highly textured image areas in DPPTAM are mapped using standard direct mapping techniques in [4].

3 DSO-Dense Reconstruction System

3.1 System Review

Our system consists of three parts. The first part is the DSO system, we can call it as the front end. It provides camera positions, key frames and semi-dense maps to the system. The second part is the dense reconstruction pipeline, which consists of super-pixel consistency, robust plane estimation, dense mapping and dense map. This part is learned from DPPTAM and is called as the back end. However, because the DSO is not developed for dense reconstruction, the first two parts cannot directly be combined. Therefore, a bridge part is necessary, which is the third part of our system. In this part, we design a map point selection strategy to choose those map points that are suitable for determining the positional relationship between the points and the contour. We also design a container that extracts data from the front end, and then transfers these data to the backend. Furthermore, due to the difference of coordinate system between front end and back end, our bridge part also transforms the coordinate system for them (Fig. 1).

3.2 The Front End

When a new frame is coming, high-gradient points are extracted and the photometric error between a reference frame and a target frame is calculated. The weighted sum of squared differences (SSD) is calculated over a small neighborhood of pixels. Then the full photometric error over all frames and points is counted [3]. In order to obtain a more accurate camera position, a sliding window with 7 active keyframe is kept and the total photometric error is optimized in this sliding window using the Gauss-Newton algorithm. The keyframe creation strategy of [3] is used to keep important messages through all over the frames. Three criteria are considered to create keyframe:

Equation 1 is measured by the mean square optical flow when the field of view changes. \(\varvec{p}\), \(\varvec{{p}^{'}}\) represents for extracted points from the last keyframe to the latest frame, respectively. Equation 2 is measured by the mean flow without rotation when camera translation causes occlusions and disocclusions. In this situation, f may be small. \(\varvec{{{p}_{t}}^{'}}\) represents for the warped point position with rotation matrix. Equation 3 is measured by the relative brightness factor between two frames when the camera exposure time changes significantly, \({t}_{i}, {t}_{j}\) represent for the exposure times of the images and \({a}_{i}, {a}_{j}\) represent for the brightness transfer function parameters. A new keyframe is extracted when \({\omega }_{f}f+{\omega }_{{f}_{t}}{f}_{t}+{\omega }_{a}a>1\) and \({f}, {f}_{t}, a\) represent the relative weight between three indicators.

Keeping all keyframe data in the system seems unreality because of the limit of the memory. So the marginalization using the schur complement is needed. After marginalization we prepare the keyframe image, camera pose, camera parameters and semi-dense points into a data buffer which is constituted by a list container. Our container makes sure that the DSO thread and dense mapping thread can exchange data freely (see Sect. 3.5).

After selecting keyframes, the map points are formed by estimating the depth of high-gradient points in keyframes. A point in semi-dense map is defined as the point where the source pixels ray impinges on the surface. And the inverse depth of a map point is estimated by a Gaussian framework. The original DSO only generates a sparse map, which is not sufficient for the following dense reconstruction. Therefore, two strategies including 8-pixel residual pattern [3] and keeping more active points in the sliding window are used to make our result denser. In particular, marginalization points and optimization points in the sliding window are taken into this strategy.

3.3 The Back End

In this section, the exchanged data from the container is already gotten, we use a graphics-based segmentation method in [5]. As for the pixel gradient in an image, its always existing where color suddenly changed on the edge of the object. So we can determine the position relation between semi-point clouds and superpixel contours as follow:

where Eq. 4 denotes the standard pinhole model [2]. \(\varvec{{P}_{i}}\) denotes the 3D location. \({\lambda }_{i}\) denotes the depth constant. K denotes the camera parameters. \(\varvec{{p}_{i}}\) denotes the 2d position in the image. \(\varvec{{S}_{i}}\) in Eq. 5 denotes the superpixel in an image. Threshold \(\varepsilon \) denotes the neighbor eight pixels around the position of the projection point. If the distance is less than \(\varepsilon \), then the projection point is belong to the superpixel contour. The plane \({\varPi }\) is estimated by the points and contours which are defined in the previous paragraph. Every contour is calculated in the image. Singular value decomposition (SVD) and random sample consensus (RANSAC) are used to fit a robust plane similar to [1]. Three evaluation criteria are proposed to judge the quality of the estimated plane. Normalized residuals test uses the distance consistency ratio.

where the molecular stands for distance between the 3D points to the plane. The denominator stands for the distance between the 3D points to themselves, and the threshold \(\xi \) is −0.05. Degenerated cases exclude error contours by solving the degenerate rank in the SVD. Active Search is a very important part to reduce the error between 3D contours and 2D superpixels. After superpixel segmentation, we cannot distinguish which superpixel corresponds to the contour in a single view because every contour may have at least two neighboring superpixels. So the re-projection error between the re-projected contour and the contours of the potential matches in neighbor frames is calculated for accurate search. But low overlapping in the re-projection is rejected. Then a global energy function which contains three terms is put forward to dense mapping [1].

\({\omega }_{1}\), \({\omega }_{2}\) represent for relative importance between the photometric, Manhattan/piecewise-planar and smoothness costs.

Color difference between the reference image and the set of short-baseline images is calculated for photometric error. As for each pixel \(\varvec{v}\) in image \(\varvec{{I}_{r}}\), it is then backprojected at an inverse distance \(\rho \) and projected in other close image \(\varvec{{I}_{j}}\).

The preceding index item is the Huber norm of the gradient of the inverse depth map where \(\beta \) is a constant. The latter part decreases the regularization strength across superpixel contours.

\({{\rho }_{\tau }}\) represents for the inverse depth prior of superpixels. The third constraint is measured through distance between each point and the estimated planar prior \({\rho }_{\tau }\). In our strategy there is a situation where the keyframe is extracted with a small parallax (See the second criterion in Sect. 3.2). So the approach in [1] is used to reject the large error areas. The informative index of an area decides whether we should discard our candidate areas or not. After then, our superpixel contours become the robust, accurate dense maps. For convenience, we display our dense map in world coordinate and then combine with semi-dense map in Sect. 3.2 directly.

3.4 Bridge Part

Map Points Selection. DSO has candidate point activation to stabilize the points number across all active frames combined. It then generates four types of points: marginalization points, hessian points, immature points and hessian-outlier points. Marginalization points are generated by schur complement. Some candidate points need to be activated and replace the marginalized points at the same time. Hessian points are active points in DSO, which are used in windowed optimization [3]. Immature points are the original candidate points for roughly camera tracking. They initialize the depth of points once the points are activated. Hessian-outlier points are potential outliers which are removed by searching along the epipolar line during camera tracking. If we put all four types of points to dense mapping thread, we may get a big reconstruction error in our system. Taking into account the different properties of the four kinds of points, we propose a map points selection strategy (see Sect. 3.5).

Container. When DSO generates a keyframe we cannot pass data to dense mapping thread im-mediately, because dense mapping thread may be running. So using container to keep keyframe data is very necessary. But if we keep all keyframe information, it will progressive increase the cost in our system. After then the system memory will be crashed. So the deleting and updating mechanisms of container must be applied to reduce the burden of the system. Simultaneously, when our dense mapping system thread runs end in one time, we should exchange the contain data to this thread.

Coordinate Systems. We observed that the coordinate systems in DSO and the dense reconstruction are different. As shown in Fig. 2, where the X axis position of the point \(\varvec{{p}^{'}}\) become the opposite number of the point \(\varvec{p}\). So if we combine them directly, the dense reconstruction results will separate from other DSO semi-dense results. Thus we cannot get a perfect final reconstruction result. Therefore, in the bridge part, the camera position and map points position are also transformed from the DSO coordinate system to the dense reconstruction coordinate system.

3.5 Implementation Details

According to Sect. 3.4, DSO has four types of points in each keyframe, and among them the first two types of points are suitable for generating the denser scene. Because they are more stable. Although increasing the density has little benefit in terms of accuracy or robustness, denser point clouds have a certain impact on position determination between 2D points and contours as well as plane estimation. For trade-off speed and efficiency, we finally choose 8000 active points across all active frames in this paper. Our list container includes 5 list structures. An index id, a gray image, a rotation matrix, a translation matrix and accurate semi-dense point clouds of the keyframe are contained in each structure. A three-channel image from keyframe in DSO is prepared first; then, the rotation, translation matrix are transformed from world to camera; finally, semi-dense points are converted from camera coordinate to world coordinate. And then we put them together as one piece of the list container. Once dense mapping thread is over, we exchange data right away. Besides, when the coming data exceed the limit of a container, the oldest data is removed immediately according to the index id. Owning to this container, the memory consumption of the system is in a certain range and we also maintain the key information in recent keyframes.

4 Experimental Results

Our experiments is evaluated on the Monocular Visual Odometry Dataset published by TUM [3]. We first show the intermediate results about the location consistency between semi-dense points and superpixel contours (see Fig. 3).

The left image in Fig. 3 is the keyframe extracted in DSO (see Sect. 3.2). The third image in Fig. 3 is the semi-dense point cloud corresponding to the first one (see Sect. 3.3), the picture shows that those points almost corresponding to the border of the object in the image. The second image in Fig. 3 is the superpixel segmentation result in Sect. 3.3. In this picture, the planes in the real world are in the same color. The rightmost image in Fig. 3 is the dense reconstruction result after Sect. 3.5.

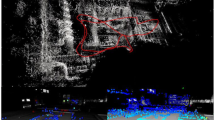

The white highlighted area is a part of our final dense result. These dense planes almost fill the black area in the third image in Fig. 3. Our results show that our algorithm can solve the gap between the high gradient points and the low texture areas. Second, we show our results which is compared with DPPTAM. In all our experiments we have used the same parameters which have been given in the DPPTAM (re-projection error threshold, overlapping threshold etc.). Simultaneously, our system runs for a camera resolution of \(640\times 480\) pixels. It must be mentioned that DSO relies on a global camera. So we just use the un-distortion image which is produced by DSO. And then put them into a ROS bag for DPPTAM. In most of datasets (sequence20, 26, 30 etc.), the camera position only changes a little after frame tracking initialization. But when the camera turns to another perspective and the rotation of camera is a little big, DPPTAM is weak to track the subsequent frame. And then the reconstruction pipeline will be broken. So some results of DPPTAM only have the reconstruction result at the beginning. Thus, we just show the compare results in the first few keyframes in Fig. 4. Our results in red color rebuild the low texture area successfully (see Fig. 4 right column). Figure 5 show our reconstruction of entire scene and the white high-light is our results. Our algorithm can reconstruct the streets, walls, and the surface of the building. Our algorithm runs on a 2.3 GHz Intel Core i5-6300HQ processor and 8.0 GB of RAM memory of a Lenovo Y700-15ISK laptop. Superpixel extraction using the algorithm in [1] takes around 0.18 s per image.

5 Conclusion

In this paper, we proposed a monocular dense reconstruction system that is effective and faster than the current state-of-the-art. We combined the state-of-the-art visual odometry and dense reconstruction pipeline by an elaborated bridge part. By comparison with state-of-the-art monocular method, our system can successfully run on the indoor, outdoor environments, even in textureless scenes. The experimental results indicated that the direct sparse odometry is well combined with the superpixel-based plane detection. Furthermore, our system is running on CPU and is realtime, therefore, it is possible to be applied in many fields.

References

Concha, A., Civera, J.: Dpptam: dense piecewise planar tracking and mapping from a monocular sequence. In: 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 5686–5693. IEEE (2015)

Delage, E., Lee, H., Ng, A.: Automatic single-image 3D reconstructions of indoor Manhattan world scenes. Robot. Res. 305–321 (2007)

Engel, J., Koltun, V., Cremers, D.: Direct sparse odometry. IEEE Trans. Pattern Anal. Mach. Intell. (2017)

Engel, J., Schöps, T., Cremers, D.: LSD-SLAM: large-scale direct monocular SLAM. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8690, pp. 834–849. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10605-2_54

Felzenszwalb, P.F., Huttenlocher, D.P.: Efficient graph-based image segmentation. Int. J. Comput. Vis. 59(2), 167–181 (2004)

Greene, W.N., Ok, K., Lommel, P., Roy, N.: Multi-level mapping: real-time dense monocular slam. In: 2016 IEEE International Conference on Robotics and Automation (ICRA), pp. 833–840. IEEE (2016)

Newcombe, R.A., Izadi, S., Hilliges, O., Molyneaux, D., Kim, D., Davison, A.J., Kohi, P., Shotton, J., Hodges, S., Fitzgibbon, A.: Kinectfusion: real-time dense surface mapping and tracking. In: 2011 10th IEEE international symposium on Mixed and augmented reality (ISMAR), pp. 127–136. IEEE (2011)

Newcombe, R.A., Lovegrove, S.J., Davison, A.J.: Dtam: dense tracking and mapping in real-time. In: 2011 IEEE International Conference on Computer Vision (ICCV), pp. 2320–2327. IEEE (2011)

Pizzoli, M., Forster, C., Scaramuzza, D.: Remode: probabilistic, monocular dense reconstruction in real time. In: 2014 IEEE International Conference on Robotics and Automation (ICRA), pp. 2609–2616. IEEE (2014)

Steinbrücker, F., Sturm, J., Cremers, D.: Volumetric 3D mapping in real-time on a CPU. In: 2014 IEEE International Conference on Robotics and Automation (ICRA), pp. 2021–2028. IEEE (2014)

Whelan, T., Kaess, M., Fallon, M., Johannsson, H., Leonard, J., McDonald, J.: Kintinuous: Spatially Extended Kinectfusion (2012)

Whelan, T., Leutenegger, S., Salas-Moreno, R., Glocker, B., Davison, A.: Elasticfusion: dense slam without a pose graph. In: Robotics: Science and Systems (2015)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Mao, L., Wu, J., Zhang, J., Chen, S. (2017). Monocular Dense Reconstruction Based on Direct Sparse Odometry. In: Yang, J., et al. Computer Vision. CCCV 2017. Communications in Computer and Information Science, vol 773. Springer, Singapore. https://doi.org/10.1007/978-981-10-7305-2_38

Download citation

DOI: https://doi.org/10.1007/978-981-10-7305-2_38

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7304-5

Online ISBN: 978-981-10-7305-2

eBook Packages: Computer ScienceComputer Science (R0)