Abstract

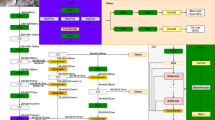

In the past decade, great progress has been made in general object detection based on deep convolutional neural networks. However, object detection in Unmanned Aerial Vehicle (UAV) imagery has received comparatively little attention. In this paper, a densely connected feature mining network is proposed for high-accuracy detection. Specifically, multi-scale predictions are used to enhance the feature representation of tiny vehicles. Furthermore, a streamlined one-stage detection network is used to achieve a satisfactory trade-off between speed and accuracy. Finally, an improved distance metric function is integrated into the prior clustering process, which can lead to better initial box locations before training. The proposed architecture is evaluated on the highly competitive UAV benchmark (UAVDT). The experimental results show that the proposed dense-darknet network achieves a competitive 42.03% mAP (mean Average Precision) and generalizes well to other UAV benchmarks.
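The abstract does not spell out the improved distance metric used for prior clustering, so the following is only a minimal sketch of the general idea, assuming the common baseline from YOLO-style detectors: k-means over ground-truth box sizes with the distance d = 1 − IoU. The function names `iou_wh` and `kmeans_priors` and the toy box sizes are hypothetical and are not taken from the paper.

```python
import random


def iou_wh(box, centroid):
    """IoU of two boxes given as (width, height), aligned at a shared corner."""
    w, h = box
    cw, ch = centroid
    inter = min(w, cw) * min(h, ch)
    union = w * h + cw * ch - inter
    return inter / union


def kmeans_priors(boxes, k, iters=100, seed=0):
    """Cluster (width, height) pairs into k priors using d = 1 - IoU.

    Assumption: this is the baseline metric, standing in for the paper's
    improved distance function, which the abstract does not define.
    """
    rng = random.Random(seed)
    centroids = rng.sample(boxes, k)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            # Assign each box to the centroid with the smallest 1 - IoU.
            best = min(range(k), key=lambda i: 1.0 - iou_wh(box, centroids[i]))
            clusters[best].append(box)
        new_centroids = []
        for i, members in enumerate(clusters):
            if members:
                mean_w = sum(w for w, _ in members) / len(members)
                mean_h = sum(h for _, h in members) / len(members)
                new_centroids.append((mean_w, mean_h))
            else:
                # Keep the old centroid if a cluster ends up empty.
                new_centroids.append(centroids[i])
        if new_centroids == centroids:
            break
        centroids = new_centroids
    # Sort by area so the smallest prior comes first.
    return sorted(centroids, key=lambda c: c[0] * c[1])


if __name__ == "__main__":
    # Toy box sizes in pixels: three tiny boxes and three larger ones.
    toy_boxes = [(10, 10), (12, 12), (11, 11), (50, 50), (52, 48), (48, 52)]
    print(kmeans_priors(toy_boxes, k=2))
```

An IoU-based distance is used instead of Euclidean distance because it is scale-invariant: a few pixels of error matter far more for a tiny vehicle than for a large one, which is the regime the abstract targets.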

Acknowledgments

This work is supported by the National Key Research and Development Plan under Grant No. 2016YFC0801005 and by Grant No. 2018JXYJ49.

Copyright information

© 2019 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Li, J., Wang, R., Ding, J. (2019). Tiny Vehicle Detection from UAV Imagery. In: Wang, Y., Huang, Q., Peng, Y. (eds) Image and Graphics Technologies and Applications. IGTA 2019. Communications in Computer and Information Science, vol 1043. Springer, Singapore. https://doi.org/10.1007/978-981-13-9917-6_36

DOI: https://doi.org/10.1007/978-981-13-9917-6_36

Publisher Name: Springer, Singapore

Print ISBN: 978-981-13-9916-9

Online ISBN: 978-981-13-9917-6

eBook Packages: Computer Science (R0)