Abstract

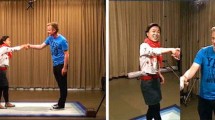

We have been collecting multimodal dialogue data [1] to support the development of multimodal dialogue systems that take a user’s non-verbal behaviors into account. We recruited 30 participants from the general public, with ages ranging from 20 to 50 and genders almost balanced. The consent form filled in by the participants was updated so that the data can be distributed to researchers, provided it is used for research purposes. After the data collection, eight annotators were divided into three groups and labeled every exchange with how interested the participant appeared to be in the current topic. The annotators’ labels do not always agree because they depend on subjective impressions. We also analyzed the disagreement among annotators and the temporal changes in the impressions of individual annotators.

Notes

- 1. These activities are being conducted by a working group (Human-System Multimodal Dialogue Sharing Corpus Building Group) under SIG-SLUD of the Japanese Society for Artificial Intelligence (JSAI).

- 2.

- 3.

- 4. Two annotators gave labels to the data of all three groups.

Acknowledgements

We thank the working group members who contributed to the annotation. Ms. Sayaka Tomimasu contributed to this project during the data collection. This work was partly supported by the Research Program of “Dynamic Alliance for Open Innovation Bridging Human, Environment and Materials” in Network Joint Research Center for Materials and Devices.

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Komatani, K., Okada, S., Nishimoto, H., Araki, M., Nakano, M. (2021). Multimodal Dialogue Data Collection and Analysis of Annotation Disagreement. In: Marchi, E., Siniscalchi, S.M., Cumani, S., Salerno, V.M., Li, H. (eds) Increasing Naturalness and Flexibility in Spoken Dialogue Interaction. Lecture Notes in Electrical Engineering, vol 714. Springer, Singapore. https://doi.org/10.1007/978-981-15-9323-9_17

DOI: https://doi.org/10.1007/978-981-15-9323-9_17

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-9322-2

Online ISBN: 978-981-15-9323-9

eBook Packages: Engineering, Engineering (R0)