Abstract

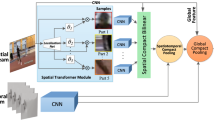

Action recognition in video sequences is an active research problem in Computer Vision. However, no significant efforts have been made for recognizing actions in hazy videos. This paper proposes a novel unified model for action recognition in hazy video using an efficient combination of a Convolutional Neural Network (CNN) for obtaining the dehazed video first, followed by extracting spatial features from each frame, and a deep bidirectional LSTM (DB-LSTM) network for extracting the temporal features during action. First, each frame of the hazy video is fed into the AOD-Net (All-in-One Dehazing Network) model to obtain the clear representation of frames. Next, spatial features are extracted from every sampled dehazed frame (produced by the AOD-Net model) by using a pre-trained VGG-16 architecture, which helps reduce the redundancy and complexity. Finally, the temporal information across the frames are learnt using a DB-LSTM network, where multiple LSTM layers are stacked together in both the forward and backward passes of the network. The proposed unified model is the first attempt to recognize human action in hazy videos. Experimental results on a synthetic hazy video dataset show state-of-the-art performances in recognizing actions.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

References

Kopf, J., et al.: Deep photo: model-based photograph enhancement and viewing. In: SIGGRAPH Asia (2008)

Fattal, R.: Single image dehazing. ACM Trans. Graph 27(3), 72:1–72:9 (2008)

Narasimhan, S.G., Nayar, S.K.: Interactive deweathering of an image using physical models. In: Workshop on Color and Photometric Methods in Computer Vision (2003)

Tan, R.: Visibility in bad weather from a single image. In: CVPR (2008)

He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. In: CVPR. IEEE (2009)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

Ren, Z., et al.: Bidirectional homeostatic regulation of a depression-related brain state by gamma-aminobutyric acidergic deficits and ketamine treatment. Biol. Psychiatry 80, 457–468 (2016)

Li, B., Peng, X., Wang, Z., Xu, J., Feng, D.: AOD-net: all-in-one dehazing network (2017)

Ren, W., et al.: Single image dehazing via multi-scale convolutional neural networks. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 154–169. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46475-6_10

Cai, B., Xu, X., Jia, K., Qing, C., Tao, D.: DehazeNet: an end-to-end system for single image haze removal. IEEE Trans. Image Process. 25(11), 5187–5198 (2016)

Santra, S., Mondal, R., Chanda, B.: Learning a patch quality comparator for single image dehazing. IEEE Trans. Image Process. 27(9), 4598–4607 (2018)

Hong, J., Cho, B., Hong, Y.W., Byun, H.: Contextual action cues from camera sensor for multi-stream action recognition. Sensors 19(6), 1382 (2019)

Crasto, N., Weinzaepfel, P., Alahari, K., Schmid, C.: MARS: motion-augmented RGB stream for action recognition. In: CVPR, pp. 7882–7891 (2019)

Hanson, A., PNVR, K., Krishnagopal, S., Davis, L.: Bidirectional convolutional LSTM for the detection of violence in videos. In: Leal-Taixé, L., Roth, S. (eds.) ECCV 2018. LNCS, vol. 11130, pp. 280–295. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-11012-3_24

Soomro, K., Zamir, A.R., Shah, M.: UCF101: a dataset of 101 human action classes from videos in the wild. Report no. CRCV-TR-12-01 (November 2012)

Borkar, K., Mukherjee, S.: Video dehazing using LMNN with respect to augmented MRF. In: ICVGIP 2018, pp. 42:1–42:9. ACM (2018)

Kong, Y., Fu, Y.: Human action recognition and prediction: a survey. arXiv:1806.11230 (2018)

Mukherjee, S., Biswas, S.K., Mukherjee, D.P.: Recognizing interactions between human performers by dominating pose doublet. Mach. Vis. Appl. 25(4), 1033–1052 (2014)

Mukherjee, S., Singh, K.K.: Human action and event recognition using a novel descriptor based on improved dense trajectories. Multimedia Tools Appl. 77(11), 13661–13678 (2018)

Laptev, I., Lindeberg, T.: Space-time interest points. In: ICCV (2003)

Vinodh, B., Sunitha Gowd, T., Mukherjee, S.: Event recognition in egocentric videos using a novel trajectory based feature. In: ICVGIP 2016, pp. 76:1–76:8 (2016)

Acknowledgement

The authors wish to thank the NVIDIA for providing a TITANX GPU which was used for conducting experiments related to this study.

Author information

Authors and Affiliations

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2021 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Tanneru, S.G., Mukherjee, S. (2021). Action Recognition in Haze Using an Efficient Fusion of Spatial and Temporal Features. In: Singh, S.K., Roy, P., Raman, B., Nagabhushan, P. (eds) Computer Vision and Image Processing. CVIP 2020. Communications in Computer and Information Science, vol 1377. Springer, Singapore. https://doi.org/10.1007/978-981-16-1092-9_3

Download citation

DOI: https://doi.org/10.1007/978-981-16-1092-9_3

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-1091-2

Online ISBN: 978-981-16-1092-9

eBook Packages: Computer ScienceComputer Science (R0)