Abstract

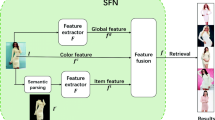

Clothes image retrieval is a fundamental task that has attracted research interest over the past decades. Because a single retrieval round often fails to achieve the best performance, we develop an interactive image retrieval system for fashion outfit search, in which natural language feedback provided by the user is used to capture compound and more specific details of clothing attributes. Our model is divided into two parts: a feature fusion part and a similarity metric learning part. The fusion module combines the feature vectors of the modifying description with the feature vectors of the image. The model is then optimized end to end via a matching objective, for which we adopt a contrastive learning strategy to learn the similarity metric. Extensive simulations have been conducted. The results show that, compared with other complex multi-modal models proposed in recent years, our work improves retrieval performance while keeping the model architecture simple.
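
The two-part design described in the abstract (fuse image and text features, then learn a similarity metric with a matching objective) can be illustrated with a minimal sketch. The abstract does not specify the fusion operator or the exact loss, so everything here is an assumption made for illustration: `fuse` uses plain concatenation followed by a linear map, `contrastive_loss` is a generic in-batch softmax loss over cosine similarities, and the `temperature` parameter and all function names are hypothetical rather than taken from the paper.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length (an all-zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def fuse(image_feat, text_feat, weights):
    """Hypothetical fusion: concatenate both features, then apply a linear map.

    `weights` holds one row per output dimension; each row has
    len(image_feat) + len(text_feat) entries.
    """
    joint = list(image_feat) + list(text_feat)
    return [sum(w * x for w, x in zip(row, joint)) for row in weights]


def contrastive_loss(queries, targets, temperature=0.1):
    """In-batch contrastive loss (assumed form): queries[i] should match targets[i].

    Every other target in the batch serves as a negative for query i.
    """
    q = [l2_normalize(v) for v in queries]
    t = [l2_normalize(v) for v in targets]
    total = 0.0
    for i, qi in enumerate(q):
        # Cosine similarity between query i and every target, scaled by temperature.
        logits = [sum(a * b for a, b in zip(qi, tj)) / temperature for tj in t]
        # Numerically stable negative log-softmax of the matching pair.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denom - logits[i]
    return total / len(q)


# Toy usage: fused queries that line up with their targets give a small loss.
fused = [fuse([1.0, 0.0], [0.0], [[1, 0, 0], [0, 1, 1]]),
         fuse([0.0, 0.5], [0.5], [[1, 0, 0], [0, 1, 1]])]
targets = [[1.0, 0.0], [0.0, 1.0]]
print(contrastive_loss(fused, targets))
```

In this sketch the fused query plays the role of the "reference image plus feedback" representation, and the targets stand in for candidate image features; a trained system would learn the fusion weights by minimizing the loss over many batches.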

Acknowledgement

This work is partially supported by the National Key Research and Development Program of China (2019YFC1521300), the National Natural Science Foundation of China (61971121), the Fundamental Research Funds for the Central Universities of China, and the T00120210002 of the Shenzhen Research Institute of Big Data.

Copyright information

© 2021 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Li, X., Rong, Y., Zhao, M., Fan, J. (2021). Interactive Clothes Image Retrieval via Multi-modal Feature Fusion of Image Representation and Natural Language Feedback. In: Zhang, H., Yang, Z., Zhang, Z., Wu, Z., Hao, T. (eds) Neural Computing for Advanced Applications. NCAA 2021. Communications in Computer and Information Science, vol 1449. Springer, Singapore. https://doi.org/10.1007/978-981-16-5188-5_41

DOI: https://doi.org/10.1007/978-981-16-5188-5_41

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-5187-8

Online ISBN: 978-981-16-5188-5

eBook Packages: Computer Science (R0)