Abstract

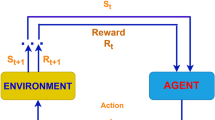

Smart transportation is crucial to citizens’ quality of life. A highly efficient dispatching system not only helps drivers raise their income but also shortens passengers’ waiting time. However, drivers’ experience-based cruising strategies cannot meet this requirement: with conventional strategies, taxi drivers struggle to find passengers efficiently, which wastes time and fuel. To address this problem, we construct a model of taxi cruising and passenger pickup from the perspective of drivers’ benefits. Using real taxi order data, we apply a deep Q-network within a reinforcement learning framework to find a strategy that reduces the cost drivers incur in finding passengers and improves their earnings. Finally, we demonstrate the effectiveness of our strategy by comparing it with a random-walk strategy over different time segments on both workdays and weekends.
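To make the reinforcement learning idea concrete, the following is a minimal sketch and not the authors' model. It assumes a hypothetical one-dimensional strip of five road cells, a fixed cruising cost per step, and a single pickup cell that pays a fare; all names and constants (`N_CELLS`, `CRUISE_COST`, `FARE`, `PICKUP_CELL`) are illustrative assumptions. It also swaps the deep Q-network for a plain Q-table, which keeps the example dependency-free while using the same Q-learning target that a deep Q-network is trained to regress toward.

```python
import random

# Hypothetical toy setup; none of these values come from the paper.
ACTIONS = (-1, 0, +1)   # move left, stay, move right
N_CELLS = 5             # assumed number of road cells
CRUISE_COST = 1.0       # assumed cost of one step without a passenger
FARE = 10.0             # assumed income from a pickup
PICKUP_CELL = 4         # assumed cell where a passenger is waiting


def step(cell, action):
    """Move the taxi one step and return (next_cell, reward, done)."""
    next_cell = min(max(cell + action, 0), N_CELLS - 1)
    if next_cell == PICKUP_CELL:
        return next_cell, FARE - CRUISE_COST, True
    return next_cell, -CRUISE_COST, False


def td_target(reward, next_q_values, done, gamma=0.9):
    """Q-learning target r + gamma * max_a' Q(s', a'); no bootstrap at episode end."""
    return reward if done else reward + gamma * max(next_q_values)


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning; a deep Q-network would replace the table with a neural network."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_CELLS)]
    for _ in range(episodes):
        cell, done, steps = 0, False, 0
        while not done and steps < 50:
            # Epsilon-greedy: explore with probability epsilon, otherwise act greedily.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[cell][i])
            next_cell, reward, done = step(cell, ACTIONS[a])
            target = td_target(reward, q[next_cell], done, gamma)
            q[cell][a] += alpha * (target - q[cell][a])
            cell, steps = next_cell, steps + 1
    return q


q_table = train()
```

In this sketch, setting `epsilon=1.0` makes the taxi choose every move uniformly at random, which is one simple way to picture a random-walk baseline of the kind the abstract compares against.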

Copyright information

© 2021 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Hua, Z., Li, D., Guo, W. (2021). A Deep Q-Learning Network Based Reinforcement Strategy for Smart City Taxi Cruising. In: Zhang, H., Yang, Z., Zhang, Z., Wu, Z., Hao, T. (eds) Neural Computing for Advanced Applications. NCAA 2021. Communications in Computer and Information Science, vol 1449. Springer, Singapore. https://doi.org/10.1007/978-981-16-5188-5_5

DOI: https://doi.org/10.1007/978-981-16-5188-5_5

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-5187-8

Online ISBN: 978-981-16-5188-5

eBook Packages: Computer Science, Computer Science (R0)