Abstract

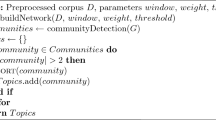

The most popular topic modelling algorithm, Latent Dirichlet Allocation, produces a simple set of topics. However, topics naturally exist in a hierarchy with larger, more general super-topics and smaller, more specific sub-topics. We develop a novel topic modelling algorithm, Community Topic, that mines communities from word co-occurrence networks to produce topics. The fractal structure of networks provides a natural topic hierarchy where sub-topics can be found by iteratively mining the sub-graph formed by a single topic. Similarly, super-topics can be found by mining the network of topic hyper-nodes. We compare the topic hierarchies discovered by Community Topic to those produced by two probabilistic graphical topic models and find that Community Topic uncovers a topic hierarchy with a more coherent structure and a tighter relationship between parent and child topics. Community Topic finds this hierarchy more quickly and allows for on-demand sub- and super-topic discovery, facilitating corpus exploration by researchers.
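
The idea of mining topics from a word co-occurrence network, and then re-mining the sub-graph of a single topic to obtain its sub-topics, can be illustrated with a minimal sketch. This is not the Community Topic implementation: the toy corpus, the function names `cooccurrence_network` and `mine_communities`, and the `min_weight` threshold are all assumptions made for illustration, and connected components over strong edges stand in for a real community detection algorithm. Only the sub-topic direction is shown.

```python
from collections import Counter, defaultdict
from itertools import combinations


def cooccurrence_network(docs):
    """Count how often each unordered word pair shares a document."""
    weights = Counter()
    for doc in docs:
        for u, v in combinations(sorted(set(doc)), 2):
            weights[(u, v)] += 1
    return weights


def mine_communities(weights, min_weight, words=None):
    """Stand-in community miner: connected components over strong edges.

    Only edges with weight >= min_weight are kept. If `words` is given, the
    network is first restricted to the sub-graph induced by those words.
    Words left without any strong edge are dropped.
    """
    adjacency = defaultdict(set)
    for (u, v), w in weights.items():
        if w < min_weight:
            continue
        if words is not None and (u not in words or v not in words):
            continue
        adjacency[u].add(v)
        adjacency[v].add(u)

    communities, seen = [], set()
    for start in sorted(adjacency):
        if start in seen:
            continue
        component, stack = set(), [start]
        while stack:  # depth-first traversal of one component
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(adjacency[node] - component)
        seen |= component
        communities.append(sorted(component))
    return communities


# Hypothetical toy corpus: four sports documents and two politics documents.
docs = [
    ["goal", "striker", "match"],
    ["goal", "striker", "league"],
    ["pitcher", "inning", "match"],
    ["pitcher", "inning", "league"],
    ["vote", "senate", "bill"],
    ["vote", "senate", "election"],
]

network = cooccurrence_network(docs)

# Top-level topics: communities of the whole network.
topics = mine_communities(network, min_weight=1)

# Sub-topics: re-mine the sub-graph formed by each single topic,
# this time keeping only the stronger edges.
sub_topics = {
    tuple(topic): mine_communities(network, min_weight=2, words=set(topic))
    for topic in topics
}

for topic in topics:
    print(topic, "->", sub_topics[tuple(topic)])
```

On this toy corpus the sports topic splits into a football-like and a baseball-like sub-topic, while generic words such as "match" and "league" fall out because they have no strong edge inside the sub-graph. Because each sub-graph is mined only when requested, a sketch like this also hints at why sub-topic discovery can be done on demand.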

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Austin, E., Trabelsi, A., Largeron, C., Zaïane, O.R. (2022). Hierarchical Topic Model Inference by Community Discovery on Word Co-occurrence Networks. In: Park, L.A.F., et al. Data Mining. AusDM 2022. Communications in Computer and Information Science, vol 1741. Springer, Singapore. https://doi.org/10.1007/978-981-19-8746-5_11

DOI: https://doi.org/10.1007/978-981-19-8746-5_11

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-8745-8

Online ISBN: 978-981-19-8746-5

eBook Packages: Computer Science, Computer Science (R0)