Abstract

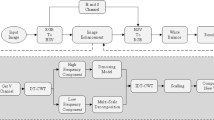

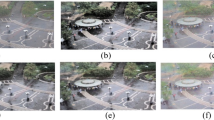

Frequency-domain information (e.g., from the Discrete Wavelet Transform and the Fast Fourier Transform) has been widely applied to Low-Light Image Enhancement (LLIE). However, existing frequency-based models operate primarily in a plain wavelet or Fourier space of the image and therefore under-use the global and local information available in each space. We found that wavelet frequency information is more sensitive to global brightness because of its low-frequency component, while Fourier frequency information is more sensitive to local details because of its phase component. To achieve a strong preliminary brightness enhancement by integrating spatial channel information with the low-frequency components of the wavelet transform, we introduce a channel-wise Mamba, which compensates for the limited ability of CNNs to model long-range dependencies and has lower complexity than Diffusion and Transformer models. In this work, we propose a novel Wavelet-based Mamba with Fourier Adjustment model called WalMaFa, consisting of a Wavelet-based Mamba Block (WMB) and a Fast Fourier Adjustment Block (FFAB). We employ an Encoder-Latent-Decoder structure to accomplish the end-to-end transformation. Specifically, WMB is adopted in the Encoder and Decoder to enhance global brightness, while FFAB is adopted in the Latent to fine-tune local texture details and alleviate ambiguity. Extensive experiments demonstrate that the proposed WalMaFa achieves state-of-the-art performance with fewer computational resources and faster speed. Code is available at: https://github.com/mcpaulgeorge/WalMaFa.
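The observation about the two frequency spaces can be illustrated with a small NumPy experiment. This is only a toy sketch under stated assumptions, not the WalMaFa implementation: the helper names `haar_dwt2` and `amplitude_and_unit_phase`, the random 8x8 test image, and the offset and gain values are all hypothetical choices made for illustration. It checks two elementary facts: a uniform brightness offset changes only the low-frequency sub-band of a one-level Haar wavelet transform, and a positive brightness gain changes only the Fourier amplitude while leaving the phase untouched.

```python
import numpy as np


def haar_dwt2(x):
    """One-level orthonormal 2-D Haar transform of an array with even height and width.

    Returns the low-frequency sub-band and a tuple of three detail sub-bands.
    """
    a, b = x[0::2, 0::2], x[0::2, 1::2]  # top-left, top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]  # bottom-left, bottom-right of each 2x2 block
    low = (a + b + c + d) / 2.0
    details = (
        (a - b + c - d) / 2.0,  # differences across columns
        (a + b - c - d) / 2.0,  # differences across rows
        (a - b - c + d) / 2.0,  # diagonal differences
    )
    return low, details


def amplitude_and_unit_phase(x):
    """Split the 2-D FFT of x into its amplitude and a unit-modulus phase term."""
    spectrum = np.fft.fft2(x)
    amplitude = np.abs(spectrum)
    # Dividing by the amplitude keeps only exp(i * phase); the floor avoids 0/0.
    unit_phase = spectrum / np.maximum(amplitude, 1e-12)
    return amplitude, unit_phase


rng = np.random.default_rng(0)
image = rng.random((8, 8))  # hypothetical stand-in for a grayscale image

# Wavelet view: a uniform brightness offset shows up only in the low-frequency band.
offset = 0.3
low, details = haar_dwt2(image)
low_bright, details_bright = haar_dwt2(image + offset)
print("low band shift:", float(np.mean(low_bright - low)))
print("detail bands unchanged:",
      all(np.allclose(p, q) for p, q in zip(details, details_bright)))

# Fourier view: a positive gain rescales the amplitude and leaves the phase as is.
gain = 2.0
amp, phase = amplitude_and_unit_phase(image)
amp_gain, phase_gain = amplitude_and_unit_phase(gain * image)
print("amplitude scaled by gain:", np.allclose(amp_gain, gain * amp))
print("phase unchanged:", np.allclose(phase_gain, phase))
```

In this toy setting, brightness information is concentrated in the wavelet low-frequency band and the Fourier amplitude, whereas the Fourier phase is unaffected by the brightness change. That is consistent with assigning brightness enhancement to a wavelet-based block and detail refinement to a Fourier-based block, although the actual blocks are learned modules rather than fixed transforms.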

J. Tan and S. Pei contributed equally.

Acknowledgements

The authors would like to thank the anonymous reviewers for their invaluable comments. This work was partially funded by the National Natural Science Foundation of China under Grant No. 61975124, State Key Laboratory of Computer Architecture (ICT, CAS) under Grant No. CARCHA202111, Engineering Research Center of Software/Hardware Co-design Technology and Application, Ministry of Education, East China Normal University under Grant No. OP202202, and Open Project of Key Laboratory of Ministry of Public Security for Road Traffic Safety under Grant No. 2023ZDSYSKFKT04.

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Tan, J., Pei, S., Qin, W., Fu, B., Li, X., Huang, L. (2025). Wavelet-Based Mamba with Fourier Adjustment for Low-Light Image Enhancement. In: Cho, M., Laptev, I., Tran, D., Yao, A., Zha, H. (eds) Computer Vision – ACCV 2024. ACCV 2024. Lecture Notes in Computer Science, vol 15475. Springer, Singapore. https://doi.org/10.1007/978-981-96-0911-6_10

DOI: https://doi.org/10.1007/978-981-96-0911-6_10

Publisher Name: Springer, Singapore

Print ISBN: 978-981-96-0910-9

Online ISBN: 978-981-96-0911-6

eBook Packages: Computer Science, Computer Science (R0)