Abstract

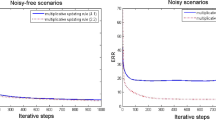

The accelerated proximal gradient (APG) method is a classical algorithm for nonnegative tensor decomposition. APG uses variable extrapolation to speed up the computation, but large-scale tensor decomposition still calls for more efficient algorithms. In this paper, we propose a doubly accelerated proximal gradient algorithm. Specifically, within the block coordinate descent framework, we apply extrapolation in both the inner and outer loops to accelerate the proximal gradient updates. In addition, the outer-loop acceleration is paired with a safe mode that promotes monotonic convergence. We conduct experiments on both synthetic and real-world tensors. The results demonstrate that the proposed algorithm outperforms state-of-the-art algorithms in running speed and accuracy.
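The abstract does not give the update rules, so the following is only a minimal sketch of the general idea for a third-order nonnegative CP model, not the paper's algorithm. It assumes a Nesterov-style momentum sequence for the inner extrapolation, a fixed outer extrapolation weight `beta`, and a safe mode that simply rejects a sweep whose relative error increases. The function names (`khatri_rao`, `unfold`, `apg_nnls`, `dapg_sketch`) and all parameter values are hypothetical.

```python
import numpy as np

def khatri_rao(B, C):
    # Column-wise Kronecker product: row (j*K + k) holds B[j, :] * C[k, :].
    R = B.shape[1]
    return (B[:, None, :] * C[None, :, :]).reshape(-1, R)

def unfold(X, mode):
    # Mode-n unfolding; the remaining modes keep their original (C) order.
    return np.moveaxis(X, mode, 0).reshape(X.shape[mode], -1)

def apg_nnls(XK, G, A0, n_inner=20):
    # Inner loop: accelerated projected gradient for
    #   min_{A >= 0} 0.5 * ||X_(n) - A K^T||_F^2,
    # given XK = X_(n) K and G = K^T K. The step size is 1 / L.
    L = np.linalg.norm(G, 2)  # Lipschitz constant of the gradient
    A, Y, t = A0.copy(), A0.copy(), 1.0
    for _ in range(n_inner):
        A_new = np.maximum(Y - (Y @ G - XK) / L, 0.0)  # gradient step + projection
        t_new = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        Y = A_new + ((t - 1.0) / t_new) * (A_new - A)  # inner extrapolation
        A, t = A_new, t_new
    return A

def rel_error(X, factors):
    A, B, C = factors
    X_hat = np.einsum('ir,jr,kr->ijk', A, B, C)
    return np.linalg.norm(X - X_hat) / np.linalg.norm(X)

def dapg_sketch(X, rank, n_outer=50, beta=0.5, seed=0):
    # Block coordinate descent over the three factors with an assumed
    # outer extrapolation weight `beta` and a reject-on-increase safe mode.
    rng = np.random.default_rng(seed)
    factors = [rng.random((s, rank)) for s in X.shape]
    start = [F.copy() for F in factors]  # starting points for the next sweep
    err = rel_error(X, factors)
    history = [err]
    for _ in range(n_outer):
        work = [F.copy() for F in start]
        for n in range(3):
            K = khatri_rao(*[work[m] for m in range(3) if m != n])
            work[n] = apg_nnls(unfold(X, n) @ K, K.T @ K, work[n])
        new_err = rel_error(X, work)
        if new_err <= err:
            # Accept the sweep, then extrapolate each factor (outer loop).
            start = [np.maximum(W + beta * (W - F), 0.0)
                     for W, F in zip(work, factors)]
            factors, err = work, new_err
        else:
            # Safe mode: discard the sweep and restart without extrapolation.
            start = [F.copy() for F in factors]
        history.append(err)
    return factors, history
```

Calling `dapg_sketch(X, rank=3)` on a nonnegative array `X` of shape `(I, J, K)` returns the three factor matrices and the relative-error history. Because a sweep is accepted only when the error does not increase, the recorded history is non-increasing by construction. The safe mode described in the paper may use a different acceptance rule.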



Acknowledgments

This work was supported by the State Key Laboratory of Robotics (2023-Z04) and the Natural Science Foundation of Liaoning Province (2022-BS-029). The author would like to thank Dalian University of Technology for the support of the experimental environment.


Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Wang, D. (2024). Doubly Accelerated Proximal Gradient for Nonnegative Tensor Decomposition. In: Le, X., Zhang, Z. (eds) Advances in Neural Networks – ISNN 2024. ISNN 2024. Lecture Notes in Computer Science, vol 14827. Springer, Singapore. https://doi.org/10.1007/978-981-97-4399-5_6


DOI: https://doi.org/10.1007/978-981-97-4399-5_6


Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-4398-8

Online ISBN: 978-981-97-4399-5

eBook Packages: Computer Science, Computer Science (R0)