Abstract

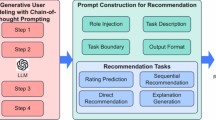

Generating user-friendly explanations of why an item is recommended has become increasingly common, largely owing to advances in language generation technology. Such explanations can enhance user trust and support more informed decision-making during online consumption. However, existing explainable recommendation systems mostly rely on small language models, and it remains unclear what impact replacing the explanation generator with recently emerging large language models (LLMs) would have. Can we expect unprecedented results? In this study, we propose LLMXRec, a simple yet effective two-stage explainable recommendation framework that aims to further improve explanation quality by employing LLMs. Unlike most existing LLM-based recommendation work, a key characteristic of LLMXRec is its emphasis on close collaboration between existing recommender models and LLM-based explanation generators. Specifically, by adopting several key fine-tuning techniques, including parameter-efficient instruction tuning and personalized prompting, LLMXRec generates controllable and fluent explanations for explainable recommendation. Most notably, we evaluate the effectiveness of the explanations from three different perspectives. Finally, we conduct extensive experiments on several benchmark recommender models and publicly available datasets. The experiments yield positive results in terms of effectiveness and efficiency and also uncover some previously unknown outcomes. To facilitate further exploration in this area, the full code and detailed original results are open-sourced at https://github.com/GodFire66666/LLM_rec_explanation.
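As a rough illustration of the two-stage idea, the sketch below shows how a recommendation produced by an upstream recommender might be turned into a personalized prompt for an LLM-based explanation generator. It is not the authors' implementation: the `UserProfile` class, the `build_explanation_prompt` function, the prompt wording, and the example titles are all hypothetical, and the call to the LLM itself is deliberately left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UserProfile:
    """Hypothetical container for the user data passed to the explainer."""
    user_id: str
    history: List[str]  # titles of items the user interacted with, oldest first


def build_explanation_prompt(user: UserProfile, recommended_item: str,
                             max_history: int = 5) -> str:
    """Assemble a personalized instruction prompt (assumed format, not the paper's)."""
    # Keep only the most recent interactions so the prompt stays short.
    recent = user.history[-max_history:] if max_history > 0 else []
    history_lines = "\n".join(f"- {title}" for title in recent)
    return (
        "Instruction: Explain in one or two sentences why the item below "
        "was recommended to this user, based on their interaction history.\n"
        f"Interaction history:\n{history_lines}\n"
        f"Recommended item: {recommended_item}\n"
        "Explanation:"
    )


# Stage 1 (assumed): some recommender model has already picked "Inside Out".
user = UserProfile(user_id="u1", history=["Toy Story", "Finding Nemo", "Up"])
# Stage 2: build the prompt that would be sent to the explanation generator.
prompt = build_explanation_prompt(user, "Inside Out", max_history=2)
print(prompt)
```

Keeping prompt construction separate from both the recommender and the LLM call reflects the framework's emphasis on letting an existing recommender and an explanation generator collaborate without being fused into one model.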

Acknowledgement

This research was supported by grants from the National Natural Science Foundation of China (Grants No. 62337001, 623B1020) and the Fundamental Research Funds for the Central Universities.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Luo, Y., Cheng, M., Zhang, H., Lu, J., Chen, E. (2024). Unlocking the Potential of Large Language Models for Explainable Recommendations. In: Onizuka, M., et al. Database Systems for Advanced Applications. DASFAA 2024. Lecture Notes in Computer Science, vol 14854. Springer, Singapore. https://doi.org/10.1007/978-981-97-5569-1_18

DOI: https://doi.org/10.1007/978-981-97-5569-1_18

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-5568-4

Online ISBN: 978-981-97-5569-1

eBook Packages: Computer Science, Computer Science (R0)