Abstract

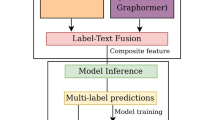

Hierarchical Text Classification (HTC) provides a systematic mechanism for categorizing text, serving diverse needs in text understanding and retrieval. A key issue in HTC is learning features for labels with a long-tail distribution by exploiting label relations. Most current HTC approaches focus on label structure and overlook the fine-grained semantics of long-tail labels. As a result, these infrequent but semantically rich labels are often modeled inadequately, which compromises overall classification accuracy. In this study, we propose S2-HTC (Hierarchical Text Classification via Fusing the Structural and Semantic Information), which performs hierarchical classification by combining the structural and semantic relations among labels with a balanced loss. Specifically, S2-HTC introduces the Label Semantic-Aware and Hierarchical Adjacency Matrix (LSA-HAM) to simultaneously capture and integrate the hierarchical and semantic associations of labels. To address the long-tailed label distributions inherent in HTC, S2-HTC adopts a balanced loss computation to efficiently represent low-level label features. Experimental results demonstrate that S2-HTC outperforms state-of-the-art approaches.
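The abstract does not spell out how LSA-HAM or the balanced loss is computed, so the following is only an illustrative sketch of the two ideas it names, not the authors' formulation. It assumes a convex combination (hypothetical weight `alpha`) of a parent-child adjacency matrix and the cosine similarity of label embeddings, and it swaps in the well-known effective-number class weighting, (1 - beta) / (1 - beta^n), as a stand-in for the balanced loss. All function names and parameters here are hypothetical.

```python
import math


def hierarchy_adjacency(num_labels, parent_child_edges):
    """Symmetric 0/1 adjacency with self-loops from (parent, child) index pairs."""
    adj = [[0.0] * num_labels for _ in range(num_labels)]
    for i in range(num_labels):
        adj[i][i] = 1.0
    for parent, child in parent_child_edges:
        adj[parent][child] = 1.0
        adj[child][parent] = 1.0
    return adj


def cosine(u, v):
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def fused_adjacency(adj, label_embeddings, alpha=0.5):
    """Assumed fusion rule: alpha * structure + (1 - alpha) * semantic similarity.

    Negative similarities are clipped to 0 so every entry stays in [0, 1].
    """
    n = len(adj)
    fused = []
    for i in range(n):
        row = []
        for j in range(n):
            sim = max(0.0, cosine(label_embeddings[i], label_embeddings[j]))
            row.append(alpha * adj[i][j] + (1.0 - alpha) * sim)
        fused.append(row)
    return fused


def class_balanced_weights(label_counts, beta=0.999):
    """Effective-number weights, rescaled to mean 1. Counts must be positive."""
    raw = [(1.0 - beta) / (1.0 - beta ** n) for n in label_counts]
    scale = len(raw) / sum(raw)
    return [w * scale for w in raw]


def weighted_bce(probs, targets, weights, eps=1e-12):
    """Per-label weighted binary cross-entropy, averaged over labels."""
    total = 0.0
    for p, t, w in zip(probs, targets, weights):
        total += -w * (t * math.log(p + eps) + (1 - t) * math.log(1.0 - p + eps))
    return total / len(probs)


# Toy hierarchy: label 0 is the parent of labels 1 and 2.
toy_adj = hierarchy_adjacency(3, [(0, 1), (0, 2)])
toy_embeddings = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
toy_fused = fused_adjacency(toy_adj, toy_embeddings, alpha=0.5)
toy_weights = class_balanced_weights([1000, 10, 10])
```

In this toy setup the sibling labels 1 and 2 share no edge in the hierarchy but receive a nonzero fused weight because their embeddings coincide, and the two rare labels receive larger loss weights than the frequent one.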

Yinghan Shen and Yu Yan are co-first authors. This research was supported by the Natural Science Foundation Program (Grant No. U21B2046 and 62172393).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Shen, Y., Yan, Y., Yin, D., Shen, H. (2024). S2-HTC: Hierarchical Text Classification via Fusing the Structural and Semantic Information. In: Onizuka, M., et al. Database Systems for Advanced Applications. DASFAA 2024. Lecture Notes in Computer Science, vol 14854. Springer, Singapore. https://doi.org/10.1007/978-981-97-5569-1_4

DOI: https://doi.org/10.1007/978-981-97-5569-1_4

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-5568-4

Online ISBN: 978-981-97-5569-1

eBook Packages: Computer Science, Computer Science (R0)