Abstract

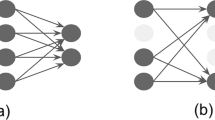

Deploying deep neural networks (DNNs) on IoT devices is becoming increasingly common because of privacy concerns. However, the constrained computational capabilities of IoT devices conflict with the large computational requirements of training. Transfer learning is commonly used to improve training efficiency in DNNs, but it relies heavily on fine-tuning, which is crucial for accuracy yet introduces additional computational cost. We propose an efficient fine-tuning method, Contribution-Driven Tuning (CDT), that meets accuracy requirements while speeding up the fine-tuning process. By analyzing the contribution of each layer to model performance, we modeled the search for the fine-tuning strategy that maximizes accuracy as an optimization problem, and we used a solver to identify the best fine-tuning approach. Compared with meta-learning, CDT reduces the time required for fine-tuning by up to 36% while maintaining accuracy.
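The abstract does not say how layer contributions are measured or which solver is used. As a purely illustrative sketch, assume each layer has a known, additive contribution score and an integer fine-tuning cost. Under that assumption, choosing which layers to unfreeze within a time budget becomes a 0/1 knapsack problem, solved below with dynamic programming in place of a general-purpose solver. The names `LayerProfile` and `select_layers`, the layer names, and all numbers are hypothetical and are not taken from the paper.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class LayerProfile:
    name: str            # hypothetical layer identifier
    contribution: float  # ASSUMED additive accuracy gain if this layer is fine-tuned
    cost: int            # ASSUMED fine-tuning time for this layer, in integer time units


def select_layers(layers: list[LayerProfile], budget: int) -> tuple[float, list[str]]:
    """Pick the subset of layers to unfreeze that maximizes total contribution
    without exceeding the time budget (0/1 knapsack via dynamic programming).
    This is an illustrative stand-in, not the CDT formulation itself."""
    if budget < 0:
        raise ValueError("budget must be non-negative")
    if any(layer.cost < 1 for layer in layers):
        raise ValueError("layer costs must be positive integers")

    # best[c] = (best total contribution, chosen layer indices) using at most c time units
    best: list[tuple[float, tuple[int, ...]]] = [(0.0, ())] * (budget + 1)
    for i, layer in enumerate(layers):
        # Iterate capacities downward so each layer is selected at most once.
        for c in range(budget, layer.cost - 1, -1):
            candidate = best[c - layer.cost][0] + layer.contribution
            if candidate > best[c][0]:
                best[c] = (candidate, best[c - layer.cost][1] + (i,))

    total, chosen = best[budget]
    return total, [layers[i].name for i in chosen]


# Hypothetical per-layer profiles for a small ResNet-style model.
layers = [
    LayerProfile("conv1", contribution=0.02, cost=3),
    LayerProfile("layer3", contribution=0.05, cost=4),
    LayerProfile("layer4", contribution=0.08, cost=5),
    LayerProfile("fc", contribution=0.10, cost=1),
]

if __name__ == "__main__":
    total, chosen = select_layers(layers, budget=6)
    print(chosen, round(total, 2))
```

With a budget of 6 units, the sketch selects `layer4` and `fc`, whose combined cost of 6 yields the highest assumed contribution. Treating contributions as additive is a simplification: in practice, fine-tuning one layer changes how much the others matter, which is one reason a richer model and a dedicated solver may be needed.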

Acknowledgement

This study was supported by the National Key R&D Program of China (2022YFB4501600).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Liu, Z., Zhou, N., Liu, M., Liu, Z., Xu, C. (2024). Efficient Neural Network Fine-Tuning via Layer Contribution Analysis. In: Huang, DS., Zhang, C., Chen, W. (eds) Advanced Intelligent Computing Technology and Applications. ICIC 2024. Lecture Notes in Computer Science, vol 14865. Springer, Singapore. https://doi.org/10.1007/978-981-97-5591-2_30

DOI: https://doi.org/10.1007/978-981-97-5591-2_30

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-5590-5

Online ISBN: 978-981-97-5591-2

eBook Packages: Computer Science, Computer Science (R0)