Abstract

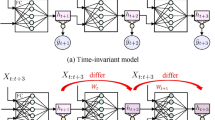

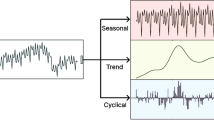

Long-term time series forecasting has widespread applications in many fields. Time series exhibit both seasonality and trend, yet existing models forecast in either the time domain or the frequency domain: the former generally struggle to learn seasonality, while the latter tend to overlook trend. In addition, the Picket Fence Effect biases frequency-domain observation, so frequency-domain features are captured inaccurately. In this study, we propose the Interpolation Frequency- and Time-domain Network (IFTNet), a time series forecasting framework that considers both the frequency and time domains as well as both the seasonality and trend of a series. Specifically, we model the seasonal and trend components of the time series separately. We introduce a novel frequency-domain interpolation module to mitigate the impact of the Picket Fence Effect. We also design a multi-scale depth-wise attention module to capture features at different scales within and across the periods of the time series. Experimental results show that, compared with other models, IFTNet achieves consistent improvements in prediction accuracy across multiple real-world time series datasets.
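To make the Picket Fence Effect concrete, the following minimal Python sketch (using NumPy) shows how a discrete Fourier transform observes the spectrum only at fixed bin frequencies, so a component that lies between two bins is reported at the wrong frequency. The sketch then samples the spectrum on a denser grid by zero-padding, a classical textbook remedy. This is an illustration only: the abstract does not describe how IFTNet's interpolation module works, and zero-padding is not claimed to be that module. The helper `dominant_frequency`, the sampling rate, and the test signal are all hypothetical choices made for this example.

```python
import numpy as np


def dominant_frequency(x, sample_rate, n_fft=None):
    """Return the frequency (Hz) of the largest-magnitude rFFT bin.

    Hypothetical helper for illustration; n_fft > len(x) zero-pads the
    signal, which samples the same underlying spectrum on a denser grid.
    """
    n_points = n_fft or len(x)
    spectrum = np.fft.rfft(x, n=n_points)
    freqs = np.fft.rfftfreq(n_points, d=1.0 / sample_rate)
    return freqs[np.argmax(np.abs(spectrum))]


# Assumed toy setup: 1 second of data at 100 Hz, so bins are 1 Hz apart.
sample_rate = 100.0
n = 100
t = np.arange(n) / sample_rate

# A sinusoid at 10.5 Hz sits exactly between bins 10 and 11.
true_freq = 10.5
x = np.sin(2 * np.pi * true_freq * t)

# Plain FFT: the peak can only land on an integer-Hz bin.
coarse = dominant_frequency(x, sample_rate)

# Zero-padded FFT: bins are 0.125 Hz apart, so 10.5 Hz is observable.
fine = dominant_frequency(x, sample_rate, n_fft=8 * n)

print(f"true: {true_freq} Hz, plain FFT: {coarse} Hz, zero-padded FFT: {fine} Hz")
```

Zero-padding adds no new information; it only evaluates the same spectrum at more frequencies, which is why it reduces the observation bias without improving the underlying resolution.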

Acknowledgments

This work is supported by the Joint Innovation Laboratory for Future Observation, Zhejiang University, focusing on the research of cloud-native observability technology based on GuanceCloud (JS20220726-0016).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Cheng, X., Yang, H., Wu, B., Zou, X., Chen, X., Zhao, R. (2024). IFTNet: Interpolation Frequency- and Time-Domain Network for Long-Term Time Series Forecasting. In: Huang, DS., Zhang, C., Pan, Y. (eds) Advanced Intelligent Computing Technology and Applications. ICIC 2024. Lecture Notes in Computer Science, vol 14876. Springer, Singapore. https://doi.org/10.1007/978-981-97-5666-7_3

DOI: https://doi.org/10.1007/978-981-97-5666-7_3

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-5665-0

Online ISBN: 978-981-97-5666-7

eBook Packages: Computer Science, Computer Science (R0)