Abstract

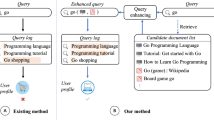

The core step in personalized search is to re-rank the candidate documents for the current query based on the user’s historical behavior sequence. This sequence contains several types of information, such as queries, documents, and clicks. Most existing personalized search approaches concatenate these types of information into an overall user representation, ignoring the associations among them. We argue that these associations are significant for personalized search. Using a hierarchical transformer as the base architecture, we design three auxiliary tasks to capture the associations among the different types of information in the user behavior sequence. Guided by mutual information maximization, we adjust the training loss so that our model, PSMIM, learns better information representations for personalized search. Experimental results demonstrate that our proposed method outperforms several existing personalized search methods.

References

Ahmad, W.U., Chang, K., Wang, H.: Multi-task learning for document ranking and query suggestion. In: 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30 - May 3, 2018, Conference Track Proceedings. OpenReview.net (2018). https://openreview.net/forum?id=SJ1nzBeA-

Ahmad, W.U., Chang, K.W., Wang, H.: Context attentive document ranking and query suggestion. In: Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 385–394. SIGIR’19, Association for Computing Machinery, New York, NY, USA (2019). https://doi.org/10.1145/3331184.3331246

Bennett, P.N., et al.: Modeling the impact of short- and long-term behavior on search personalization. In: Proceedings of the 35th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 185–194. SIGIR ’12, Association for Computing Machinery, New York, NY, USA (2012). https://doi.org/10.1145/2348283.2348312

Cai, F., Liang, S., de Rijke, M.: Personalized document re-ranking based on Bayesian probabilistic matrix factorization. In: Proceedings of the 37th International ACM SIGIR Conference on Research & Development in Information Retrieval, pp. 835–838. SIGIR ’14, Association for Computing Machinery, New York, NY, USA (2014). https://doi.org/10.1145/2600428.2609453

Dai, Z., Xiong, C., Callan, J., Liu, Z.: Convolutional neural networks for soft-matching n-grams in ad-hoc search. In: Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining, pp. 126–134. WSDM ’18, Association for Computing Machinery, New York, NY, USA (2018). https://doi.org/10.1145/3159652.3159659

Devlin, J., Chang, M.W., Lee, K., Toutanova, K.: BERT: pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pp. 4171–4186. Association for Computational Linguistics, Minneapolis, Minnesota (2019). https://doi.org/10.18653/v1/N19-1423, https://www.aclweb.org/anthology/N19-1423

Dou, Z., Song, R., Wen, J.R.: A large-scale evaluation and analysis of personalized search strategies. In: Proceedings of the 16th International Conference on World Wide Web, pp. 581–590. WWW ’07, Association for Computing Machinery, New York, NY, USA (2007). https://doi.org/10.1145/1242572.1242651

Ge, S., Dou, Z., Jiang, Z., Nie, J.Y., Wen, J.R.: Personalizing search results using hierarchical RNN with query-aware attention. In: Proceedings of the 27th ACM International Conference on Information and Knowledge Management, pp. 347–356. CIKM ’18, Association for Computing Machinery, New York, NY, USA (2018). https://doi.org/10.1145/3269206.3271728

Gutmann, M.U., Hyvärinen, A.: Noise-contrastive estimation of unnormalized statistical models, with applications to natural image statistics. J. Mach. Learn. Res. 13, 307–361 (2012)

Harvey, M., Crestani, F., Carman, M.J.: Building user profiles from topic models for personalised search. In: Proceedings of the 22nd ACM International Conference on Information & Knowledge Management, pp. 2309–2314. CIKM ’13, Association for Computing Machinery, New York, NY, USA (2013). https://doi.org/10.1145/2505515.2505642

Hjelm, R.D., et al.: Learning deep representations by mutual information estimation and maximization. In: 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019. OpenReview.net (2019). https://openreview.net/forum?id=Bklr3j0cKX

Huang, J., Zhang, W., Sun, Y., Wang, H., Liu, T.: Improving entity recommendation with search log and multi-task learning. In: Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI-18, pp. 4107–4114. International Joint Conferences on Artificial Intelligence Organization (2018). https://doi.org/10.24963/ijcai.2018/571

Kong, L., de Masson d’Autume, C., Yu, L., Ling, W., Dai, Z., Yogatama, D.: A mutual information maximization perspective of language representation learning. In: 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020. OpenReview.net (2020). https://openreview.net/forum?id=Syx79eBKwr

Logeswaran, L., Lee, H.: An efficient framework for learning sentence representations. In: 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30 - May 3, 2018, Conference Track Proceedings. OpenReview.net (2018). https://openreview.net/forum?id=rJvJXZb0W

Lu, S., Dou, Z., Jun, X., Nie, J.Y., Wen, J.R.: PSGAN: a minimax game for personalized search with limited and noisy click data. In: Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 555–564. SIGIR’19, Association for Computing Machinery, New York, NY, USA (2019). https://doi.org/10.1145/3331184.3331218

Lu, S., Dou, Z., Xiong, C., Wang, X., Wen, J.R.: Knowledge enhanced personalized search. In: Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 709–718. SIGIR ’20, Association for Computing Machinery, New York, NY, USA (2020). https://doi.org/10.1145/3397271.3401089

Ma, Z., Dou, Z., Bian, G., Wen, J.R.: PSTIE: time information enhanced personalized search. In: Proceedings of the 29th ACM International Conference on Information & Knowledge Management, pp. 1075–1084. CIKM ’20, Association for Computing Machinery, New York, NY, USA (2020). https://doi.org/10.1145/3340531.3411877

van den Oord, A., Li, Y., Vinyals, O.: Representation learning with contrastive predictive coding. CoRR abs/1807.03748 (2018). http://arxiv.org/abs/1807.03748

Paninski, L.: Estimation of entropy and mutual information. Neural Comput. 15(6), 1191–1253 (2003)

Pass, G., Chowdhury, A., Torgeson, C.: A picture of search. In: Proceedings of the 1st International Conference on Scalable Information Systems, p. 1-es. InfoScale ’06, Association for Computing Machinery, New York, NY, USA (2006). https://doi.org/10.1145/1146847.1146848

Robertson, S., Zaragoza, H.: The probabilistic relevance framework: BM25 and beyond. Found. Trends Inf. Retr. 3(4), 333–389 (2009). https://doi.org/10.1561/1500000019

Sieg, A., Mobasher, B., Burke, R.: Web search personalization with ontological user profiles. In: Proceedings of the Sixteenth ACM Conference on Conference on Information and Knowledge Management, pp. 525–534. CIKM ’07, Association for Computing Machinery, New York, NY, USA (2007). https://doi.org/10.1145/1321440.1321515

Teevan, J., Liebling, D.J., Ravichandran Geetha, G.: Understanding and predicting personal navigation. In: Proceedings of the Fourth ACM International Conference on Web Search and Data Mining, pp. 85–94. WSDM ’11, Association for Computing Machinery, New York, NY, USA (2011). https://doi.org/10.1145/1935826.1935848

Vu, T., Nguyen, D.Q., Johnson, M., Song, D., Willis, A.: Search personalization with embeddings. In: Jose, J.M., et al. (eds.) Advances in Information Retrieval, pp. 598–604. Springer International Publishing, Cham (2017). https://doi.org/10.1007/978-3-319-56608-5_54

Vu, T., Willis, A., Tran, S.N., Song, D.: Temporal latent topic user profiles for search personalisation. In: Hanbury, A., Kazai, G., Rauber, A., Fuhr, N. (eds.) Advances in Information Retrieval, pp. 605–616. Springer International Publishing, Cham (2015). https://doi.org/10.1007/978-3-319-16354-3_67

White, R.W., Chu, W., Hassan, A., He, X., Song, Y., Wang, H.: Enhancing personalized search by mining and modeling task behavior. In: Proceedings of the 22nd International Conference on World Wide Web, pp. 1411–1420. WWW ’13, Association for Computing Machinery, New York, NY, USA (2013). https://doi.org/10.1145/2488388.2488511

Xiong, C., Dai, Z., Callan, J., Liu, Z., Power, R.: End-to-end neural ad-hoc ranking with kernel pooling. ACM SIGIR Forum 51(cd), 55–64 (2017)

Yao, J., Dou, Z., Wen, J.R.: Employing personal word embeddings for personalized search. In: Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 1359–1368. SIGIR ’20, Association for Computing Machinery, New York, NY, USA (2020). https://doi.org/10.1145/3397271.3401153

Yao, J., Dou, Z., Xu, J., Wen, J.R.: RLPER: a reinforcement learning model for personalized search. In: Proceedings of The Web Conference 2020, pp. 2298–2308. WWW ’20, Association for Computing Machinery, New York, NY, USA (2020). https://doi.org/10.1145/3366423.3380294

Zhou, K., et al.: S3-Rec: self-supervised learning for sequential recommendation with mutual information maximization. In: Proceedings of the 29th ACM International Conference on Information & Knowledge Management, pp. 1893–1902. CIKM ’20, Association for Computing Machinery, New York, NY, USA (2020). https://doi.org/10.1145/3340531.3411954

Zhou, Y., Dou, Z., Wen, J.R.: Encoding history with context-aware representation learning for personalized search. In: Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 1111–1120. SIGIR ’20, Association for Computing Machinery, New York, NY, USA (2020). https://doi.org/10.1145/3397271.3401175

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable comments and suggestions to improve this paper. The study was supported by the China Postdoctoral Fellowship Program of CPSF (GZC20230287) and the Fundamental Research Funds for the Central Universities (2024QY004).

Ethics declarations

Disclosure of Interests

The authors have no competing interests to declare that are relevant to the content of this article.

Appendices

Appendix 1 Mutual Information Maximization

Mutual information maximization is a key strategy for integrating diverse forms of historical information. Rooted in information theory, mutual information (MI) quantifies the dependence between random variables. It is defined as:

\[ I(A, B) = H(A) - H(A \mid B) = H(B) - H(B \mid A) \]

where \(H(\cdot )\) denotes entropy and \(H(\cdot \mid \cdot )\) denotes conditional entropy.
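For discrete random variables the definition can be checked directly. The Python/NumPy sketch below is our own illustration, not part of PSMIM, and the helper names `entropy` and `mutual_information` are hypothetical. It computes \(I(A, B) = H(A) - H(A \mid B)\) from a joint probability table, so independent variables give 0 and two perfectly dependent binary variables give \(\log 2\) nats.

```python
import numpy as np


def entropy(p: np.ndarray) -> float:
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    p = p[p > 0]
    return float(-np.sum(p * np.log(p)))


def mutual_information(joint: np.ndarray) -> float:
    """I(A, B) = H(A) - H(A | B) for a discrete joint table joint[a, b]."""
    joint = joint / joint.sum()            # normalise to a valid distribution
    p_b = joint.sum(axis=0)                # marginal p(b)
    h_a = entropy(joint.sum(axis=1))       # H(A) from the marginal p(a)
    # H(A | B) = sum_b p(b) * H(A | B = b), skipping columns with p(b) = 0
    h_a_given_b = sum(
        p_b[j] * entropy(joint[:, j] / p_b[j])
        for j in range(joint.shape[1])
        if p_b[j] > 0
    )
    return h_a - h_a_given_b


dependent = np.array([[0.5, 0.0], [0.0, 0.5]])    # B fully determines A
independent = np.full((2, 2), 0.25)               # p(a, b) = p(a) p(b)
print(mutual_information(dependent), mutual_information(independent))
```

Because MI is symmetric, `mutual_information(joint.T)` returns the same value, which corresponds to the form \(H(B) - H(B \mid A)\).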

Suppose A and B are different views of the input data, such as a word and its context in NLP tasks or a document and its historical context sequence in personalized search. Let f be a function receiving \(A=a\) and \(B=b\) as inputs. The primary aim of maximizing MI is to tune the parameters of f to maximize the mutual information I(A, B), thereby extracting the most discriminative and salient attributes of the samples.

The essence of effective feature extraction is to distinguish a sample from the rest of the dataset by capturing its distinctive information, and maximizing mutual information isolates and exploits such characteristics. However, when f is a neural network or another learned encoder, directly optimizing MI is usually difficult [19]. A common workaround is therefore to maximize a tractable lower bound on I(A, B) that approximates it closely. One lower bound that has proved effective in practice is InfoNCE [14, 18], which is based on noise-contrastive estimation [9]. InfoNCE is defined as follows:

\[ I(A, B) \ge \mathbb {E}_{p(A, B)}\Big [ f_{\theta }(a, b) - \mathbb {E}_{q(\tilde{\mathcal {B}})}\Big [ \log \sum _{\tilde{b} \in \tilde{\mathcal {B}}} \exp f_{\theta }(a, \tilde{b}) \Big ] \Big ] + \log |\tilde{\mathcal {B}}| \]

where a and b are different views of the input data, and \(f_{\theta }\in {\mathbb {R}}\) is a scoring function with parameters \(\theta \) (for example, the dot product of the word and context representations, or their cosine similarity). \(\tilde{\mathcal {B}}\) is a set of samples drawn from the distribution \(q(\tilde{\mathcal {B}})\); it contains the positive sample b and \(|\tilde{\mathcal {B}}| - 1\) negative samples. Learning representations with this objective is also known as contrastive learning.
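The InfoNCE bound can be estimated on a mini-batch of N paired views: row i of `a` and row i of `b` form a positive pair, and the other \(N - 1\) rows of `b` serve as negatives for `a[i]`, so \(|\tilde{\mathcal {B}}| = N\). The NumPy sketch below assumes a temperature-scaled dot-product critic for \(f_\theta \); the function name and the `temperature` parameter are illustrative assumptions, not details reported for PSMIM.

```python
import numpy as np


def infonce_lower_bound(a: np.ndarray, b: np.ndarray, temperature: float = 1.0) -> float:
    """Mini-batch estimate of the InfoNCE lower bound on I(A, B).

    a, b: arrays of shape (N, d). Row i of a and row i of b are a positive
    pair; the remaining N - 1 rows of b are negatives for a[i].
    The critic f_theta(a, b) is assumed to be a scaled dot product.
    """
    scores = a @ b.T / temperature                     # scores[i, j] = f_theta(a_i, b_j)
    # numerically stable log-sum-exp over the candidate set for each a_i
    m = scores.max(axis=1, keepdims=True)
    log_sum_exp = (m + np.log(np.exp(scores - m).sum(axis=1, keepdims=True))).ravel()
    positive = np.diag(scores)                         # f_theta(a_i, b_i)
    n = a.shape[0]
    return float(np.mean(positive - log_sum_exp) + np.log(n))


rng = np.random.default_rng(0)
views_a = rng.normal(size=(8, 16))
views_b = views_a + 0.1 * rng.normal(size=(8, 16))    # correlated second view
print(infonce_lower_bound(views_a, views_b))
```

Since a positive score can never exceed the log-sum-exp over a candidate set that contains it, the estimate is at most \(\log N\); this is why InfoNCE needs many negatives to certify large MI values.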

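When \(\tilde{\mathcal {B}}\) always contains all possible values of B (i.e., \(\tilde{\mathcal {B}}=\mathcal {B}\)) and these values are uniformly distributed, InfoNCE takes a cross-entropy form: maximizing it is analogous to maximizing the objective below, which is the negative of the standard softmax cross-entropy loss, i.e., to minimizing that loss:

\[ \mathbb {E}_{p(A, B)}\Big [ f_{\theta }(a, b) - \log \sum _{\tilde{b} \in \mathcal {B}} \exp f_{\theta }(a, \tilde{b}) \Big ] \]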
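The correspondence can be seen concretely. If row i of a score matrix holds \(f_\theta (a_i, \tilde{b})\) for every candidate and the positive for \(a_i\) sits in column i, then the InfoNCE objective without the constant \(\log |\tilde{\mathcal {B}}|\) is exactly the negative of softmax cross-entropy with target class i. A NumPy sketch, with hypothetical helper names and random scores standing in for a trained critic:

```python
import numpy as np


def log_softmax(x: np.ndarray) -> np.ndarray:
    """Row-wise log-softmax, stabilised by subtracting the row maximum."""
    shifted = x - x.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def cross_entropy(logits: np.ndarray, targets: np.ndarray) -> float:
    """Standard softmax cross-entropy, averaged over rows."""
    return float(-np.mean(log_softmax(logits)[np.arange(len(targets)), targets]))


def infonce_objective(scores: np.ndarray) -> float:
    """Mean of f(a_i, b_i) - log sum_j exp f(a_i, b_j); positives on the diagonal."""
    lse = np.log(np.exp(scores).sum(axis=1))
    return float(np.mean(np.diag(scores) - lse))


rng = np.random.default_rng(0)
scores = rng.normal(size=(6, 6))     # f_theta(a_i, b_j) for 6 pairs
targets = np.arange(6)               # the positive for a_i is b_i
print(np.isclose(infonce_objective(scores), -cross_entropy(scores, targets)))  # True
```

This is why contrastive objectives are commonly implemented with an ordinary cross-entropy loss whose labels are the indices of the positive samples.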

Appendix 2 Implementation Details

The parameters of our model PSMIM are set as follows. The word embedding size is 100, the hidden size of the transformer layers in our base model is 512, the number of heads in multi-head attention is 8, and the size of the MLP hidden layer is 256. We set the hyperparameters \(z_1\), \(z_2\) and \(z_3\) of the three auxiliary tasks in Formula 15 to 1.0, and \(\alpha \) in Formula 16 to 1.0. We use the Adam optimizer with a learning rate of \(10^{-3}\) to minimize the final loss \(L_\textrm{total}\). In addition, the number of kernels in the KNRM model is 11.
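For reproduction, these settings can be gathered into one configuration object. The Python sketch below is hypothetical: the class and field names are ours, and the kernel layout follows the original KNRM paper (Xiong et al., 2017), namely an exact-match kernel at \(\mu = 1\) with \(\sigma = 10^{-3}\) plus ten soft-match kernels at \(\mu \in \{0.9, 0.7, \ldots , -0.9\}\) with \(\sigma = 0.1\). This chapter states only the kernel count, so the \((\mu , \sigma )\) values are an assumption.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class PSMIMConfig:
    """Hypothetical container for the hyperparameters reported for PSMIM."""
    word_embedding_size: int = 100
    transformer_hidden_size: int = 512
    num_attention_heads: int = 8
    mlp_hidden_size: int = 256
    # z1, z2, z3: weights of the three auxiliary tasks in Formula 15
    aux_task_weights: Tuple[float, float, float] = (1.0, 1.0, 1.0)
    alpha: float = 1.0                 # alpha in Formula 16
    optimizer: str = "adam"
    learning_rate: float = 1e-3
    knrm_num_kernels: int = 11

    def __post_init__(self) -> None:
        # multi-head attention splits the hidden size evenly across heads
        if self.transformer_hidden_size % self.num_attention_heads != 0:
            raise ValueError("hidden size must be divisible by the number of heads")


def knrm_kernels(n_kernels: int = 11) -> List[Tuple[float, float]]:
    """(mu, sigma) pairs laid out as in KNRM (assumed): one exact-match kernel at
    mu = 1.0 with a tiny sigma, then soft-match kernels with sigma = 0.1 evenly
    spaced from 1 - step / 2 down to -1 + step / 2."""
    if n_kernels < 2:
        raise ValueError("need the exact-match kernel plus at least one soft-match kernel")
    kernels = [(1.0, 1e-3)]
    step = 2.0 / (n_kernels - 1)       # 0.2 when n_kernels = 11
    mu = 1.0 - step / 2                # first soft-match centre: 0.9
    for _ in range(n_kernels - 1):
        kernels.append((round(mu, 6), 0.1))   # round away float drift
        mu -= step
    return kernels


config = PSMIMConfig()
kernels = knrm_kernels(config.knrm_num_kernels)
```

A frozen dataclass keeps the hyperparameters immutable during training, and the check in `__post_init__` rejects a hidden size that is not divisible by the number of heads (here 512 / 8 = 64 dimensions per head).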

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Wang, S., Zhang, H., Yuan, Z. (2025). Enhancing Sequence Representation for Personalized Search. In: Sun, M., et al. Chinese Computational Linguistics. CCL 2024. Lecture Notes in Computer Science, vol. 14761. Springer, Singapore. https://doi.org/10.1007/978-981-97-8367-0_2

DOI: https://doi.org/10.1007/978-981-97-8367-0_2

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-8366-3

Online ISBN: 978-981-97-8367-0

eBook Packages: Computer Science, Computer Science (R0)