Abstract

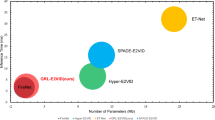

Event-based bionic cameras capture dynamic scenes asynchronously with high temporal resolution and high dynamic range. This makes fusing events with RGB frames promising under degraded illumination and fast motion. Existing RGB-E tracking methods model event characteristics with the Transformer attention mechanism before fusing the two modalities. However, these methods aggregate the event stream into a single event frame and therefore fail to exploit the temporal information inherent in the stream. Moreover, the conventional attention mechanism is well suited to dense semantic features, whereas sparse event features call for a redesigned attention mechanism. In this paper, we propose a dynamic event subframe splitting strategy that splits the event stream into finer-grained event clusters, aiming to capture spatio-temporal features that contain motion cues. Building on this, we design an event-based sparse attention mechanism to strengthen the interaction of event features in the temporal and spatial dimensions. Experimental results show that our method outperforms existing state-of-the-art methods on the FE240 and COESOT datasets, providing an effective way to process event data.
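The abstract does not give implementation details, so the following is only a minimal sketch under our own assumptions. It contrasts the single-event-frame representation with splitting the stream into subframes, then applies attention restricted to subframe tokens that actually received events. Several things are assumptions and not taken from the paper. Events are modelled as `(t, x, y, p)` tuples. The "dynamic" split is approximated by equal-event-count windows, so a window covers less time when events arrive densely. The "sparse" attention is approximated by masking out tokens without events. The function names `accumulate`, `split_by_count` and `sparse_attention` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical event layout (an assumption): (timestamp, x, y, polarity in {-1, +1}).
Event = Tuple[float, int, int, int]


def accumulate(events: Sequence[Event], height: int, width: int) -> List[List[int]]:
    """Sum polarities per pixel. Applied to a whole stream, this yields the
    single-event-frame representation, which discards timing within the window."""
    frame = [[0] * width for _ in range(height)]
    for _, x, y, p in events:
        frame[y][x] += p
    return frame


def split_by_count(events: Sequence[Event], num_subframes: int) -> List[List[Event]]:
    """Split a stream into time-ordered subframes with (nearly) equal event counts.

    Illustrative stand-in for a dynamic splitting strategy: because each window
    holds a fixed share of events, it spans less time during fast motion.
    """
    ordered = sorted(events, key=lambda e: e[0])
    n = len(ordered)
    cuts = [i * n // num_subframes for i in range(num_subframes + 1)]
    return [ordered[cuts[i]:cuts[i + 1]] for i in range(num_subframes)]


def sparse_attention(
    queries: Sequence[Sequence[float]],
    keys: Sequence[Sequence[float]],
    values: Sequence[Sequence[float]],
    active: Sequence[bool],
) -> List[List[float]]:
    """Scaled dot-product attention in which each query attends only to keys
    flagged as active (e.g. tokens that received events); inactive keys get zero weight."""
    idx = [j for j, on in enumerate(active) if on]
    dim_v = len(values[0])
    if not idx:  # no event-bearing tokens: return zero vectors
        return [[0.0] * dim_v for _ in queries]
    scale = 1.0 / math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        scores = [scale * sum(a * b for a, b in zip(q, keys[j])) for j in idx]
        m = max(scores)  # subtract the max for a numerically stable softmax
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(w[k] * values[j][c] for k, j in enumerate(idx)) / z
                    for c in range(dim_v)])
    return out


if __name__ == "__main__":
    # Toy stream of 12 events on a 4x4 sensor, alternating polarity.
    stream = [(0.001 * i, i % 4, (i // 4) % 4, 1 if i % 2 else -1) for i in range(12)]
    subframes = split_by_count(stream, 3)
    print([len(s) for s in subframes])  # [4, 4, 4]
    # One token per subframe: the flattened accumulated subframe.
    tokens = [[float(v) for row in accumulate(s, 4, 4) for v in row] for s in subframes]
    active = [len(s) > 0 for s in subframes]
    fused = sparse_attention(tokens, tokens, tokens, active)
    print(len(fused), len(fused[0]))  # 3 16
```

Treating each subframe as a token lets attention relate features across time, which a single accumulated frame cannot do. Masking empty tokens is one simple way to avoid spending attention on regions with no events. The paper's actual mechanism may differ.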

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Shao, P., Xu, T., Zhu, XF., Wu, XJ., Kittler, J. (2025). Dynamic Subframe Splitting and Spatio-Temporal Motion Entangled Sparse Attention for RGB-E Tracking. In: Lin, Z., et al. Pattern Recognition and Computer Vision. PRCV 2024. Lecture Notes in Computer Science, vol 15043. Springer, Singapore. https://doi.org/10.1007/978-981-97-8493-6_8

DOI: https://doi.org/10.1007/978-981-97-8493-6_8

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-8492-9

Online ISBN: 978-981-97-8493-6

eBook Packages: Computer Science, Computer Science (R0)