Abstract

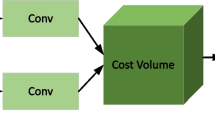

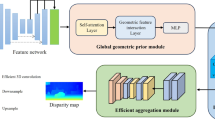

Stereo matching is a critical research area in computer vision. With the advance of deep learning, cost-filtering methods are gradually being replaced by iterative optimization techniques, which offer outstanding generalization performance. However, cost volumes constructed solely through recurrent all-pairs field transforms in iterative optimization methods lack adequate image information, which makes it difficult to resolve blurring in pathological regions, such as those with illumination changes or similar textures. In this paper, we propose SCA-Stereo, a disparity refinement network that further optimizes the initial disparity map produced by the iterations. First, we introduce a high- and low-frequency feature extractor to capture the structural and fine-detail information inherent in the image. Second, we propose a cross-modal feature fusion module that exchanges and integrates these diverse features and expands the receptive field to enhance information flow. Finally, we design a global hourglass aggregation network to efficiently capture non-local interactions among the fused features. Extensive experiments on Scene Flow, KITTI, Middlebury, and ETH3D demonstrate that SCA-Stereo achieves state-of-the-art stereo matching performance.
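
For context, the all-pairs correlation underlying the cost volumes of RAFT-style iterative methods can be sketched in a few lines. This is a minimal pure-Python illustration and not the SCA-Stereo implementation: the function names, the 1/sqrt(C) scaling, the toy one-hot features, and the winner-take-all readout (a simple stand-in for the recurrent updates) are all assumptions made for this example.

```python
import math


def all_pairs_correlation(left, right):
    """Correlate every left pixel with every right pixel on the same row.

    left, right: feature maps as nested lists shaped [H][W][C].
    Returns volume[h][x1][x2], the scaled dot product of
    left[h][x1] and right[h][x2].
    """
    channels = len(left[0][0])
    scale = 1.0 / math.sqrt(channels)  # assumed normalisation
    return [
        [[scale * sum(a * b for a, b in zip(f1, f2)) for f2 in right_row]
         for f1 in left_row]
        for left_row, right_row in zip(left, right)
    ]


def winner_take_all_disparity(volume):
    """Hypothetical readout: best-matching right column x2 <= x1 per pixel."""
    result = []
    for row in volume:
        out = []
        for x1, scores in enumerate(row):
            candidates = scores[: x1 + 1]  # disparity x1 - x2 must be >= 0
            best_x2 = max(range(len(candidates)), key=candidates.__getitem__)
            out.append(x1 - best_x2)
        result.append(out)
    return result


# Toy scene: one row, four pixels, distinctive (one-hot) features.
e = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
left = [[e[0], e[1], e[2], e[3]]]
# The right view sees the same content shifted by one pixel.
right = [[e[1], e[2], e[3], [0, 0, 0, 0]]]

volume = all_pairs_correlation(left, right)
disparity = winner_take_all_disparity(volume)

# Repeated texture: every pixel has the same feature, so all scores tie.
flat = [[e[0], e[0], e[0], e[0]]]
flat_scores = all_pairs_correlation(flat, flat)[0][3]
```

In the toy scene the distinctive features give an unambiguous match for every pixel that is visible in both views. In the repeated-texture row every candidate receives the same score, so the correlation alone cannot decide between them; this is the kind of ambiguity in similar-texture regions that the abstract refers to.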

Acknowledgments.

This work was supported by the Natural Science Foundation of Jiangsu Province (No. BK20181340), and the Engineering Research Center of Integration and Application of Digital Learning Technology, Ministry of Education (No. 1311013).

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Wang, G., Yang, J., Wang, Y. (2025). Disparity Refinement Based on Cross-Modal Feature Fusion and Global Hourglass Aggregation for Robust Stereo Matching. In: Lin, Z., et al. Pattern Recognition and Computer Vision. PRCV 2024. Lecture Notes in Computer Science, vol 15036. Springer, Singapore. https://doi.org/10.1007/978-981-97-8508-7_15

DOI: https://doi.org/10.1007/978-981-97-8508-7_15

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-8507-0

Online ISBN: 978-981-97-8508-7

eBook Packages: Computer Science, Computer Science (R0)