Abstract

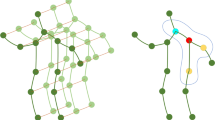

Early human action prediction aims to predict a complete action from only the partial sequence observed at its onset. A single action usually depends on the coordinated movement of several key body parts, and those parts move only slightly when the action begins. Early prediction is therefore highly sensitive to where the motion starts and to the type of action. Current skeleton-based action prediction methods focus mainly on classification and make limited use of the semantic relationships between actions. For instance, actions dominated by elbow-joint movement, such as “touching the neck” and “touching the head,” are hard to separate by classification alone but can be distinguished through their semantic relationships. Incorporating descriptions of the specific joint movements involved can therefore strengthen the model's feature extraction when it must differentiate similar actions. This paper introduces the Action Description-Assisted Learning Graph Convolutional Network (ADAL-GCN). It uses large language models as knowledge engines to pre-generate descriptions of the key body parts involved in each action. A text encoder then transforms these descriptions into semantically rich feature vectors. The model also adopts a lightweight design: it decouples features along the channel and temporal dimensions, consolidates redundant network modules, and performs strategic computational migration to improve processing efficiency. Experimental results show that the proposed method significantly improves performance and substantially reduces training time without additional computational overhead.
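The abstract says that LLM-written part descriptions are encoded into feature vectors, but it does not say how those vectors supervise the skeleton branch. The following is a minimal sketch under stated assumptions, not the authors' method. It assumes a CLIP-style matching objective: each sample's per-part skeleton features are compared by cosine similarity with precomputed description embeddings for every class and part. The similarities are averaged over parts, scaled by a temperature, and trained with cross-entropy against the true class. The function `part_description_loss`, the shapes `(B, P, D)` and `(K, P, D)`, and `temperature=0.1` are all hypothetical. The text encoder itself is not shown; its outputs are assumed to be available as arrays.

```python
import numpy as np


def l2_normalize(x, eps=1e-8):
    # Normalize along the feature axis so dot products become cosine similarities.
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def log_softmax(z):
    # Numerically stable log-softmax over the last axis.
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def part_description_loss(part_feats, desc_embeds, labels, temperature=0.1):
    """Hypothetical description-aided loss (assumption, not from the paper).

    part_feats:  (B, P, D) skeleton features pooled per body part
    desc_embeds: (K, P, D) text embeddings of LLM-written descriptions,
                 one per (action class, body part), computed offline
    labels:      (B,) ground-truth class indices
    """
    s = l2_normalize(part_feats)
    t = l2_normalize(desc_embeds)
    # Cosine similarity between part p of each sample and class k's description of part p.
    sim = np.einsum("bpd,kpd->bpk", s, t)
    # Average over body parts, then sharpen with the temperature: (B, K) logits.
    logits = sim.mean(axis=1) / temperature
    logp = log_softmax(logits)
    # Cross-entropy: mean negative log-probability of the correct class.
    return -logp[np.arange(labels.shape[0]), labels].mean()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    B, P, K, D = 4, 6, 10, 32
    desc = rng.normal(size=(K, P, D))
    labels = np.array([0, 3, 3, 7])
    # Features close to the matching class descriptions vs. unrelated features.
    aligned = desc[labels] + 0.01 * rng.normal(size=(B, P, D))
    unrelated = rng.normal(size=(B, P, D))
    print(part_description_loss(aligned, desc, labels),
          part_description_loss(unrelated, desc, labels))
```

Averaging per-part similarities reflects the abstract's emphasis on key body parts. A learned weighting over parts, or an auxiliary loss added to the ordinary classification loss, would be equally plausible variants.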

This work was supported in part by the Natural Science Foundation of the Xinjiang Uygur Autonomous Region, China, under Grant No. 2022D01A59; the National Natural Science Foundation of China under Grant No. U20A20167; the Key Research Foundation of Integration of Industry and Education and the Development of New Business Studies Research Center, Xinjiang University of Science and Technology, under Grant No. 2022-KYZD02; and the Innovation Capability Improvement Plan Project of Hebei Province under Grant No. 22567637H. The authors also gratefully acknowledge the reviewers' helpful comments and suggestions, which have improved the paper.




Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Li, X., Dong, Y., Ning, X., Zhang, P., Zhao, F. (2025). ADAL-GCN: Action Description Aided Learning Graph Convolution Network for Early Action Prediction. In: Lin, Z., et al. Pattern Recognition and Computer Vision. PRCV 2024. Lecture Notes in Computer Science, vol 15041. Springer, Singapore. https://doi.org/10.1007/978-981-97-8795-1_1


DOI: https://doi.org/10.1007/978-981-97-8795-1_1

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-8794-4

Online ISBN: 978-981-97-8795-1

eBook Packages: Computer Science, Computer Science (R0)