Abstract

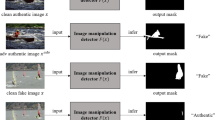

The widespread use of Deepfake technology poses a significant threat to societal security, making Deepfake detection a critical area of research. In recent years, forgery detection methods based on reconstruction errors have attracted considerable attention for their strong performance and generalization. However, these methods typically focus on spatial reconstruction errors and neglect the potential of frequency-based reconstruction errors. In this paper, we propose a novel Deepfake detection framework based on Spatial-Frequency Dual-stream Reconstruction (SFDR). Specifically, our approach uses both the frequency reconstruction error and the spatial reconstruction error, which provide complementary information for detection. In addition, during reconstruction, we enforce consistency of frequency content between genuine images and their reconstructed versions. Finally, to mitigate the adverse impact of the reconstruction task on forgery detection performance, we refine the reconstruction loss to minimize the discrepancy between genuine images and their reconstructions while simultaneously maximizing the difference between manipulated images and their reconstructions. Experimental results on multiple challenging forgery datasets show that our method achieves superior detection performance and generalization ability.
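To make the two error signals and the refined loss concrete, the following is a minimal NumPy sketch and not the implementation used in the paper. The abstract does not give the exact formulation, so everything here is an assumption made for illustration: the function names, the use of 2D DFT amplitude spectra for the frequency stream, the L1 distance, and the hinge with a hypothetical `margin` parameter that bounds how far manipulated images are pushed from their reconstructions.

```python
import numpy as np


def spatial_error(image, recon):
    """Per-pixel absolute difference between an image and its reconstruction."""
    return np.abs(image - recon)


def frequency_error(image, recon):
    """Absolute difference between 2D DFT amplitude spectra (assumed frequency stream)."""
    amp_image = np.abs(np.fft.fft2(image, norm="ortho"))
    amp_recon = np.abs(np.fft.fft2(recon, norm="ortho"))
    return np.abs(amp_image - amp_recon)


def refined_reconstruction_loss(images, recons, is_real, margin=0.5):
    """Assumed hinge-style loss over a batch of shape (N, H, W).

    Genuine samples (is_real == 1) are penalised by their mean reconstruction
    error, so the error is minimised. Manipulated samples (is_real == 0) are
    penalised only while their error is below `margin`, so the error is pushed up.
    """
    err = np.mean(np.abs(images - recons), axis=(1, 2))  # one value per sample
    real_term = err * is_real
    fake_term = np.maximum(0.0, margin - err) * (1.0 - is_real)
    return float(np.mean(real_term + fake_term))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    images = rng.random((2, 8, 8))
    recons = images.copy()
    recons[1] += 0.3 * rng.standard_normal((8, 8))  # poorly reconstructed sample
    labels = np.array([1.0, 0.0])  # first sample genuine, second manipulated
    print("spatial error mean:", spatial_error(images[1], recons[1]).mean())
    print("frequency error mean:", frequency_error(images[1], recons[1]).mean())
    print("loss:", refined_reconstruction_loss(images, recons, labels))
```

In a detector along these lines, the two error maps would be passed to a classifier as complementary inputs; how SFDR actually fuses them is not stated in the abstract.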

Acknowledgement

This work was supported in part by the National Natural Science Foundation of China under Grant 62276198, Grant U22A2035, Grant U22A2096 and Grant 62306227; in part by the Key Research and Development Program of Shaanxi (Program No. 2023-YBGY-231); in part by the Young Elite Scientists Sponsorship Program by CAST under Grant 2022QNRC001; in part by the Guangxi Natural Science Foundation Program under Grant 2021GXNSFDA075011; in part by the Xi’an Science and Technology Plan Project under Grant 23GJSY0004; in part by the Open Research Project of Key Laboratory of Artificial Intelligence Ministry of Education under Grant AI202401; and in part by the Fundamental Research Funds for the Central Universities under Grant QTZX23083, Grant QTZX23042, and Grant ZYTS24142.

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Peng, C., Chen, T., Liu, D., Zheng, Y., Wang, N. (2025). Spatial-Frequency Dual-Stream Reconstruction for Deepfake Detection. In: Lin, Z., et al. Pattern Recognition and Computer Vision. PRCV 2024. Lecture Notes in Computer Science, vol 15041. Springer, Singapore. https://doi.org/10.1007/978-981-97-8795-1_32

DOI: https://doi.org/10.1007/978-981-97-8795-1_32

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-8794-4

Online ISBN: 978-981-97-8795-1

eBook Packages: Computer Science, Computer Science (R0)