Abstract

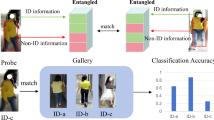

In recent years, the increasing demand for long-term pedestrian retrieval has brought the cloth-changing person re-identification (CC-ReID) challenge into the spotlight. Over long time spans, two main challenges arise: (1) interference from clothing and background; (2) extraction of identity-sensitive information. To address these issues, we introduce a robust framework called the Mask-guided clothes-irrelevant and background-irrelevant Network (Magic-Net). Magic-Net employs knowledge distillation across two distinct streams: the outline stream and the exposed stream. The outline stream captures the pedestrian’s contour, minimizing the impact of clothing and background, while the exposed stream enriches identity-sensitive information from the pedestrian’s exposed areas. Integrating the two streams focuses the model on the regions that are critical for re-identification. Evaluations on several benchmark datasets demonstrate Magic-Net’s exceptional performance in tackling the CC-ReID challenge.
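To make the two ideas named in the abstract concrete, the following is a minimal, illustrative sketch of mask-guided input selection and of a soft-target distillation loss between two streams. It is not the authors' implementation. The abstract does not say which stream distills into which, which loss is used, or how masks are produced, so the function names (`apply_mask`, `distillation_loss`), the roles `source_logits` and `target_logits`, the temperature value, and the toy data are all assumptions chosen for illustration.

```python
import math


def apply_mask(image, mask):
    """Keep pixels where mask is 1 and zero out the rest.

    `image` and `mask` are equally sized 2-D lists (a toy stand-in for
    selecting a region such as exposed body areas).
    """
    return [[px * m for px, m in zip(row, mrow)] for row, mrow in zip(image, mask)]


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def distillation_loss(source_logits, target_logits, temperature=4.0):
    """Generic soft-target distillation: T^2 * KL(source || target).

    Assumption: `source_logits` come from the stream whose knowledge is
    transferred and `target_logits` from the stream that receives it.
    """
    p_source = softmax(source_logits, temperature)
    p_target = softmax(target_logits, temperature)
    return temperature ** 2 * kl_divergence(p_source, p_target)


if __name__ == "__main__":
    toy_image = [[5, 7], [9, 11]]
    toy_mask = [[1, 0], [0, 1]]  # hypothetical mask of the kept region
    print(apply_mask(toy_image, toy_mask))

    # Hypothetical identity logits produced by the two streams.
    print(distillation_loss([1.0, 2.0, 3.0], [3.0, 2.0, 1.0]))
```

The loss is zero when both streams produce the same distribution and grows as they disagree, which is the general mechanism by which distillation pulls one stream's predictions toward the other's.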

Acknowledgement

This work is supported by the Xiamen Natural Science Foundation (Grant No. 3502Z202372034), the research startup foundation of Huaqiao University (Grant No. 20201XD022, Grant No. HQJGYB2406), and Quanzhou Science and Technology Projects (Grant No. 2023N013).

Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Zhu, G., Liu, G., Chen, L., Liao, G., Zeng, H. (2025). Mask-Guided Clothes-Irrelevant and Background-Irrelevant Network with Knowledge Propagation for Cloth-Changing Person Re-identification. In: Lin, Z., et al. Pattern Recognition and Computer Vision. PRCV 2024. Lecture Notes in Computer Science, vol 15042. Springer, Singapore. https://doi.org/10.1007/978-981-97-8858-3_16

DOI: https://doi.org/10.1007/978-981-97-8858-3_16

Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-8857-6

Online ISBN: 978-981-97-8858-3

eBook Packages: Computer Science, Computer Science (R0)