Abstract

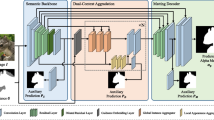

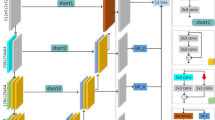

Recent human matting methods typically suffer from two drawbacks: 1) high computational overhead caused by multiple stages, and 2) limited practical applicability due to the need for auxiliary guidance (e.g., trimap, mask, or background). To address these issues, we propose EfficientMatting, a real-time human matting method that takes only a single image as input. Specifically, EfficientMatting incorporates a bilateral network composed of two complementary branches: a transformer-based context information branch and a CNN-based spatial information branch. Furthermore, we introduce three novel techniques to enhance model performance while maintaining high inference efficiency. First, we design a Semantic Guided Fusion Module (SGFM), which enables the model to dynamically select valuable features with the assistance of context information. Second, we design a lightweight Detail Preservation Module (DPM) to preserve details and mitigate image artifacts during upsampling. Third, we introduce the Supervised-Enhanced Training Strategy (SETS) to explicitly supervise hidden features. Extensive experiments on the P3M-10k, Human-2K, and PPM-100 datasets show that EfficientMatting outperforms state-of-the-art real-time human matting methods in terms of both model performance and inference speed.
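The abstract does not specify how the two branches are combined, so the following is only a minimal sketch of one common way that context features can guide fusion: a sigmoid gate computed from the context feature decides, element by element, how much of the spatial feature is kept. The function name `gated_fusion`, the gating formula, and the use of plain Python lists in place of feature maps are all assumptions made for illustration; this is not the SGFM as defined in the paper.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(context, spatial):
    """Blend two equal-length feature vectors element-wise.

    Hypothetical illustration only (not the paper's SGFM):
        gate  = sigmoid(context)
        fused = gate * spatial + (1 - gate) * context
    A strongly positive context activation lets the spatial detail
    through; a strongly negative one keeps the context value instead.
    """
    if len(context) != len(spatial):
        raise ValueError("context and spatial must have the same length")
    fused = []
    for c, s in zip(context, spatial):
        gate = sigmoid(c)
        fused.append(gate * s + (1.0 - gate) * c)
    return fused


if __name__ == "__main__":
    # Toy features standing in for one channel of each branch.
    context_features = [0.0, 4.0, -4.0]
    spatial_features = [2.0, 2.0, 2.0]
    print(gated_fusion(context_features, spatial_features))
```

In a real network the gate would typically be produced by learned layers over whole feature maps rather than applied directly to raw activations; the sketch keeps only the gating idea.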




Copyright information

© 2025 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Luo, R., Wei, R., Zhang, H., Tian, M., Gao, C., Sang, N. (2025). EfficientMatting: Bilateral Matting Network for Real-Time Human Matting. In: Lin, Z., et al. Pattern Recognition and Computer Vision. PRCV 2024. Lecture Notes in Computer Science, vol 15042. Springer, Singapore. https://doi.org/10.1007/978-981-97-8858-3_9


DOI: https://doi.org/10.1007/978-981-97-8858-3_9


Publisher Name: Springer, Singapore

Print ISBN: 978-981-97-8857-6

Online ISBN: 978-981-97-8858-3

eBook Packages: Computer Science, Computer Science (R0)