Abstract

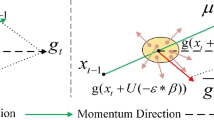

Neural networks have achieved state-of-the-art results in many fields. Further research, however, has shown that neural network models are vulnerable to adversarial examples, which are inputs carefully designed to fool the model. Adversarial training generates adversarial samples during training and trains the model directly on them, which improves the model's robustness to such samples. In adversarial training, the stronger the attack used to generate the adversarial samples, the more robust the resulting model. In this paper, we incorporate the idea of momentum into the projected gradient descent (PGD) attack algorithm and propose a novel momentum-PGD attack algorithm (M-PGD) that greatly improves the attack ability of PGD. We then train a neural network model on the adversarial samples generated by M-PGD, which greatly improves the robustness of the adversarially trained model. We compare our adversarial training model with five other adversarial training models on the CIFAR-10 and CIFAR-100 datasets. Experiments show that our model is substantially more robust to adversarial samples than the other adversarial training models. We hope our adversarial training model will be used as a benchmark in the future to test the attack ability of attack models.
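The abstract does not state the M-PGD update rule, so the following is only a minimal sketch of how momentum might be combined with PGD, not the authors' implementation. It assumes an L∞ threat model, a random start and projection step as in standard PGD, and an L1-normalized accumulated gradient in the style of momentum iterative attacks. A linear softmax classifier stands in for the neural network so that the gradient can be written by hand. The function names (`xent_and_dlogits`, `momentum_pgd`, `adversarial_training_step`) and the default values of `eps`, `alpha`, `steps`, and `mu` are hypothetical choices made for illustration.

```python
import numpy as np

def xent_and_dlogits(W, b, x, y):
    """Per-sample cross-entropy loss and its gradient w.r.t. the logits
    for a linear softmax classifier (a toy stand-in for a neural network)."""
    logits = x @ W + b
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)
    idx = np.arange(x.shape[0])
    loss = -np.log(p[idx, y] + 1e-12)
    dlogits = p.copy()
    dlogits[idx, y] -= 1.0  # softmax probabilities minus one-hot labels
    return loss, dlogits

def momentum_pgd(W, b, x, y, eps=8 / 255, alpha=2 / 255, steps=10, mu=1.0, rng=None):
    """Assumed momentum-PGD attack: random start, momentum-accumulated
    gradient, signed step, projection onto the L-infinity ball around x."""
    rng = np.random.default_rng(0) if rng is None else rng
    # Random start inside the eps-ball, kept in the valid pixel range [0, 1].
    x_adv = np.clip(x + rng.uniform(-eps, eps, size=x.shape), 0.0, 1.0)
    g = np.zeros_like(x)  # accumulated (momentum) gradient
    for _ in range(steps):
        _, dlogits = xent_and_dlogits(W, b, x_adv, y)
        grad = dlogits @ W.T  # gradient of the loss w.r.t. the input
        norm = np.abs(grad).sum(axis=1, keepdims=True) + 1e-12
        g = mu * g + grad / norm  # L1-normalized momentum update
        x_adv = x_adv + alpha * np.sign(g)  # ascent step on the loss
        x_adv = np.clip(x_adv, x - eps, x + eps)  # project onto the eps-ball
        x_adv = np.clip(x_adv, 0.0, 1.0)  # stay in the valid input range
    return x_adv

def adversarial_training_step(W, b, x, y, lr=0.1, **attack_kwargs):
    """One gradient-descent step on adversarial samples instead of clean ones."""
    x_adv = momentum_pgd(W, b, x, y, **attack_kwargs)
    _, dlogits = xent_and_dlogits(W, b, x_adv, y)
    n = x.shape[0]
    W_new = W - lr * (x_adv.T @ dlogits) / n
    b_new = b - lr * dlogits.mean(axis=0)
    return W_new, b_new
```

Setting `mu=0` in this sketch reduces it to a plain signed-gradient PGD attack, which makes it easy to compare attack strength with and without momentum under the same perturbation budget. For a real network, the hand-written input gradient would be replaced by automatic differentiation.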

Acknowledgements

This work is supported by the National Key R&D Program of China (No. 2020YFB1006105).

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

He, C. et al. (2023). Boosting the Robustness of Neural Networks with M-PGD. In: Tanveer, M., Agarwal, S., Ozawa, S., Ekbal, A., Jatowt, A. (eds) Neural Information Processing. ICONIP 2022. Communications in Computer and Information Science, vol 1791. Springer, Singapore. https://doi.org/10.1007/978-981-99-1639-9_47

DOI: https://doi.org/10.1007/978-981-99-1639-9_47

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-1638-2

Online ISBN: 978-981-99-1639-9

eBook Packages: Computer Science, Computer Science (R0)